WRF4L


There is a far more powerful tool to manage the WRF work-flow: [http://www.meteo.unican.es/software/wrf4g/ WRF4G].

Lluís expanded this work to other HPCs and models; see more details in the Git repository [https://git.cima.fcen.uba.ar/lluis.fita/workflows/-/wikis/home WRFL].

Lluís developed a less powerful tool, which is described here.

WRF work-flow management is done via multiple scripts (these are the specifics for hydra, the CIMA cluster):

* <code>EXPERIMENTparameters.txt</code>: general ASCII file which configures the experiment and the chain of simulations (chunks). This is the only file that needs to be modified
* <code>launch_experiment.pbs</code>: PBS-queue job which prepares the environment of the experiment
* <code>wrf4l_prepare_WPS.pbs</code>: PBS-queue job which prepares the WPS section of the model: <code>ungrib.exe</code>, <code>metgrid.exe</code>, <code>real.exe</code>
* <code>wrf4l_prepare_WRF.pbs</code>: PBS-queue job which prepares the WRF section of the model: <code>wrf.exe</code>
* <code>wrf4l_prepare_nc2wps.pbs</code>: PBS-queue job which prepares the execution of nc2wps for the chunk
* <code>wrf4l_prepare_ungrib.pbs</code>: PBS-queue job which prepares the execution of ungrib for the chunk
* <code>wrf4l_launch_nc2wps.pbs</code>: PBS-queue job which launches nc2wps: <code>netcdf2wps</code>
* <code>wrf4l_launch_ungrib.pbs</code>: PBS-queue job which launches ungrib: <code>ungrib.exe</code>
* <code>wrf4l_launch_metgrid.pbs</code>: PBS-queue job which launches metgrid: <code>metgrid.exe</code>
* <code>wrf4l_launch_real.pbs</code>: PBS-queue job which launches real: <code>real.exe</code>
* <code>wrf4l_launch_wrf.pbs</code>: PBS-queue job which launches wrf: <code>wrf.exe</code>
* <code>wrf4l_prepare-next_nc2wps.pbs</code>: PBS-queue job which prepares the execution of nc2wps for the next chunk
* <code>wrf4l_prepare-next_ungrib.pbs</code>: PBS-queue job which prepares the execution of ungrib for the next chunk
* <code>wrf4l_finishin_simulation.pbs</code>: PBS-queue job which ends the simulation of the chunk

These PBS jobs are executed consecutively: each one is put on hold until the preceding step has finished.
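The exact submission commands used inside the WRF4L scripts are not shown on this page. As a minimal sketch of the idea, assuming a PBS/Torque scheduler, each job can be submitted with <code>qsub -W depend=afterok:[previous job ID]</code>, so that it stays on hold until the previous job finishes successfully. The helpers <code>submit_chain</code> and <code>fake_qsub</code> are hypothetical; <code>fake_qsub</code> only stands in for <code>qsub</code> so the sketch runs without a PBS server.

```shell
#!/bin/sh
# Hypothetical sketch (not the WRF4L implementation): submit PBS scripts so
# that each one is held until the previous job has finished successfully.

# Stand-in for qsub: ignores the options and prints a fake job ID built
# from the script name (the last argument).
fake_qsub() {
  for last in "$@"; do :; done
  echo "job-$(basename "$last" .pbs)"
}

# submit_chain <submit-command> <script1.pbs> [script2.pbs ...]
submit_chain() {
  submit=$1
  shift
  prev=""
  for script in "$@"; do
    if [ -z "$prev" ]; then
      id=$($submit "$script")
    else
      # afterok: start only if the previous job ended without error
      id=$($submit -W "depend=afterok:$prev" "$script")
    fi
    echo "$script -> $id${prev:+ (after $prev)}"
    prev=$id
  done
}
```

For example, <code>submit_chain fake_qsub wrf4l_prepare_WPS.pbs wrf4l_launch_ungrib.pbs</code> prints <code>wrf4l_prepare_WPS.pbs -> job-wrf4l_prepare_WPS</code> and then <code>wrf4l_launch_ungrib.pbs -> job-wrf4l_launch_ungrib (after job-wrf4l_prepare_WPS)</code>; passing <code>qsub</code> instead of <code>fake_qsub</code> would submit the jobs for real.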

The folder <code>/share/tools/workflows/components</code> contains the shell and Python scripts required for the work-flow management.

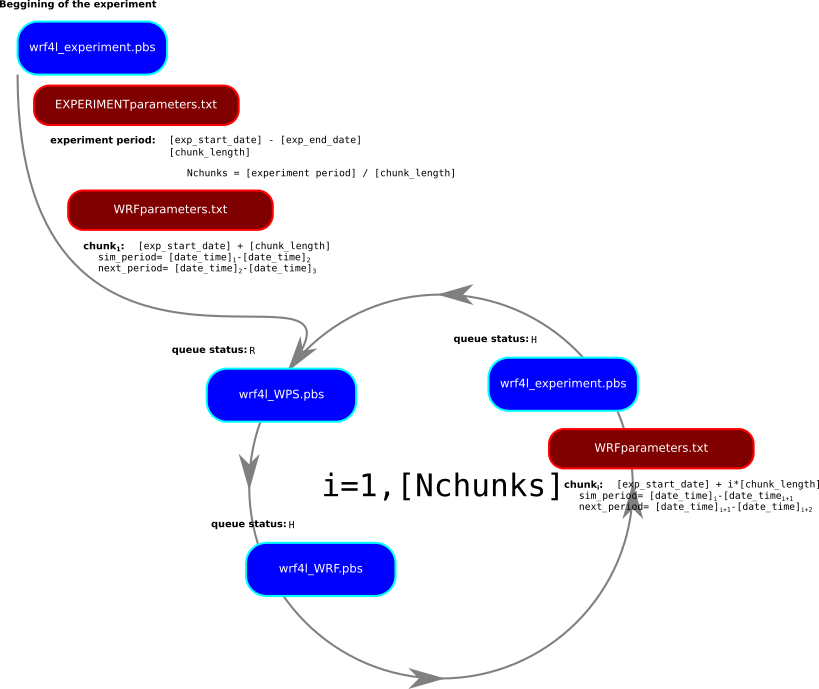

An experiment covering a simulation period is divided into '''chunks''': small pieces of time which are manageable by the model. The work-flow follows these steps using <code>run_experiments.pbs</code>:

# Copy and link all the required files for a given '''chunk''' of the whole simulation period, following the content of <code>EXPERIMENTparameters.txt</code>

[[File:WRF4L_resize.png]]

All the scripts are located in <code>hydra</code> at:

<pre>
/share/tools/workflows/WRF4L/hydra
</pre>

== How to simulate ==

# Assuming that the simulation domains have already been defined and that suitable <CODE>namelist.wps</CODE> and <CODE>namelist.input</CODE> WRF namelist files are in a folder called <CODE>$GEOGRID</CODE>, we gather all this information in the storage folder of the experiment (let's call it <CODE>$STORAGEdir</CODE>) and create there a folder for the domain information:

<PRE style="shell">
mkdir ${STORAGEdir}/domains
cp ${GEOGRID}/geo_em.d* ${STORAGEdir}/domains
cp ${GEOGRID}/namelist.wps ${STORAGEdir}/domains
cp ${GEOGRID}/namelist.input ${STORAGEdir}/domains
</PRE>

# We then move to <CODE>${STORAGEdir}/domains</CODE> and create a template file from <CODE>namelist.wps</CODE>, replacing the dates and the data interval with the placeholders that are changed for each '''chunk''' (the <CODE>diff</CODE> shows the edited template):

<PRE style="shell">
cd ${STORAGEdir}/domains
cp namelist.wps namelist.wps_template
diff namelist.wps namelist.wps_template
4,6c4,6
< start_date = '2010-06-01_00:00:00', '2010-06-01_00:00:00'
< end_date = '2010-06-16_00:00:00', '2010-06-16_00:00:00'
< interval_seconds = 10800,
---
> start_date = 'iyr-imo-ida_iho:imi:ise', 'iyr-imo-ida_iho:imi:ise',
> end_date = 'eyr-emo-eda_eho:emi:ese', 'eyr-emo-eda_eho:emi:ese',
> interval_seconds = infreq
</PRE>
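A minimal sketch of how the placeholders of <code>namelist.wps_template</code> could be filled for one chunk, assuming the placeholder strings exactly as in the <code>diff</code> above and dates given as <code>YYYYMMDDHHMMSS</code> (the format of <code>exp_start_date</code>). The functions <code>to_wrf_date</code> and <code>fill_wps_template</code> are hypothetical helpers, not part of WRF4L. Replacing the whole placeholder strings, rather than each 3-letter code separately, avoids touching other namelist entries by accident.

```shell
#!/bin/sh
# Hypothetical helpers (not part of WRF4L) to fill namelist.wps_template.

# YYYYMMDDHHMMSS -> YYYY-MM-DD_HH:MM:SS (date format used in WRF namelists)
to_wrf_date() {
  echo "$1" | sed -E 's/^([0-9]{4})([0-9]{2})([0-9]{2})([0-9]{2})([0-9]{2})([0-9]{2})$/\1-\2-\3_\4:\5:\6/'
}

# fill_wps_template <template> <output> <start> <end> <interval_seconds>
fill_wps_template() {
  start=$(to_wrf_date "$3")
  end=$(to_wrf_date "$4")
  # Replace the complete placeholders so no other namelist text is touched
  sed -e "s/iyr-imo-ida_iho:imi:ise/$start/g" \
      -e "s/eyr-emo-eda_eho:emi:ese/$end/g" \
      -e "s/infreq/$5/g" \
      "$1" > "$2"
}
```

For instance, <code>fill_wps_template namelist.wps_template namelist.wps 20100601000000 20100616000000 10800</code> writes back the start date, end date and interval of the original <code>namelist.wps</code> shown in the <code>diff</code>.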

# If <CODE>nc2wps</CODE> is going to be used to generate the intermediate files (the <CODE>ungrib.exe</CODE> output, generated directly from netCDF files), a template for <CODE>namelist.nc2wps</CODE> is needed. Several options are available in <CODE>/share/tools/workflows/WRF4L/hydra</CODE>; in this example we use the one prepared for the ERA5 data kept in Papa-Deimos:

<PRE style="shell">
cp /share/tools/workflows/WRF4L/hydra/namelist.nc2wps_template_ERA5 ${STORAGEdir}/domains/namelist.nc2wps_template
</PRE>

# Create a new folder from which to launch the experiment [ExperimentName] (e.g. somewhere in the <CODE>salidas/</CODE> folder)

<pre>
$ mkdir salidas/[ExperimentName]
$ cd salidas/[ExperimentName]
</pre>

# Copy the essential WRF4L files to this folder

<pre>
cp /share/tools/workflows/WRF4L/hydra/EXPERIMENTparameters.txt ./
cp /share/tools/workflows/WRF4L/hydra/launch_experiment.pbs ./
</pre>

# Edit the configuration/set-up of the simulation of the experiment (e.g. simulation period, versions of WPS and WRF to use, additional <CODE>namelist.input</CODE> parameters, the various folders <CODE>storageHOME</CODE>, <CODE>runHOME</CODE>, MPI configuration, ...)

<pre>
$ vim EXPERIMENTparameters.txt
</pre>

# Change the user name in the e-mail address of <CODE>launch_experiment.pbs</CODE> (the <CODE>diff</CODE> compares an edited copy with the template):

<PRE style="shell">
diff launch_experiment.pbs /share/tools/workflows/WRF4L/hydra/launch_experiment.pbs
11c11
< #PBS -M lluis.fita@cima.fcen.uba.ar
---
> #PBS -M [user]@cima.fcen.uba.ar
</PRE>
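Instead of editing the file by hand, the placeholder can be replaced with <code>sed</code>. A minimal sketch, assuming the template line reads exactly <code>#PBS -M [user]@cima.fcen.uba.ar</code> as in the <code>diff</code> above; <code>set_pbs_mail</code> is a hypothetical helper, and the CIMA user name is passed explicitly because it may differ from <code>$USER</code>.

```shell
#!/bin/sh
# Hypothetical helper: put the CIMA user name in the PBS e-mail line.
# set_pbs_mail <launch_experiment.pbs> <user>
set_pbs_mail() {
  # -i.bak edits the file in place and keeps a backup copy (<file>.bak)
  sed -i.bak "s/^#PBS -M \[user\]@/#PBS -M $2@/" "$1"
}
```

Usage: <code>set_pbs_mail launch_experiment.pbs lluis.fita</code>.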

# Launch the experiment

<pre>
$ qsub launch_experiment.pbs
</pre>


While it is running there will be multiple jobs; see, for example, an experiment called 'MPIsimplest' whose simulation is labelled 'urban' (finished `C', running `R', and on hold `H'):

<pre>
$ qstat -u $USER

hydra:
Req'd Req'd Elap
Job ID Username Queue Jobname SessID NDS TSK Memory Time S Time
-------------------- -------- -------- ---------------- ------ ----- --- ------ ----- - -----
72507.hydra experiment_relay lluis.fita 00:00:07 C larga
72508.hydra ...implest-urban lluis.fita 00:00:05 C larga
72509.hydra ...implest-urban lluis.fita 00:00:05 C larga
72510.hydra ...implest-urban lluis.fita 00:00:08 C larga
72511.hydra ...implest-urban lluis.fita 0 R larga
72512.hydra ...implest-urban lluis.fita 0 H larga
72513.hydra ...implest-urban lluis.fita 0 H larga
72514.hydra ...implest-urban lluis.fita 0 H larga
72515.hydra ...implest-urban lluis.fita 0 H larga
72516.hydra ...implest-urban lluis.fita 0 H larga
72518.hydra ...implest-urban lluis.fita 0 H larga
72519.hydra experiment_relay lluis.fita 0 H larga
</pre>

If the simulation crashes, after fixing the issue go to <code>[expHOME]/[ExpName]/[SimName]</code> and re-launch the experiment (after the first run, <code>scratch</code> is switched automatically to `false'):

<pre>
$ qsub launch_experiment.pbs
</pre>

== Checking the experiment ==

Once the experiment is running, one needs to look at the following folders (named after the variables of <code>EXPERIMENTparameters.txt</code>):

* <code>[runHOME]/[ExpName]/[SimName]</code>: contains the copies of the templates <code>namelist.wps</code> and <code>namelist.input</code>, and the files:
** <code>chunk_attemps.inf</code>, which counts how many times a '''chunk''' has been attempted (once it reaches 4 attempts, <code>WRF4L</code> is stopped)
** <code>chunk_nums.inf</code>, which keeps track of the jobs and periods used in each step of a given '''chunk'''
* <code>[runHOME]/[ExpName]/[SimName]/ungrib</code>: folder where the computing nodes actually run ungrib (either <code>ungrib.exe</code> or <code>netcdf2wps</code>)
* <code>[runHOME]/[ExpName]/[SimName]/metgrid</code>: folder where the computing nodes actually run metgrid (<code>metgrid.exe</code>)
* <code>[runHOME]/[ExpName]/[SimName]/run</code>: folder where the computing nodes actually run the model (<code>real.exe</code> and <code>wrf.exe</code>)
* <code>[runHOME]/[ExpName]/[SimName]/run/outreal/</code>: folder with the output of <code>real.exe</code>
* <code>[storageHOME]/[ExpName]/[SimName]/outwrf/</code>: folder with the output of <code>wrf.exe</code>

'''NOTE:''' the ungrib, metgrid and real outputs are not kept in the storage folder.

=== When something went wrong ===

If there has been any problem, check the last chunk to try to understand what happened and where the problem comes from:

* <code>rsl.[error/out].[nnnn]</code>: files with the standard output written while the model runs, one file per process. If the problem is related to the model execution and the model checks for that error, an explanatory message should appear (look first at the largest files):

<pre>
$ ls -lrS rsl.error.*
</pre>

* <code>run_wrf.log</code>: file with the standard output of the model. Search for `segmentation faults' of this form (it might differ):

<pre>forrtl: error (63): output conversion error, unit -5, file Internal Formatted Write
Image PC Routine Line Source
wrf.exe 00000000032B736A Unknown Unknown Unknown
wrf.exe 00000000032B5EE5 Unknown Unknown Unknown
wrf.exe 0000000003265966 Unknown Unknown Unknown
wrf.exe 0000000003226EB5 Unknown Unknown Unknown
wrf.exe 0000000003226671 Unknown Unknown Unknown
wrf.exe 000000000324BC3C Unknown Unknown Unknown
wrf.exe 0000000003249C94 Unknown Unknown Unknown
wrf.exe 00000000004184DC Unknown Unknown Unknown
libc.so.6 000000319021ECDD Unknown Unknown Unknown
wrf.exe 00000000004183D9 Unknown Unknown Unknown
</pre>

* In <code>[expHOME]/[SimName]</code>, check the output of the PBS jobs, which are called:
** <code>experiment_relay.o[nnnn]</code>: output of <code>launch_experiment.pbs</code>
** <code>prepare_wps_[ExpName]-[SimName].o[nnnn]</code>: output of <code>wrf4l_prepare_WPS.pbs</code>
** <code>prepare_wrf_[ExpName]-[SimName].o[nnnn]</code>: output of <code>wrf4l_prepare_WRF.pbs</code>
** <code>prep_ncwps_[ExpName]-[SimName].o[nnnn]</code>: output of <code>wrf4l_prepare_nc2wps.pbs</code>
** <code>prep_ungrib_[ExpName]-[SimName].o[nnnn]</code>: output of <code>wrf4l_prepare_ungrib.pbs</code>
** <code>prep-nxt_ncwps_[ExpName]-[SimName].o[nnnn]</code>: output of <code>wrf4l_prepare-next_nc2wps.pbs</code> (for the following chunk)
** <code>prep-nxt_ungrib_[ExpName]-[SimName].o[nnnn]</code>: output of <code>wrf4l_prepare-next_ungrib.pbs</code> (for the following chunk)
** <code>finish_sim_[ExpName]-[SimName].o[nnnn]</code>: output of <code>wrf4l_finishing_simulation.pbs</code>
* In <code>[runHOME]/[SimName]</code>, check the output of the PBS jobs, which are called:
** <code>ungrib/nc2wps_[ExpName]-[SimName].o[nnnn]</code>: output of <code>wrf4l_launch_nc2wps.pbs</code>
** <code>ungrib/ungrib_[ExpName]-[SimName].o[nnnn]</code>: output of <code>wrf4l_launch_ungrib.pbs</code>
** <code>metgrid/metgrid_[ExpName]-[SimName].o[nnnn]</code>: output of <code>wrf4l_launch_metgrid.pbs</code>
** <code>run/wrf_[ExpName]-[SimName].o[nnnn]</code>: output of <code>wrf4l_launch_real.pbs</code>
** <code>run/wrf_[ExpName]-[SimName].o[nnnn]</code>: output of <code>wrf4l_launch_wrf.pbs</code>
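The checks on <code>rsl.error.*</code> and <code>run_wrf.log</code> described above can be bundled into a small helper to run in the <code>run</code> folder of the failed chunk. This is a minimal sketch, not part of WRF4L: <code>scan_run_logs</code> is hypothetical, and its search patterns (<code>FATAL</code>, <code>error</code>, <code>forrtl</code>, <code>Segmentation fault</code>) are assumptions based on typical WRF messages and on the Intel Fortran run-time error shown above.

```shell
#!/bin/sh
# Hypothetical helper: summarise the logs of a WRF run folder.
# scan_run_logs <run-dir>
scan_run_logs() {
  # ls -S sorts by size, largest first
  largest=$(ls -S "$1"/rsl.error.* 2>/dev/null | head -n 1)
  if [ -z "$largest" ]; then
    echo "no rsl.error.* files in $1"
    return 1
  fi
  echo "largest: $(basename "$largest")"
  # Lines flagging a failure, prefixed with the file they come from
  grep -H -i -E 'fatal|error' "$1"/rsl.error.* | sed "s|^$1/||"
  if [ -f "$1/run_wrf.log" ]; then
    grep -H -E 'forrtl|Segmentation fault' "$1/run_wrf.log" | sed "s|^$1/||"
  fi
  return 0
}
```

Usage: <code>scan_run_logs [runHOME]/[ExpName]/[SimName]/run</code>.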

All the logs and job outputs are kept in <CODE>${storageHOME}/[ExpName]/[SimName]/joblogs</CODE>, in one compressed file per chunk and attempt, named <CODE>${chunk_period}-${Nattempts}_joblogs.tar</CODE>.
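To inspect the logs of the most recent attempt of a chunk, one can pick the archive with the highest attempt number and list or extract it with <code>tar</code>. A minimal sketch, assuming the naming <code>${chunk_period}-${Nattempts}_joblogs.tar</code> given above; the helper <code>latest_joblogs</code> is hypothetical, and so is the period string used in the test, since the exact format of <code>chunk_period</code> is not documented here.

```shell
#!/bin/sh
# Hypothetical helper: print the joblogs archive of the last attempt of a chunk.
# latest_joblogs <joblogs-dir> <chunk_period>
latest_joblogs() {
  best=""
  best_n=-1
  for f in "$1/$2"-*_joblogs.tar; do
    [ -e "$f" ] || continue
    # Attempt number: the digits between the last '-' and '_joblogs.tar'
    n=$(basename "$f" | sed -E 's/.*-([0-9]+)_joblogs\.tar$/\1/')
    case $n in ''|*[!0-9]*) continue ;; esac
    if [ "$n" -gt "$best_n" ]; then
      best_n=$n
      best=$f
    fi
  done
  [ -n "$best" ] && echo "$best"
}
```

The result can be passed to <code>tar -tf</code> to list the logs, or to <code>tar -xf [archive] -C [folder]</code> to extract them.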

== EXPERIMENTparameters.txt ==

This ASCII file configures the whole simulation. It assumes that:

* the required files, forcings, storage and compiled version of the code might be on different machines.
* there is a folder with a template version of <code>namelist.input</code>, which will be used and changed according to the requirements of the experiment.
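Since the file is a plain list of <code>name = value</code> lines with <code>#</code> comments, a value can be read from the shell, for example to check a configuration before launching it. A minimal sketch with a hypothetical <code>get_param</code> helper (WRF4L's own parser is not shown on this page): it accepts spaces around <code>=</code> and drops a trailing comment only when <code>#</code> is preceded by a blank, so that values such as <code>rstFILES</code>, which contain <code>#</code>, are kept intact.

```shell
#!/bin/sh
# Hypothetical helper: print the value of a parameter of EXPERIMENTparameters.txt
# get_param <file> <name>
get_param() {
  awk -v key="$2" '
    /^[ \t]*#/ { next }                     # skip comment lines
    {
      line = $0
      sub(/[ \t]+#.*$/, "", line)           # drop a trailing blank-preceded comment
      eq = index(line, "=")
      if (eq == 0) next
      name = substr(line, 1, eq - 1)
      val = substr(line, eq + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", name)     # trim blanks around the name
      gsub(/^[ \t]+|[ \t]+$/, "", val)      # and around the value
      if (name == key) { print val; exit }
    }' "$1"
}
```

For example, with the values shown below, <code>get_param EXPERIMENTparameters.txt chunk_length</code> prints <code>1@month</code>.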

Location of the WRF4L main folder (example for <code>hydra</code>):

<pre>
# Home of the WRF4L
wrf4lHOME=/share/tools/workflows/WRF4L
</pre>

Name of the machine where the experiment runs ('''NOTE:''' a folder with the machine-specific work-flow must exist as <code>$wrf4lHOME/${HPC}</code>):

<pre>
# Machine specific work-flow files for $HPC (a folder with specific work-flow must exist as $wrf4lHOME/${HPC})
HPC=hydra
</pre>

Name of the compiler used to compile the model ('''NOTE:''' a file called <code>$wrf4lHOME/arch/${HPC}_${compiler}.env</code> must exist):

<pre>
# Compilation (a file called $wrf4lHOME/arch/${HPC}_${compiler}.env must exist)
compiler=intel
</pre>

Name of the experiment:

<pre>
# Experiment name
ExpName = WRFsensSFC
</pre>

...

<pre>
# Simulation name
SimName = control
</pre>

...

<pre>
# Experiment starting date
exp_start_date = 19790101000000
# Experiment ending date
exp_end_date = 20150101000000
</pre>

Length of the chunks (do not make chunks longer than 1 month!):

<pre>
# Chunk Length [N]@[unit]
# [unit]=[year, month, week, day, hour, minute, second]
chunk_length = 1@month
</pre>
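To see how a given <code>chunk_length</code> splits the experiment period, the chunk limits can be listed with GNU <code>date</code>. A minimal sketch, not the WRF4L code: <code>list_chunks</code> is hypothetical, dates use the <code>YYYYMMDDHHMMSS</code> format of <code>exp_start_date</code>/<code>exp_end_date</code>, times are taken as UTC, and the last chunk is cut at the end date.

```shell
#!/bin/sh
# Hypothetical helper (requires GNU date): print the chunks of a period.
# list_chunks <start YYYYMMDDHHMMSS> <end YYYYMMDDHHMMSS> <N@unit>
list_chunks() {
  n=${3%@*}        # number of units, e.g. 1
  unit=${3#*@}     # year, month, week, day, hour, minute or second
  cur=$1
  while [ "$cur" -lt "$2" ]; do
    iso=$(echo "$cur" | sed -E 's/^(.{4})(.{2})(.{2})(.{2})(.{2})(.{2})$/\1-\2-\3 \4:\5:\6/')
    # Unsigned "N unit" is a relative offset; a signed "+N" right after the
    # time may be read by GNU date as a time-zone offset instead.
    nxt=$(date -u -d "$iso $n $unit" +%Y%m%d%H%M%S) || return 1
    [ "$nxt" -gt "$2" ] && nxt=$2
    echo "$cur-$nxt"
    cur=$nxt
  done
}
```

With the values above (<code>19790101000000</code> to <code>20150101000000</code>, <code>1@month</code>) it prints 432 monthly chunks, the first one being <code>19790101000000-19790201000000</code>.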

...

* '''NOTE:''' this will only work if the <code>.ssh</code> public/private keys have been set up for each involved USER/HOST.
* '''NOTE 2:''' all the forcings, compiled code, ... are already on <code>hydra</code>, in the common space called <code>share</code>
* '''NOTE 3:''' from the computing nodes one cannot access the <code>/share</code> folder nor any of CIMA's storage machines (skogul, freyja, ...). For that reason, one needs to use this system of <code>[USER]@[HOST]</code> accounts. The <code>*.pbs</code> scripts use a series of wrappers of the standard functions <code>cp, ln, ls, mv, ...</code> which operate `from' and `to' different pairs of <code>[USER]@[HOST]</code>. '''NOTE:''' this will only work if the public/private ssh key pairs have been set up (see more details at [[llaves_ssh]])

<pre>
# Hosts
...
</pre>

Templates of the WRF configuration files <code>namelist.wps</code> and <code>namelist.input</code>. '''NOTE:''' they will be changed according to the content of <code>EXPERIMENTparameters.txt</code>, such as the period of the '''chunk''', the atmospheric forcing, differences in the set-up, ... (located at <code>[codeHOST]</code>):

<pre>
# Folder with the `namelist.wps', `namelist.input' and `geo_em.d[nn].nc' of the experiment
domainHOME = /home/lluis.fita/salidas/EXPS/estudios/dominmios/SA50k
</pre>

Folder from which the work-flow will be launched (inside it there will be one more folder, [SimName]):

<pre>
# Experiment folder
expHOME=/home/lluis.fita/salidas/estudios/MPIsimplest/exp
</pre>

Folder where the WRF model will run on the computing nodes (inside it there will be two levels of folders, [ExpName]/[SimName]). WRF will run in the folder [SimName]/run:

<pre>
# Running folder
runHOME = /home/lluis.fita/salidas/RUNNING
</pre>

Folder with the compiled version of WPS (located at <code>[codeHOST]</code>):

<pre>
# Folder with the compiled source of WPS
wpsHOME = /opt/wrf/WRF-4.5/intel/2021.4.0/dmpar/WPS
</pre>

Folder with the compiled version of WRF (located at <code>[codeHOST]</code>):

<pre>
# Folder with the compiled source of WRF
wrfHOME = /share/WRF/WRFV3.9.1/ifort/dmpar/WRFV3
</pre>

Folder to store all the output of the model (history files, restarts and a compressed file with the configuration and the standard output of the given run). The content of the folder will be organized by chunks (located at <code>[storageHOST]</code>), inside two folders called [ExpName]/[SimName]:

<pre>
# Storage folder of the output
storageHOME = /home/lluis.fita/salidas/EXPS
</pre>

...

<pre>
# Modules to load ('None' for any)
modulesLOAD = None
</pre>

...

<pre>
# Model reference output names (to be used as checking file names)
nameLISTfile = namelist.input # namelist
nameRSTfile = wrfrst_d01_ # restart file
nameOUTfile = wrfout_d01_ # output file
</pre>

Extensions of the files with the configuration of WRF (to be retrieved from <code>codeHOST</code> and <code>domainHOME</code>):

<pre>
# Extensions of the files with the configuration of the model
configEXTS = wps:input
</pre>

...

<pre>
# ...
# `%j%': julian day in 3 digits
# [tmpllinkname]: template name of the link of the restart file (if necessary with [NNNNN] variables to be substituted)
rstFILES=wrfrst_d01_[YYYY]-[MM]-[DD]_[HH]:[MI]:[SS]|YYYY?%Y#MM?%m#DD?%d#HH?%H#MI?%M#SS?%S@wrfrst_d01_[YYYY]-[MM]-[DD]_[HH]:[MI]:[SS]|YYYY?%Y#MM?%m#DD?%d#HH?%H#MI?%M#SS?%S
</pre>
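Judging from the example value, each side of <code>@</code> in <code>rstFILES</code> is a file-name template followed by <code>|</code> and a <code>#</code>-separated list of <code>[VAR]?[format]</code> rules. A minimal sketch of how one side could be expanded for a given date, assuming that reading and assuming the formats are those of <code>date</code> (<code>%Y</code>, <code>%m</code>, ...); <code>expand_tmpl</code> is hypothetical and requires GNU <code>date</code>.

```shell
#!/bin/sh
# Hypothetical helper (requires GNU date): expand one side of rstFILES.
# expand_tmpl '<template>|VAR?fmt#VAR?fmt...' <YYYYMMDDHHMMSS>
expand_tmpl() {
  name=${1%%|*}                 # file-name template, e.g. wrfrst_d01_[YYYY]-...
  rules=${1#*|}                 # substitution rules, e.g. YYYY?%Y#MM?%m
  iso=$(echo "$2" | sed -E 's/^(.{4})(.{2})(.{2})(.{2})(.{2})(.{2})$/\1-\2-\3 \4:\5:\6/')
  set -f                        # the rules contain '?', which must not be globbed
  old_ifs=$IFS
  IFS='#'
  for rule in $rules; do
    var=${rule%%[?]*}           # text before '?': variable name
    fmt=${rule#*[?]}            # text after '?': date format
    val=$(date -u -d "$iso" +"$fmt")
    name=$(echo "$name" | sed "s/\[$var\]/$val/g")
  done
  IFS=$old_ifs
  set +f
  echo "$name"
}
```

Applied to <code>"${rstFILES%%@*}"</code> (restart file) or <code>"${rstFILES#*@}"</code> (link name) with the value above and the date <code>19790201000000</code>, both give <code>wrfrst_d01_1979-02-01_00:00:00</code>.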

Folder with the input data (located at <code>[forcingHOST]</code>):

<pre>
# Folder with the input morphological forcing data
indataHOME = /share/DATA/re-analysis/ERA-Interim
</pre>

Format of the input data and names of the files:

<pre>
# Data format (grib, nc)
indataFMT= grib
# For `grib' format
# Head and tail of indata files names.
# Assuming ${indataFheader}*[YYYY][MM]*${indataFtail}.[grib/nc]
indataFheader=ERAI_
indataFtail=
</pre>
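The file-name convention in the comment above can be turned into a quick check that the input files of a given month are in place before launching. A minimal sketch; <code>list_indata</code> is a hypothetical helper, and the file names used in its test are made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: list the input files of one month following
# ${indataFheader}*[YYYY][MM]*${indataFtail}.[grib/nc]
# list_indata <indataHOME> <indataFheader> <indataFtail> <YYYY> <MM> <grib|nc>
list_indata() {
  found=1
  for f in "$1/$2"*"$4$5"*"$3.$6"; do
    [ -e "$f" ] || continue     # no match: the pattern is left unexpanded
    basename "$f"
    found=0
  done
  return $found
}
```

Usage: <code>list_indata /share/DATA/re-analysis/ERA-Interim ERAI_ "" 1979 01 grib</code>; it returns a non-zero status when no file is found.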

In case of netCDF input data, there is a bash script which transforms the data to GRIB, to be used later by <code>ungrib</code>.

Variable table to be used by <code>ungrib</code>:

<pre>
# Type of Vtable for ungrib as Vtable.[VtableType]
VtableType=ERA-interim.pl
</pre>
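<code>ungrib.exe</code> reads the variable table from a file called <code>Vtable</code> in its working folder, and a standard WPS installation keeps the tables in <code>ungrib/Variable_Tables</code>. A minimal sketch of what <code>VtableType</code> selects, assuming that layout under <code>wpsHOME</code>; <code>link_vtable</code> is a hypothetical helper, not a WRF4L function.

```shell
#!/bin/sh
# Hypothetical helper: link Vtable.[VtableType] as ./Vtable for ungrib.exe
# link_vtable <wpsHOME> <VtableType> <ungrib-dir>
link_vtable() {
  vt="$1/ungrib/Variable_Tables/Vtable.$2"
  if [ ! -f "$vt" ]; then
    echo "missing $vt" >&2
    return 1
  fi
  ln -sf "$vt" "$3/Vtable"
}
```

Usage: <code>link_vtable ${wpsHOME} ERA-interim.pl [runHOME]/[ExpName]/[SimName]/ungrib</code>.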

Folder with the atmospheric forcing data (located at <code>[forcingHOST]</code>):

<pre>
# For `nc' format
# Folder which contains the atmospheric data to generate the initial state
iniatmosHOME = ./
# Type of atmospheric data to generate the initial state
# `ECMWFstd': ECMWF 'standard' way ERAI_[pl/sfc][YYYY][MM]_[var1]-[var2].grib
# `ERAI-IPSL': ECMWF ERA-INTERIM stored in the common IPSL way (.../4xdaily/[AN\_PL/AN\_SF])
iniatmosTYPE = 'ECMWFstd'
</pre>

Here one can change values of the template <code>namelist.input</code>: each listed parameter is set to the provided value, with entries given as <code>[parameter];[value]</code> and separated by <code>,</code>. If a given parameter is not in the template <code>namelist.input</code>, it will be added automatically.

<pre>
## Namelist changes
nlparameters = ra_sw_physics;4,ra_lw_physics;4,time_step;180
</pre>
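A minimal sketch of how such a list can be applied to a copy of the template, assuming the <code>[parameter];[value]</code> syntax of the example. <code>apply_nlparameters</code> is hypothetical: it only rewrites parameters already present, writing a single value (so it does not repeat the value for each domain, which a multi-domain set-up may need), and it just reports missing parameters, since where WRF4L adds them is not described here.

```shell
#!/bin/sh
# Hypothetical helper: apply 'param;value,param;value,...' to a namelist.
# apply_nlparameters <namelist.input> <nlparameters>
apply_nlparameters() {
  set -f
  old_ifs=$IFS
  IFS=','
  for pair in $2; do
    key=${pair%%;*}
    val=${pair#*;}
    if grep -q "^[[:space:]]*$key[[:space:]]*=" "$1"; then
      # Keep the indentation and the name, replace everything after '='
      sed -i.bak "s/^\([[:space:]]*$key[[:space:]]*=\).*/\1 $val,/" "$1"
    else
      # WRF4L adds missing parameters; this sketch only reports them
      echo "not in template: $key" >&2
    fi
  done
  IFS=$old_ifs
  set +f
}
```

Usage: <code>apply_nlparameters namelist.input 'ra_sw_physics;4,ra_lw_physics;4,time_step;180'</code> turns a line such as <code> time_step = 90,</code> into <code> time_step = 180,</code>.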

Name of WRF's executable (to be found in the <code>[orHOME]</code> folder on <code>[codeHOST]</code>):

<pre>
# Name of the executable
nameEXEC=wrf.exe
</pre>

':'-separated list of netCDF file names from WRF's output which do not need to be kept:

<pre>
# netCDF Files which will not be kept anywhere
...
</pre>

':'-separated list of headers of netCDF file names from WRF's output which need to be kept:

<pre>
# Headers of netCDF files need to be kept
HkeptfileNAMES=wrfout_d:wrfxtrm_d:wrfpress_d:wrfcdx_d:wrfhfcdx_d
</pre>

':'-separated list of headers of restart netCDF file names from WRF's output which need to be kept:

<pre>
# Headers of netCDF restart files need to be kept
HrstfileNAMES=wrfrst_d
</pre>
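The headers in <code>HkeptfileNAMES</code> and <code>HrstfileNAMES</code> appear to be file-name prefixes. A minimal sketch, assuming plain prefix matching, of how the files to keep could be selected in an output folder; <code>kept_files</code> is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: list the files of a folder whose names start with
# one of the ':'-separated headers (e.g. HkeptfileNAMES or HrstfileNAMES).
# kept_files <dir> <header1:header2:...>
kept_files() {
  old_ifs=$IFS
  IFS=':'
  for head in $2; do
    # Pathname expansion results are not split on IFS, so ':' in names is safe
    for f in "$1/$head"*; do
      [ -e "$f" ] && basename "$f"
    done
  done
  IFS=$old_ifs
}
```

Usage: <code>kept_files [runHOME]/[ExpName]/[SimName]/run wrfout_d:wrfxtrm_d:wrfpress_d:wrfcdx_d:wrfhfcdx_d</code>.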

Parallel configuration of the run:

<pre>
# WRF parallel run configuration
## Number of nodes
Nnodes = 1
...
## Memory size of shared memory threads
SIZEopenthreads = 200M
## Memory for PBS jobs
MEMjobs = 30gb
</pre>

Current revision as of 12:26, 30 October 2025

There is a far more powerful tool to manage work-flow of WRF4G.

Lluís expanded this work to other HPCs and models. See more details in this GIT repository WRFL

Lluís developed a less powerful one which is here described

WRF work-flow management is done via multiple scripts (these are the specifics for hydra [CIMA cluster]):

EXPERIMENTparameters.txt: General ASCII file which configures the experiment and chain of simulations (chunks). This is the unique file to modifylaunch_experiment.pbs: PBS-queue job which prepares the experiment of the environmentwrf4l_prepare_WPS.pbs: PBS-queue job which prepares the WPS section of the model:ungrib.exe,metgrid.exe,real.exewrf4l_prepare_WRF.pbs: PBS-queue job which prepares the WRF section of the model:wrf.exewrf4l_prepare_nc2wps.pbs: PBS-queue job which prepare the execution of nc2wps for the chunkwrf4l_prepare_ungrib.pbs: PBS-queue job which prepare the execution of ungrib for the chunkwrf4l_launch_nc2wps.pbs: PBS-queue job which launches nc2wps:netcdf2wpswrf4l_launch_ungrib.pbs: PBS-queue job which launches ungrib:ungrib.exewrf4l_launch_metgrid.pbs: PBS-queue job which launches metgrid:metgrid.exewrf4l_launch_real.pbs: PBS-queue job which launches real:real.exewrf4l_launch_wrf.pbs: PBS-queue job which launches wrf:wrf.exewrf4l_prepare-next_nc2wps.pbs: PBS-queue job which prepare the execution of nc2wps for the next chunkwrf4l_prepare-next_ungrib.pbs: PBS-queue job which prepare the execution of ungrib for the next chunkwrf4l_finishin_simulation.pbs: PBS-queue job which ends the simulation of the chunk

These PBS jobs are executed consecutively and they are put on hold until the precedent step is finished.

There is a folder called /share/tools/workflows/components with shell and python scripts necessary for the work-flow management

An experiment which contains a period of simulation is divided by chunks small pieces of times which are manageable by the model. The work-flow follows these steps using run_experiments.pbs:

- Copy and link all the required files for a given chunk of the whole period of simulation following the content of

EXPERIMENTparameters.txt

All the scripts are located in hydra at:

/share/tools/workflows/WRF4L/hydra

How to simulate

- Assuming that we have already defined the domains of simulation and that we have a suited

namelist.wpsandnamelist.inputWRF namelist files in a folder called$GEOGRID. We will gather all this information in the storage folder for the experiment (let's assume called $STORAGEdir) and we create there a folder for the information of the domains.

mkdir ${STORAGEdir}/domains

cp ${GEOGRID}/geo_em.d* ${STORAGEdir}/domains

cp ${GEOGRID}/namelist.wps ${STORAGEdir}/domains

cp ${GEOGRID}/namelist.input ${STORAGEdir}/domains

- We then move to the

${STORAGEdir}/domainsand create a template file for thenamelist.wps

cd ${STORAGEdir}/domains

cp namelist.wps namelist.wps_template

diff namelist.wps namelist.wps_template

4,6c4,6

< start_date = '2010-06-01_00:00:00', '2010-06-01_00:00:00'

< end_date = '2010-06-16_00:00:00', '2010-06-16_00:00:00'

< interval_seconds = 10800,

---

> start_date = 'iyr-imo-ida_iho:imi:ise', 'iyr-imo-ida_iho:imi:ise',

> end_date = 'eyr-emo-eda_eho:emi:ese', 'eyr-emo-eda_eho:emi:ese',

> interval_seconds = infreq

- In case we are going to use

nc2wpsto generate the intermediate files (ungrib.exeoutput directly generated from netCDF files), we need to generate a template for thenamelist.nc2wps. There are different options available in/share/tools/workflows/WRF4L/hydra. In this example we use the one prepared for ERA5 data as it is kept in Papa-Deimos:

cp /share/tools/workflows/WRF4L/hydra/namelist.nc2wps_template_ERA5 ${STORAGEdir}/domains/namelist.nc2wps_template

- Creation of a new folder from where launch the experiment [ExperimentName] (g.e. somewhere at

salidas/folder)

$ mkdir salidas/[ExperimentName] cd salidas/[ExperimentName]

- copy essential WRF4L files to this folder

cp /share/tools/workflows/WRF4L/hydra/EXPERIMENTparameters.txt ./ cp /share/tools/workflows/WRF4L/hydra/launch_experiment.pbs ./

- Edit the configuration/set-up of the simulation of the experiment (e.g. period of simulation, version of WPS and WRF to use, additional

namelist.inputparameters, multiple folders:storageHOME,runHOME, MPI configuration, ...)

$ vim EXPERIMENTparameters.txt

- Change the name of the user in the

launch_experiment.pbs

diff launch_experiment.pbs /share/tools/workflows/WRF4L/hydra/launch_experiment.pbs 11c11 < #PBS -M lluis.fita@cima.fcen.uba.ar --- > #PBS -M [user]@cima.fcen.uba.ar

- Launch the experiment

$ qsub launch_experiment.pbs

When it is running one would have multiple jobs, see for example for an experiment called 'MPIsimplest' and it simulation labelled 'urban' (finished `C', runnig `R', and hold `H'):

$ qstat -u $USER

Job ID        Name              User        Time Use  S  Queue
------------  ----------------  ----------  --------  -  -----
72507.hydra   experiment_relay  lluis.fita  00:00:07  C  larga
72508.hydra   ...implest-urban  lluis.fita  00:00:05  C  larga
72509.hydra   ...implest-urban  lluis.fita  00:00:05  C  larga
72510.hydra   ...implest-urban  lluis.fita  00:00:08  C  larga
72511.hydra   ...implest-urban  lluis.fita  0         R  larga
72512.hydra   ...implest-urban  lluis.fita  0         H  larga
72513.hydra   ...implest-urban  lluis.fita  0         H  larga
72514.hydra   ...implest-urban  lluis.fita  0         H  larga
72515.hydra   ...implest-urban  lluis.fita  0         H  larga
72516.hydra   ...implest-urban  lluis.fita  0         H  larga
72518.hydra   ...implest-urban  lluis.fita  0         H  larga
72519.hydra   experiment_relay  lluis.fita  0         H  larga
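With many chained jobs it can help to count them by state. A minimal sketch with awk, assuming the job state is the next-to-last column of each job line, as in the table above (this should also hold for the wider Torque `qstat -u' layout, whose last column is the elapsed time); summarize_qstat is a hypothetical helper, not part of WRF4L:

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of WRF4L): count the jobs in each state from
# qstat output read on stdin. Only lines starting with a job ID such as
# '72507.hydra' are counted; the state is taken from the next-to-last field.
summarize_qstat() {
  awk '$1 ~ /^[0-9]+\./ { n[$(NF-1)]++ }
       END { for (s in n) printf "%s %d\n", s, n[s] }' | sort
}

# Usage: qstat -u "$USER" | summarize_qstat
```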

If the simulation crashes, after fixing the issue, go to [expHOME]/[ExpName]/[SimName] and re-launch the experiment (after the first run, scratch is automatically switched to `false')

$ qsub launch_experiment.pbs

Checking the experiment

Once the experiment is running, one needs to look at the following folders (named after the variables of EXPERIMENTparameters.txt):

- [runHOME]/[ExpName]/[SimName]: contains the copies of the templates namelist.wps and namelist.input, and the files:
  - chunk_attemps.inf: counts how many times a chunk has been attempted (if it reaches 4 attempts, WRF4L is stopped)
  - chunk_nums.inf: keeps track of the jobs and periods used for each step of a given chunk
- [runHOME]/[ExpName]/[SimName]/ungrib: folder where the computing nodes actually run ungrib (either ungrib.exe or netcdf2wps)
- [runHOME]/[ExpName]/[SimName]/metgrid: folder where the computing nodes actually run metgrid (metgrid.exe)
- [runHOME]/[ExpName]/[SimName]/run: folder where the computing nodes actually run the model (real.exe and wrf.exe)
- [runHOME]/[ExpName]/[SimName]/run/outreal/: folder with the output of real.exe
- [storageHOME]/[ExpName]/[SimName]/outwrf/: folder with the output of wrf.exe

NOTE: ungrib, metgrid and real outputs are not kept in the storage folder

When something went wrong

If there has been any problem, check the last chunk to understand what happened and where the problem comes from:

rsl.[error/out].[nnnn]: These files contain the standard output/error of the model run, one file per process. If the problem is related to the model execution and the model anticipates that error, an explanatory message should appear. Look first at the largest files:

$ ls -lrS rsl.error.*

run_wrf.log: This file contains the standard output of the model. Search for `segmentation faults' or other run-time errors, which look like this (the exact form may differ):

forrtl: error (63): output conversion error, unit -5, file Internal Formatted Write
Image              PC                Routine            Line        Source
wrf.exe            00000000032B736A  Unknown               Unknown  Unknown
wrf.exe            00000000032B5EE5  Unknown               Unknown  Unknown
wrf.exe            0000000003265966  Unknown               Unknown  Unknown
wrf.exe            0000000003226EB5  Unknown               Unknown  Unknown
wrf.exe            0000000003226671  Unknown               Unknown  Unknown
wrf.exe            000000000324BC3C  Unknown               Unknown  Unknown
wrf.exe            0000000003249C94  Unknown               Unknown  Unknown
wrf.exe            00000000004184DC  Unknown               Unknown  Unknown
libc.so.6          000000319021ECDD  Unknown               Unknown  Unknown
wrf.exe            00000000004183D9  Unknown               Unknown  Unknown
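The two checks above can be combined in a small script run from the chunk's run folder. A minimal sketch, assuming the markers worth looking for are `FATAL CALLED' (WRF's error banner), `forrtl:' (Intel Fortran run-time errors) and `segmentation fault'; scan_wrf_errors is hypothetical and its list of markers is not exhaustive:

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of WRF4L): show the largest rsl.error.* files of
# a run folder, then the files (rsl.error.* or run_wrf.log) containing typical
# error markers.
scan_wrf_errors() {
  local dir=${1:-.}
  echo "== largest rsl.error files =="
  ls -S "$dir"/rsl.error.* 2>/dev/null | head -n 5
  echo "== files with error markers =="
  grep -l -E 'FATAL CALLED|forrtl:|[Ss]egmentation fault' \
    "$dir"/rsl.error.* "$dir"/run_wrf.log 2>/dev/null
}

# Usage: scan_wrf_errors [runHOME]/[ExpName]/[SimName]/run
```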

- In [expHOME]/[SimName], check the output of the PBS jobs, which are called:
  - experiment_relay.o[nnnn]: output of launch_experiment.pbs
  - prepare_wps_[ExpName]-[SimName].o[nnnn]: output of wrf4l_prepare_WPS.pbs
  - prepare_wrf_[ExpName]-[SimName].o[nnnn]: output of wrf4l_prepare_WRF.pbs
  - prep_ncwps_[ExpName]-[SimName].o[nnnn]: output of wrf4l_prepare_nc2wps.pbs
  - prep_ungrib_[ExpName]-[SimName].o[nnnn]: output of wrf4l_prepare_ungrib.pbs
  - prep-nxt_ncwps_[ExpName]-[SimName].o[nnnn]: output of wrf4l_prepare_nc2wps.pbs for the following chunk
  - prep-nxt_ungrib_[ExpName]-[SimName].o[nnnn]: output of wrf4l_prepare_ungrib.pbs for the following chunk
  - finish_sim_[ExpName]-[SimName].o[nnnn]: output of wrf4l_finishing_simulation.pbs

- In [runHOME]/[SimName], check the output of the PBS jobs, which are called:
  - ungrib/nc2wps_[ExpName]-[SimName].o[nnnn]: output of wrf4l_launch_nc2wps.pbs
  - ungrib/ungrib_[ExpName]-[SimName].o[nnnn]: output of wrf4l_launch_ungrib.pbs
  - metgrid/metgrid_[ExpName]-[SimName].o[nnnn]: output of wrf4l_launch_metgrid.pbs
  - run/wrf_[ExpName]-[SimName].o[nnnn]: output of wrf4l_launch_real.pbs
  - run/wrf_[ExpName]-[SimName].o[nnnn]: output of wrf4l_launch_wrf.pbs

All the logs and job outputs are kept in ${storageHOME}/[ExpName]/[SimName]/joblogs, as one tar file for each chunk and attempt, named ${chunk_period}-${Nattempts}_joblogs.tar
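To look at the logs of a chunk, one can pick the tar file of its last attempt and list its content with tar -tf. A minimal sketch, assuming that Nattempts is an integer written after the last `-' of the name; last_attempt_joblogs and the chunk_period value in the usage line are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of WRF4L): print the joblogs tar file of the
# last attempt of a chunk, assuming names ${chunk_period}-${Nattempts}_joblogs.tar
last_attempt_joblogs() {
  local dir=$1 chunk=$2
  local f n best="" bestn=-1
  for f in "$dir/$chunk"-*_joblogs.tar; do
    [ -e "$f" ] || continue            # no match: the glob stays literal
    n=${f%_joblogs.tar}
    n=${n##*-}                          # attempt number after the last '-'
    if [ "$n" -gt "$bestn" ]; then
      bestn=$n
      best=$f
    fi
  done
  [ -n "$best" ] && printf '%s\n' "$best"
}

# Usage (the chunk_period format here is an assumption):
#   tar -tf "$(last_attempt_joblogs "$storageHOME/[ExpName]/[SimName]/joblogs" 19790101000000-19790201000000)"
```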

EXPERIMENTparameters.txt

This ASCII file configures the whole simulation. It assumes that:

- Required files, forcings, storage and the compiled version of the code may be on different machines.

- There is a folder with a template version of namelist.input, which will be used and modified according to the requirements of the experiment

Location of the WRF4L main folder (example for hydra)

# Home of the WRF4L
wrf4lHOME=/share/tools/workflows/WRF4L

Name of the machine where the experiment is running (NOTE: a folder with specific work-flow must exist as $wrf4lHOME/${HPC})

# Machine specific work-flow files for $HPC (a folder with specific work-flow must exist as $wrf4lHOME/${HPC})

HPC=hydra

Name of the compiler used to compile the model (NOTE: a file called $wrf4lHOME/arch/${HPC}_${compiler}.env must exist)

# Compilation (a file called $wrf4lHOME/arch/${HPC}_${compiler}.env must exist)

compiler=intel

Name of the experiment

# Experiment name
ExpName = WRFsensSFC

Name of the simulation. A given experiment may have the model configured with different set-ups, each one identified by a different simulation name

# Simulation name
SimName = control

Whether this simulation should be run from the beginning. If set to `true', all the pre-existing content of the folder [ExpName]/[SimName] in the running and storage spaces will be removed. Be careful. If set to `false', the simulation will continue from the last successfully run chunk (checking the restart files).

# Start from the beginning (keeping folder structure)
scratch = false

Period of the simulation (in this example from 1979 Jan 1st to 2015 Jan 1st)

# Experiment starting date
exp_start_date = 19790101000000
# Experiment ending date
exp_end_date = 20150101000000

Length of the chunks (do not make chunks larger than 1-month!!)

# Chunk Length [N]@[unit]
#   [unit]=[year, month, week, day, hour, minute, second]
chunk_length = 1@month
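The chunks implied by exp_start_date, exp_end_date and chunk_length can be previewed before launching. A minimal sketch, assuming GNU date, chunk boundaries at 00:00:00 and units of year, month, week or day only (hour, minute and second are not handled); list_chunks is hypothetical and is not how WRF4L itself computes the chunks:

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of WRF4L): print the start and end dates of each
# chunk as YYYYMMDDHHMMSS. Requires GNU date, which accepts "YYYYMMDD +N unit".
# Only whole days are handled: the HHMMSS part of the dates is ignored.
list_chunks() {
  local start=${1:0:8} end=${2:0:8} length=$3
  local n=${length%@*} unit=${length#*@}
  local cur=$start next
  while [ "$cur" -lt "$end" ]; do
    next=$(date -u -d "$cur +$n $unit" +%Y%m%d)
    # Clip the last chunk to the experiment ending date.
    if [ "$next" -gt "$end" ]; then next=$end; fi
    printf '%s000000 %s000000\n' "$cur" "$next"
    cur=$next
  done
}

# Usage: list_chunks 19790101000000 20150101000000 1@month | head -n 3
```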

Machines, and the user on each machine, where the different required files are located and where the output should be placed.

- NOTE: this will only work if the .ssh public/private keys have been set up for each involved USER/HOST.
- NOTE 2: All the forcings, compiled code, ... are already on hydra, in the common space called share.
- NOTE 3: From the computing nodes, one cannot access the /share folder or any of CIMA's storage machines (skogul, freyja, ...). For that reason, these [USER]@[HOST] accounts are needed: the *.pbs scripts use a series of wrappers of the standard commands cp, ln, ls, mv, ... which manage them `from' and `to' different pairs of [USER]@[HOST]. NOTE: This will only work if the public/private ssh key pairs have been set up (see more details at llaves_ssh)

# Hosts
# list of different hosts and specific user
#   [USER]@[HOST]
# NOTE: this will only work if public keys have been set-up
##
# Host with compiled code, namelist templates
codeHOST=lluis.fita@hydra
# forcing Host with forcings (atmospherics and morphologicals)
forcingHOST=lluis.fita@hydra
# output Host with storage of output (including restarts)
outHOST=lluis.fita@hydra
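The idea behind the [USER]@[HOST] wrappers can be sketched with cp and scp. This is not the WRF4L wrapper itself, which is not shown here: fetch_from is a hypothetical helper which uses a plain cp when HOST is the current machine and scp otherwise, assuming the ssh keys mentioned above are in place:

```bash
#!/usr/bin/env bash
# Hypothetical helper (not the actual WRF4L wrapper): copy a file that lives on
# [USER]@[HOST] to a local destination. If HOST is the machine we are on, a
# plain cp is used; otherwise scp, which needs the ssh keys described above.
fetch_from() {
  local userhost=$1 src=$2 dst=$3
  local host=${userhost#*@}
  local here
  here=$(uname -n)
  if [ "$host" = "$here" ] || [ "$host" = "${here%%.*}" ]; then
    cp "$src" "$dst"
  else
    scp -q "${userhost}:${src}" "$dst"
  fi
}

# Usage: fetch_from "$codeHOST" /share/tools/workflows/WRF4L/hydra/EXPERIMENTparameters.txt ./
```

On a computing node, uname -n returns the node's name rather than hydra, so this sketch takes the scp branch; this is why the keys are needed even when all the hosts are hydra.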

Templates of the WRF configuration: the namelist.wps and namelist.input files (located on [codeHOST]). NOTE: they will be modified according to the content of EXPERIMENTparameters.txt, such as the period of the chunk, the atmospheric forcing, differences in the set-up, ...

# Folder with the `namelist.wps', `namelist.input' and `geo_em.d[nn].nc' of the experiment
domainHOME = /home/lluis.fita/salidas/EXPS/estudios/dominmios/SA50k

Folder where the workflow will be launched (one more [SimName] level is added to this path)

# Experiment folder
expHOME=/home/lluis.fita/salidas/estudios/MPIsimplest/exp

Folder where the WRF model will run on the computing nodes (two more levels [ExpName]/[SimName] are added to this path). WRF will run in the folder [SimName]/run

# Running folder
runHOME = /home/lluis.fita/salidas/RUNNING

Folder with the compiled version of the WPS (located at [codeHOST])

# Folder with the compiled source of WPS
wpsHOME = /opt/wrf/WRF-4.5/intel/2021.4.0/dmpar/WPS

Folder with the compiled version of the WRF (located at [codeHOST])

# Folder with the compiled source of WRF
wrfHOME = /share/WRF/WRFV3.9.1/ifort/dmpar/WRFV3

Folder where all the output of the model is stored (history files, restarts and a compressed file with the configuration and the standard output of the given run), located at [storageHOST]. Its content will be organized by chunks inside two folders called [ExpName]/[SimName].

# Storage folder of the output
storageHOME = /home/lluis.fita/salidas/EXPS

Whether modules should be loaded (not used on hydra)

# Modules to load ('None' for any)

modulesLOAD = None

Names of the files used to check that the chunk has run properly

# Model reference output names (to be used as checking file names)
nameLISTfile = namelist.input # namelist
nameRSTfile = wrfrst_d01_ # restart file
nameOUTfile = wrfout_d01_ # output file
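One way to check that a chunk has finished is to look for the restart file written at its ending date. A minimal sketch, assuming the restart interval matches the chunk length and the default WRF restart naming [nameRSTfile]YYYY-MM-DD_HH:MM:SS; chunk_done is hypothetical and is not necessarily how WRF4L performs the check:

```bash
#!/usr/bin/env bash
# Hypothetical helper (not the WRF4L check): succeed if the restart file that
# WRF writes at the end date of a chunk exists and is not empty.
# rstprefix is nameRSTfile (e.g. wrfrst_d01_), enddate is YYYYMMDDHHMMSS.
chunk_done() {
  local dir=$1 rstprefix=$2 enddate=$3
  local stamp="${enddate:0:4}-${enddate:4:2}-${enddate:6:2}_${enddate:8:2}:${enddate:10:2}:${enddate:12:2}"
  [ -s "$dir/${rstprefix}${stamp}" ]
}

# Usage: chunk_done "$runHOME/[ExpName]/[SimName]/run" "$nameRSTfile" 19790201000000 && echo done
```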

Extensions of the files with the configuration of WRF (to be retrieved from codeHOST and domainHOME)

# Extensions of the files with the configuration of the model
configEXTS = wps:input

To continue from a previous chunk one needs to use the `restart' files, but they need to be renamed, because otherwise they would be overwritten. Here one specifies the original name of the file [origFile] and the name to be used to avoid overwriting it [destFile]. A bash script handles this and can even deal with the change of dates according to the period of the chunk (':' list of [origFile]@[destFile]). They will be located at [storageHOST].

# restart file names
# ':' list of [tmplrstfilen|[NNNNN1]?[val1]#[...[NNNNNn]?[valn]]@[tmpllinkname]|[NNNNN1]?[val1]#[...[NNNNNn]?[valn]]
#   [tmplrstfilen]: template name of the restart file (if necessary with [NNNNN] variables to be substituted)
#     [NNNNN]: section of the file name to be automatically substituted
#       `[YYYY]': year in 4 digits
#       `[YY]': year in 2 digits
#       `[MM]': month in 2 digits
#       `[DD]': day in 2 digits
#       `[HH]': hour in 2 digits
#       `[SS]': second in 2 digits
#       `[JJJ]': julian day in 3 digits
#     [val]: value to use (which is systematically defined in `run_OR.pbs')
#       `%Y%': year in 4 digits
#       `%y%': year in 2 digits
#       `%m%': month in 2 digits
#       `%d%': day in 2 digits
#       `%h%': hour in 2 digits
#       `%s%': second in 2 digits
#       `%j%': julian day in 3 digits
#   [tmpllinkname]: template name of the link of the restart file (if necessary with [NNNNN] variables to be substituted)
rstFILES=wrfrst_d01_[YYYY]-[MM]-[DD]_[HH]:[MI]:[SS]|YYYY?%Y#MM?%m#DD?%d#HH?%H#MI?%M#SS?%S@wrfrst_d01_[YYYY]-[MM]-[DD]_[HH]:[MI]:[SS]|YYYY?%Y#MM?%m#DD?%d#HH?%H#MI?%M#SS?%S
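The [NNNNN]?[val] substitution can be illustrated with the rstFILES value above. A minimal sketch, assuming GNU date and that each [val] is a date(1) format code, as the %Y, %m, ..., %S of the example value suggest; expand_rst_template is hypothetical, handles only one side of an [origFile]@[destFile] pair, and is not the WRF4L script:

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of WRF4L): expand one side of an rstFILES pair,
# "template|TOKEN?fmt#TOKEN?fmt...", for a given date. Each [TOKEN] in the
# template is replaced by the output of `date +fmt`. Requires GNU date (-d).
expand_rst_template() {
  local spec=$1 when=$2
  local name=${spec%%|*}     # e.g. wrfrst_d01_[YYYY]-[MM]-[DD]_[HH]:[MI]:[SS]
  local subs=${spec#*|}      # e.g. YYYY?%Y#MM?%m#DD?%d#HH?%H#MI?%M#SS?%S
  local pair token fmt value
  local -a pairs
  IFS='#' read -r -a pairs <<< "$subs"
  for pair in "${pairs[@]}"; do
    token=${pair%%\?*}       # text before the first '?'
    fmt=${pair#*\?}          # text after the first '?'
    value=$(date -u -d "$when" "+$fmt")
    name=${name//"[$token]"/$value}   # quoted pattern: literal [TOKEN]
  done
  printf '%s\n' "$name"
}

# Usage: split an rstFILES entry at '@' and expand each side, e.g.
#   expand_rst_template "${entry%%@*}" '1979-02-01 00:00:00'
```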

Folder with the input data (located at [forcingHOST]).

# Folder with the input morphological forcing data
indataHOME = /share/DATA/re-analysis/ERA-Interim

Format of the input data and name of files

# Data format (grib, nc)

indataFMT= grib

# For `grib' format

# Head and tail of indata files names.

# Assuming ${indataFheader}*[YYYY][MM]*${indataFtail}.[grib/nc]

indataFheader=ERAI_

indataFtail=
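To verify that the forcing of a given month can be found with these settings, the file pattern can be expanded by hand. A minimal sketch; indata_files is a hypothetical helper which prints the matching files and returns a non-zero status when none match:

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of WRF4L): list the input files of a given month
# following the pattern ${indataFheader}*[YYYY][MM]*${indataFtail}.[grib/nc]
indata_files() {
  local dir=$1 header=$2 tail=$3 fmt=$4 yyyy=$5 mm=$6
  local f found=1
  for f in "$dir/$header"*"$yyyy$mm"*"$tail.$fmt"; do
    [ -e "$f" ] || continue     # no match: the glob stays literal
    printf '%s\n' "$f"
    found=0
  done
  return "$found"
}

# Usage: indata_files "$indataHOME" "$indataFheader" "$indataFtail" "$indataFMT" 1979 01
```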

In case of netCDF input data, there is a bash script which transforms the data to grib, to be used later by ungrib

Variable table to use in ungrib

# Type of Vtable for ungrib as Vtable.[VtableType]
VtableType=ERA-interim.pl

Folder with the atmospheric forcing data (located at [forcingHOST]).

# For `nc' format
# Folder which contains the atmospheric data to generate the initial state
iniatmosHOME = ./
# Type of atmospheric data to generate the initial state
#   `ECMWFstd': ECMWF 'standard' way ERAI_[pl/sfc][YYYY][MM]_[var1]-[var2].grib
#   `ERAI-IPSL': ECMWF ERA-INTERIM stored in the common IPSL way (.../4xdaily/[AN_PL/AN_SF])
iniatmosTYPE = 'ECMWFstd'

Here one can change values of the template namelist.input: the provided parameters are set to their new values. If a given parameter is not in the template namelist.input, it is added automatically.

## Namelist changes
nlparameters = ra_sw_physics;4,ra_lw_physics;4,time_step;180
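How such `parameter;value' pairs can be applied to a namelist is sketched below with sed. This is an illustration under stated assumptions, not the WRF4L code: apply_nlparameters is hypothetical, requires GNU sed, writes a single value per parameter (collapsing per-domain columns) and only warns about parameters missing from the template instead of adding them:

```bash
#!/usr/bin/env bash
# Hypothetical helper (not the WRF4L implementation): apply an nlparameters
# string ("name;value,name;value,...") to a copy of namelist.input.
# Existing entries get a single value; missing ones are only reported.
apply_nlparameters() {
  local namelist=$1 changes=$2 pair name value
  local -a pairs
  IFS=',' read -r -a pairs <<< "$changes"
  for pair in "${pairs[@]}"; do
    name=${pair%%;*}
    value=${pair#*;}
    if grep -qE "^[[:space:]]*${name}[[:space:]]*=" "$namelist"; then
      # Keep the 'name =' part (and its indentation), replace the values.
      sed -i -E "s/^([[:space:]]*${name}[[:space:]]*=).*/\1 ${value},/" "$namelist"
    else
      echo "WARNING: ${name} not found in ${namelist}" >&2
    fi
  done
}

# Usage: apply_nlparameters namelist.input 'ra_sw_physics;4,ra_lw_physics;4,time_step;180'
```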

Name of WRF's executable (to be found in the [orHOME] folder on [codeHOST])

# Name of the executable
nameEXEC=wrf.exe

':' separated list of netCDF file names from WRF's output which do not need to be kept

# netCDF Files which will not be kept anywhere
NokeptfileNAMES=''

':' separated list of headers of netCDF file names from WRF's output which need to be kept

# Headers of netCDF files need to be kept
HkeptfileNAMES=wrfout_d:wrfxtrm_d:wrfpress_d:wrfcdx_d:wrfhfcdx_d

':' separated list of headers of restart netCDF file names from WRF's output which need to be kept

# Headers of netCDF restart files need to be kept
HrstfileNAMES=wrfrst_d
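Deciding whether an output file is kept then reduces to a prefix match against these ':' lists. A minimal sketch; is_kept is a hypothetical helper, not part of WRF4L:

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of WRF4L): succeed if a WRF output file name
# starts with one of the ':' separated headers (e.g. HkeptfileNAMES or
# HrstfileNAMES). Any leading directory is ignored.
is_kept() {
  local file=${1##*/} h
  local -a headers
  IFS=':' read -r -a headers <<< "$2"
  for h in "${headers[@]}"; do
    case $file in
      "$h"*) return 0 ;;
    esac
  done
  return 1
}

# Usage:
#   for f in wrf*_d0*; do is_kept "$f" "$HkeptfileNAMES:$HrstfileNAMES" && echo "keep $f"; done
```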

Parallel configuration of the run.

# WRF parallel run configuration

## Number of nodes

Nnodes = 1

## Number of mpi procs

Nmpiprocs = 16

## Number of shared memory threads ('None' for no openMP threads)

Nopenthreads = None

## Memory size of shared memory threads

SIZEopenthreads = 200M

## Memory for PBS jobs

MEMjobs = 30gb
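These values map onto the PBS resource request and the OpenMP environment of the jobs. A minimal sketch, assuming Torque-style `nodes=N:ppn=P' requests and that Nmpiprocs is the total number of MPI processes spread evenly over Nnodes; pbs_resources is hypothetical and the actual WRF4L job scripts may build the request differently:

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of WRF4L): turn the parallel settings above into
# Torque-style '#PBS -l' lines and, when threads are used, OpenMP variables.
# Assumes Nmpiprocs is the total number of MPI processes over Nnodes nodes.
pbs_resources() {
  local nnodes=$1 nmpi=$2 nthreads=$3 stacksize=$4 mem=$5
  local threads=1
  if [ "$nthreads" != "None" ]; then threads=$nthreads; fi
  # Cores per node = MPI processes per node x threads per process.
  printf '#PBS -l nodes=%d:ppn=%d\n' "$nnodes" $(( nmpi / nnodes * threads ))
  printf '#PBS -l mem=%s\n' "$mem"
  if [ "$nthreads" != "None" ]; then
    printf 'export OMP_NUM_THREADS=%s\n' "$nthreads"
    printf 'export OMP_STACKSIZE=%s\n' "$stacksize"
  fi
}

# Usage: pbs_resources "$Nnodes" "$Nmpiprocs" "$Nopenthreads" "$SIZEopenthreads" "$MEMjobs"
```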

Generic definitions

## Generic
errormsg=ERROR -- error -- ERROR -- error
warnmsg=WARNING -- warning -- WARNING -- warning