WRF4L

(Filling in the WRF4G page II) |

(→How to simulate) |

||

| (17 intermediate revisions by 2 users not shown) | |||

| Line 3: | Line 3: | ||

Lluís developed a less powerful one which is here described |

Lluís developed a less powerful one which is here described |

||

| − | WRF work-flow management is done via 4 scripts (these are the specifics for hydra [CIMA cluster]): |

+ | WRF work-flow management is done via 5 scripts (these are the specifics for hydra [CIMA cluster]): |

* <code>EXPERIMENTparameters.txt</code>: General ASCII file which configures the experiment and chain of simulations (chunks). This is the only file to modify |

* <code>EXPERIMENTparameters.txt</code>: General ASCII file which configures the experiment and chain of simulations (chunks). This is the only file to modify |

||

| − | * <code>run_experiments.pbs</code>: PBS-queue job which prepares the experiment of the environment |

+ | * <code>wrf4l_experiment.pbs</code>: PBS-queue job which prepares the environment of the experiment |

| − | * <code>run_WPS.pbs</code>: PBS-queue job which launch the WPS section of the model: <code>ungrib.exe</code>, <code>metgrid.exe</code>, <code>real.exe</code> |

+ | * <code>wrf4l_WPS.pbs</code>: PBS-queue job which launches the WPS section of the model: <code>ungrib.exe</code>, <code>metgrid.exe</code>, <code>real.exe</code> |

| + | * <code>wrf4l_WRF.pbs</code>: PBS-queue job which launches <code>wrf.exe</code> |

||

| + | <!---* <code>launch_pbs.bash</code>: Necessary shell script to launch jobs which use more than one node in CIMA's <code>hydra</code> cluster--> |

||

* There is a folder called <code>components</code> with shell and python scripts necessary for the work-flow management |

* There is a folder called <code>components</code> with shell and python scripts necessary for the work-flow management |

||

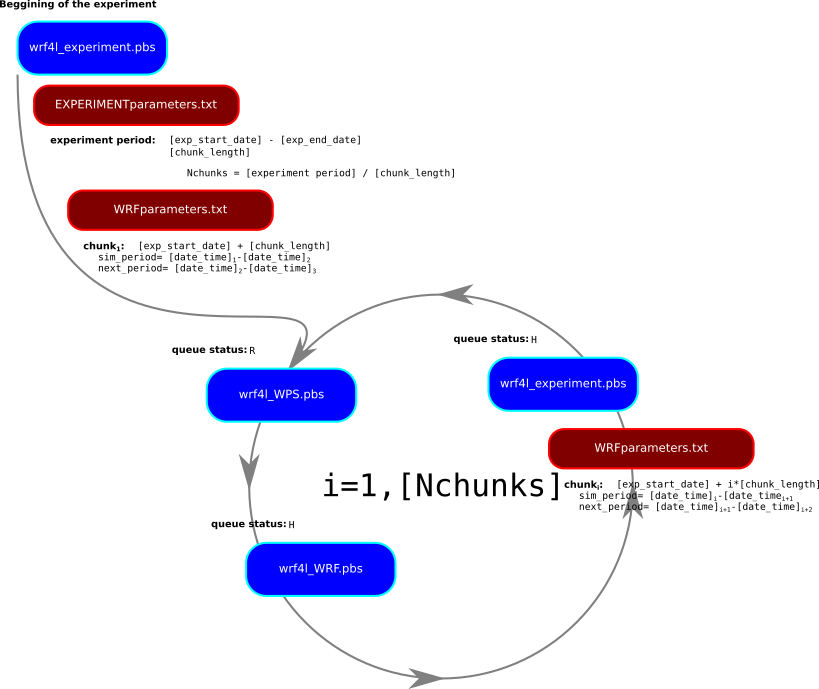

An experiment, which covers a whole simulation period, is divided into '''chunks''': small pieces of time which are manageable by the model. The work-flow follows these steps using <code>wrf4l_experiment.pbs</code>: |

An experiment, which covers a whole simulation period, is divided into '''chunks''': small pieces of time which are manageable by the model. The work-flow follows these steps using <code>wrf4l_experiment.pbs</code>: |

||

# Copy and link all the required files for a given '''chunk''' of the whole period of simulation following the content of <code>EXPERIMENTparameters.txt</code> |

# Copy and link all the required files for a given '''chunk''' of the whole period of simulation following the content of <code>EXPERIMENTparameters.txt</code> |

||

| − | # Launches <code>run_WPS.pbs</code> which will produce the necessary files for the period of the given '''chunk''' |

+ | # Launches <code>wrf4l_WPS.pbs</code> which will produce the necessary files for the period of the given '''chunk''' |

| − | # Launches <code>run_WRF.pbs</code> which will simulated the period of the given '''chunk''' (which waits until the end of <code>run_WPS.pbs</code>) |

+ | # Launches <code>wrf4l_WRF.pbs</code> which will simulate the period of the given '''chunk''' (it waits until the end of <code>wrf4l_WPS.pbs</code>) |

| − | # Launches the next <code>run_experiments.pbs</code> (which waits until the end of <code>run_WRF.pbs</code>) |

+ | # Launches the next <code>wrf4l_experiment.pbs</code> (it waits until the end of <code>wrf4l_WRF.pbs</code>) |

| + | |||

| + | [[File:WRF4L_resize.png]] |

||

All the scripts are located in <code>hydra</code> at: |

All the scripts are located in <code>hydra</code> at: |

||

<pre> |

<pre> |

||

| − | /share/tools/work-flows/WRF4L/hydra |

+ | /share/tools/workflows/WRF4L/hydra |

</pre> |

</pre> |

||

| Line 28: | Line 28: | ||

# copy the WRF4L files to this folder |

# copy the WRF4L files to this folder |

||

<pre> |

<pre> |

||

| − | $ cp /share/tools/work-flows/WRF4L/hydra/EXPERIMENTparameters.txt ./ |

+ | cp /share/tools/workflows/WRF4L/hydra/EXPERIMENTparameters.txt ./ |

| − | $ cp /share/tools/work-flows/WRF4L/hydra/run_experiment.pbs ./ |

+ | cp /share/tools/workflows/WRF4L/hydra/wrf4l_experiment.pbs ./ |

| − | $ cp /share/tools/work-flows/WRF4L/hydra/run_WPS.pbs ./ |

+ | cp /share/tools/workflows/WRF4L/hydra/wrf4l_WPS.pbs ./ |

| − | $ cp /share/tools/work-flows/WRF4L/hydra/run_WRF.pbs ./ |

+ | cp /share/tools/workflows/WRF4L/hydra/wrf4l_WRF.pbs ./ |

</pre> |

</pre> |

||

# Edit the configuration/set-up of the simulation of the experiment |

# Edit the configuration/set-up of the simulation of the experiment |

||

| Line 39: | Line 39: | ||

# Launch the simulation of the experiment |

# Launch the simulation of the experiment |

||

<pre> |

<pre> |

||

| − | $ qsub run_experiment.pbs |

+ | $ qsub wrf4l_experiment.pbs |

</pre> |

</pre> |

||

| − | When it is running one would have (runnig ORCHIDEE job <code>or_[SimName]</code> `R', and <code>exp_[SimName]</code> in hold `H'): |

+ | When it is running, one would see (running WRF job <code>wrf_[SimName]</code> `R', and <code>exp_[SimName]</code> in hold `H'): |

<pre> |

<pre> |

||

$ qstat -u $USER |

$ qstat -u $USER |

||

| Line 56: | Line 56: | ||

In case of crash of the simulation, after fixing the issue, go to <code>[runHOME]/[ExpName]/[SimName]</code> and re-launch the experiment (after the first run the <code>scratch</code> is switched automatically to `false') |

In case of crash of the simulation, after fixing the issue, go to <code>[runHOME]/[ExpName]/[SimName]</code> and re-launch the experiment (after the first run the <code>scratch</code> is switched automatically to `false') |

||

<pre> |

<pre> |

||

| − | $ qsub run_experiment.pbs |

+ | $ qsub wrf4l_experiment.pbs |

</pre> |

</pre> |

||

| Line 62: | Line 62: | ||

Once the experiment runs, one needs to look at the following locations (names follow the variables from <code>EXPERIMENTparameters.txt</code>): |

Once the experiment runs, one needs to look at the following locations (names follow the variables from <code>EXPERIMENTparameters.txt</code>): |

||

* <code>[runHOME]/[ExpName]/[SimName]</code>: Contains the copies of the templates <code>namelist.wps</code>, <code>namelist.input</code> and a file <code>chunk_attemps.inf</code> which counts how many times a '''chunk''' has been attempted (if it reaches 4, <code>WRF4L</code> stops) |

* <code>[runHOME]/[ExpName]/[SimName]</code>: Contains the copies of the templates <code>namelist.wps</code>, <code>namelist.input</code> and a file <code>chunk_attemps.inf</code> which counts how many times a '''chunk''' has been attempted (if it reaches 4, <code>WRF4L</code> stops) |

||

| − | * <code>[runHOME]/[ExpName]/[SimName]/run</code>: actual folder where the computing nodes run the model. In a folder called <code>orout</code> there is a folder for each '''chunk''' with the standard output of the model |

+ | * <code>[runHOME]/[ExpName]/[SimName]/run</code>: actual folder where the computing nodes run the model. In a folder called <code>outwrf</code> there is a folder for each '''chunk''' with the standard output of the model |

| − | * <code>[runHOME]/[ExpName]/[SimName]/run/orout/[YYYYi][MMi][DDi][HHi][MIi][SSi]-[YYYYf][MMf][DDf][HHf][MIf][SSf]</code>: folder with the standard output and all the required files to run a given '''chunk'''. The content of all this folder is compressed and kept in <code>[storageHOME]/[ExpName]/[SimName]/config_[YYYYi][MMi][DDi][HHi][MIi][SSi]-[YYYYf][MMf][DDf][HHf][MIf][SSf].tar.gz</code> |

+ | * <code>[runHOME]/[ExpName]/[SimName]/run/outwrf/[YYYYi][MMi][DDi][HHi][MIi][SSi]-[YYYYf][MMf][DDf][HHf][MIf][SSf]</code>: folder with the standard output and all the required files to run a given '''chunk'''. The content of all this folder is compressed and kept in <code>[storageHOME]/[ExpName]/[SimName]/config_[YYYYi][MMi][DDi][HHi][MIi][SSi]-[YYYYf][MMf][DDf][HHf][MIf][SSf].tar.gz</code> |

* <code>[storageHOME]/[ExpName]/[SimName]</code> (in [storageHOST]): output of the already ran '''chunks''' as <code>[YYYYi][MMi][DDi][HHi][MIi][SSi]-[YYYYf][MMf][DDf][HHf][MIf][SSf]</code> for a chunk from <code>[YYYYi]/[MMi]/[DDi] [HHi]:[MIi]:[SSi]</code> to <code>[YYYYf]/[MMf]/[DDf] [HHf]:[MIf]:[SSf]</code> |

* <code>[storageHOME]/[ExpName]/[SimName]</code> (in [storageHOST]): output of the already ran '''chunks''' as <code>[YYYYi][MMi][DDi][HHi][MIi][SSi]-[YYYYf][MMf][DDf][HHf][MIf][SSf]</code> for a chunk from <code>[YYYYi]/[MMi]/[DDi] [HHi]:[MIi]:[SSi]</code> to <code>[YYYYf]/[MMf]/[DDf] [HHf]:[MIf]:[SSf]</code> |

||

=== When something went wrong === |

=== When something went wrong === |

||

| − | If there has been any problem check the last chunk (in orout/[PERIODchunk]) to try to understand what happens and where the problem comes from: |

+ | If there has been any problem check the last chunk (in outwrf/[PERIODchunk]) to try to understand what happens and where the problem comes from: |

| − | * <code>out_orchidee_[nnnn]</code>: These are the files which content the standard output while running the model. One file for each process. If the problem was something related to model execution and it has been prepared for the error, a correct message must appear. (look first for the largest files... |

+ | * <code>rsl.[error/out].[nnnn]</code>: These files contain the standard output written while running the model, one file per process. If the problem is related to model execution and the model is prepared for that error, an explanatory message should appear (look first at the largest files): |

<pre> |

<pre> |

||

| − | $ ls -lrS out_orchidee_* |

+ | $ ls -lrS rsl.error.* |

</pre> |

</pre> |

||

| − | * <code>run_or.log</code>: These are the files which content the standard output of the model. Search for `segmentation faults' in form of (it might differ): |

+ | * <code>run_wrf.log</code>: This file contains the standard output of the model. Search for `segmentation faults' in a form like the following (it might differ): |

<pre>forrtl: error (63): output conversion error, unit -5, file Internal Formatted Write |

<pre>forrtl: error (63): output conversion error, unit -5, file Internal Formatted Write |

||

Image PC Routine Line Source |

Image PC Routine Line Source |

||

| − | orchidee_ol 00000000032B736A Unknown Unknown Unknown |

+ | wrf.exe 00000000032B736A Unknown Unknown Unknown |

| − | orchidee_ol 00000000032B5EE5 Unknown Unknown Unknown |

+ | wrf.exe 00000000032B5EE5 Unknown Unknown Unknown |

| − | orchidee_ol 0000000003265966 Unknown Unknown Unknown |

+ | wrf.exe 0000000003265966 Unknown Unknown Unknown |

| − | orchidee_ol 0000000003226EB5 Unknown Unknown Unknown |

+ | wrf.exe 0000000003226EB5 Unknown Unknown Unknown |

| − | orchidee_ol 0000000003226671 Unknown Unknown Unknown |

+ | wrf.exe 0000000003226671 Unknown Unknown Unknown |

| − | orchidee_ol 000000000324BC3C Unknown Unknown Unknown |

+ | wrf.exe 000000000324BC3C Unknown Unknown Unknown |

| − | orchidee_ol 0000000003249C94 Unknown Unknown Unknown |

+ | wrf.exe 0000000003249C94 Unknown Unknown Unknown |

| − | orchidee_ol 00000000021B75FB routing_mp_routin 3212 routing.f90 |

+ | wrf.exe 00000000004184DC Unknown Unknown Unknown |

| − | orchidee_ol 000000000217970D routing_mp_routin 2690 routing.f90 |

||

| − | orchidee_ol 00000000020915AE routing_mp_routin 475 routing.f90 |

||

| − | orchidee_ol 0000000000988860 sechiba_mp_sechib 491 sechiba.f90 |

||

| − | orchidee_ol 00000000005E5A45 intersurf_mp_inte 374 intersurf.f90 |

||

| − | orchidee_ol 00000000004DFB82 MAIN__ 1250 dim2_driver.f90 |

||

| − | orchidee_ol 00000000004184DC Unknown Unknown Unknown |

||

libc.so.6 000000319021ECDD Unknown Unknown Unknown |

libc.so.6 000000319021ECDD Unknown Unknown Unknown |

||

| − | orchidee_ol 00000000004183D9 Unknown Unknown Unknown |

+ | wrf.exe 00000000004183D9 Unknown Unknown Unknown |

</pre> |

</pre> |

||

* In <code>[runHOME]/[ExpName]/[SimName]</code>, check the output of the PBS jobs, which are called: |

* In <code>[runHOME]/[ExpName]/[SimName]</code>, check the output of the PBS jobs, which are called: |

||

| − | ** <code>exp_oF-[SimName].o[nnnn]</code>: output of the <code>run_experiment.pbs</code> |

+ | ** <code>exp-[SimName].o[nnnn]</code>: output of the <code>wrf4l_experiment.pbs</code> |

| − | ** <code>or_oF-[SimName].o[nnnn]</code>: output of the <code>run_OR.pbs</code> |

+ | ** <code>wps-[SimName].o[nnnn]</code>: output of the <code>wrf4l_WPS.pbs</code> |

| − | * Check <code>[runHOME]/[ExpName]/[SimName]/run/used_run.def</code> which holds all the parameters (even the default ones) used in the simulation |

+ | ** <code>wrf-[SimName].o[nnnn]</code>: output of the <code>wrf4l_WRF.pbs</code> |

| + | * Check <code>[runHOME]/[ExpName]/[SimName]/run/namelist.output</code> which holds all the parameters (even the default ones) used in the simulation |
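The checks listed above can be partly automated. The following is a minimal Python sketch, not part of WRF4L: the function names and the search strings (`forrtl`, `Segmentation`, `FATAL`) are assumptions chosen for illustration. It sorts the `rsl.error.*` files of a chunk folder by size and extracts suspicious lines.

```python
from pathlib import Path


def largest_rsl_errors(chunk_dir, top=3):
    """Return the `top` largest rsl.error.* files in `chunk_dir`, largest first."""
    files = sorted(
        Path(chunk_dir).glob("rsl.error.*"),
        key=lambda path: path.stat().st_size,
        reverse=True,
    )
    return files[:top]


def lines_matching(path, needles=("forrtl", "Segmentation", "FATAL")):
    """Return the lines of `path` that contain any of `needles`.

    The default needles are illustrative guesses, not an exhaustive list
    of the messages the model can write.
    """
    hits = []
    with open(path, errors="replace") as handle:
        for line in handle:
            if any(needle in line for needle in needles):
                hits.append(line.rstrip("\n"))
    return hits
```

For instance, `largest_rsl_errors("outwrf/[PERIODchunk]")` plays the role of `ls -lrS rsl.error.*`, except that the largest file comes first instead of last.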

||

== EXPERIMENTparameters.txt == |

== EXPERIMENTparameters.txt == |

||

This ASCII file configures the whole simulation. It assumes: |

This ASCII file configures the whole simulation. It assumes: |

||

* Required files, forcings, storage, compiled version of the code might be at different machines. |

* Required files, forcings, storage, compiled version of the code might be at different machines. |

||

| − | * There is a folder with a given template version of the <code>run.def</code> which will be used and changed accordingly to the requirement of the experiments |

+ | * There is a folder with a given template version of the <code>namelist.input</code> which will be used and changed according to the requirements of the experiments |

| + | |||

| + | Location of the WRF4L main folder (example for <code>hydra</code>) |

||

| + | <pre> |

||

| + | # Home of the WRF4L |

||

| + | wrf4lHOME=/share/workflows/WRF4L |

||

| + | </pre> |

||

| + | |||

| + | Name of the machine where the experiment is running ('''NOTE:''' a folder with specific work-flow must exist as <code>$wrf4lHOME/${HPC}</code>) |

||

| + | <pre> |

||

| + | # Machine specific work-flow files for $HPC (a folder with specific work-flow must exist as $wrf4lHOME/${HPC}) |

||

| + | HPC=hydra |

||

| + | </pre> |

||

| + | |||

| + | Name of the compiler used to compile the model ('''NOTE:''' a file called <code>$wrf4lHOME/arch/${HPC}_${compiler}.env</code> must exist) |

||

| + | <pre> |

||

| + | # Compilation (a file called $wrf4lHOME/arch/${HPC}_${compiler}.env must exist) |

||

| + | compiler=intel |

||

| + | </pre> |

||

Name of the experiment |

Name of the experiment |

||

<pre> |

<pre> |

||

# Experiment name |

# Experiment name |

||

| − | ExpName = DiPolo |

+ | ExpName = WRFsensSFC |

</pre> |

</pre> |

||

| Line 105: | Line 105: | ||

<pre> |

<pre> |

||

# Simulation name |

# Simulation name |

||

| − | SimName = OKstomate_CRUNCEP_spinup |

+ | SimName = control |

</pre> |

</pre> |

||

| Line 111: | Line 111: | ||

<pre> |

<pre> |

||

# python binary |

# python binary |

||

| − | pyBIN=/home/lluis.fita/bin/anaconda2/bin/python |

+ | pyBIN=/home/lluis.fita/bin/anaconda2/bin/python2.7 |

</pre> |

</pre> |

||

| Line 123: | Line 123: | ||

<pre> |

<pre> |

||

# Experiment starting date |

# Experiment starting date |

||

| − | exp_start_date = 19580101000000 |

+ | exp_start_date = 19790101000000 |

# Experiment ending date |

# Experiment ending date |

||

exp_end_date = 20150101000000 |

exp_end_date = 20150101000000 |

||

</pre> |

</pre> |

||

| − | Length of the chunks (here and in all ORCHIDEE runs maximum to 1-year!!) |

+ | Length of the chunks (do not make chunks larger than 1-month!!) |

<pre> |

<pre> |

||

# Chunk Length [N]@[unit] |

# Chunk Length [N]@[unit] |

||

# [unit]=[year, month, week, day, hour, minute, second] |

# [unit]=[year, month, week, day, hour, minute, second] |

||

| − | chunk_length = 1@year |

+ | chunk_length = 1@month |

</pre> |

</pre> |
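How the period between `exp_start_date` and `exp_end_date` is cut according to `chunk_length` can be illustrated with a short Python sketch. This is an illustration only, not the code used by WRF4L: the function names are hypothetical, and it assumes that each chunk ends exactly where the next one starts and that the last chunk is clipped to the end date.

```python
import calendar
from datetime import datetime, timedelta

DATE_FMT = "%Y%m%d%H%M%S"  # same layout as exp_start_date / exp_end_date

# Units with a fixed duration; 'year' and 'month' are handled separately.
FIXED_UNITS = {
    "week": timedelta(weeks=1),
    "day": timedelta(days=1),
    "hour": timedelta(hours=1),
    "minute": timedelta(minutes=1),
    "second": timedelta(seconds=1),
}


def add_months(date, months):
    """Shift `date` by whole months, clipping the day to the month length."""
    index = date.year * 12 + (date.month - 1) + months
    year, month = divmod(index, 12)
    month += 1
    day = min(date.day, calendar.monthrange(year, month)[1])
    return date.replace(year=year, month=month, day=day)


def advance(date, chunk_length):
    """Return `date` advanced by a '[N]@[unit]' chunk length."""
    number, unit = chunk_length.split("@")
    number = int(number)
    if unit == "year":
        return add_months(date, 12 * number)
    if unit == "month":
        return add_months(date, number)
    return date + number * FIXED_UNITS[unit]


def chunk_periods(start, end, chunk_length):
    """Yield (start, end) date strings for each chunk of the experiment."""
    current = datetime.strptime(start, DATE_FMT)
    last = datetime.strptime(end, DATE_FMT)
    while current < last:
        following = min(advance(current, chunk_length), last)
        if following <= current:
            raise ValueError("chunk length must be positive")
        yield current.strftime(DATE_FMT), following.strftime(DATE_FMT)
        current = following
```

Joining each pair with `-` gives names of the form `[YYYYi][MMi][DDi][HHi][MIi][SSi]-[YYYYf][MMf][DDf][HHf][MIf][SSf]`, the layout used for the chunk folders.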

||

| Line 138: | Line 138: | ||

* '''NOTE:''' this will only work if the <code>.ssh</code> public/private keys have been set up for each involved USER/HOST. |

* '''NOTE:''' this will only work if the <code>.ssh</code> public/private keys have been set up for each involved USER/HOST. |

||

* '''NOTE 2:''' All the forcings, compiled code, ... are already at <code>hydra</code> at the common space called <code>share</code> |

* '''NOTE 2:''' All the forcings, compiled code, ... are already at <code>hydra</code> at the common space called <code>share</code> |

||

| − | * '''NOTE 3:''' From the computing nodes, one can not access to the <code>/share</code> folder and to any of the CIMA's storage machines: skogul, freyja, ... For that reason, one need to use these system of <code>[USER]@[HOST]</code> accounts. <code>*.pbs</code> scripts uses a series of wrappers of the standard functions: <code>cp, ln, ls, mv, ....</code> which manage them `from' and `to' different pairs of <code>[USER]@[HOST]</code> |

+ | * '''NOTE 3:''' From the computing nodes, one cannot access the <code>/share</code> folder or any of CIMA's storage machines: skogul, freyja, ... For that reason, one needs to use this system of <code>[USER]@[HOST]</code> accounts. The <code>*.pbs</code> scripts use a series of wrappers of the standard commands <code>cp, ln, ls, mv, ....</code> which operate `from' and `to' different pairs of <code>[USER]@[HOST]</code>. '''NOTE:''' This will only work if the public/private ssh key pairs have been set up (see more details at [[llaves_ssh]]) |

<pre> |

<pre> |

||

# Hosts |

# Hosts |

||

| Line 153: | Line 153: | ||

</pre> |

</pre> |

||

| − | Templates of the configuration of ORCHIDEE: <code>run.def</code>, <code>*.xml</code> files. '''NOTE:''' only <code>run.def</code> will be changed according to the content of <code>EXPERIMENTparameters.txt</code> like period of the '''chunk''', atmospheric forcing, differences of the set-up, ... (located in the <code>[codeHOST]</code> |

+ | Templates of the configuration of WRF: <code>namelist.wps</code>, <code>namelist.input</code> files. '''NOTE:''' they will be changed according to the content of <code>EXPERIMENTparameters.txt</code> like period of the '''chunk''', atmospheric forcing, differences of the set-up, ... (located in the <code>[codeHOST]</code>) |

<pre> |

<pre> |

||

| − | # Folder with the `run.def' and `xml' of the experiment |

+ | # Folder with the `namelist.wps', `namelist.input' and `geo_em.d[nn].nc' of the experiment |

| − | domainHOME = /home/lluis.fita/salidas/estudios/dominios/DiPolo/daily |

+ | domainHOME = /home/lluis.fita/salidas/estudios/dominmios/SA50k |

</pre> |

</pre> |

||

| − | Folder where the ORCHIDEE model will run in the computing nodes (on top of that there will be two more folders [ExpName]/[SimName]). ORCHIDEE will run at the folder [ExpName]/[SimName]/run |

+ | Folder where the WRF model will run in the computing nodes (on top of that there will be two more folders [ExpName]/[SimName]). WRF will run at the folder [ExpName]/[SimName]/run |

<pre> |

<pre> |

||

# Running folder |

# Running folder |

||

| − | runHOME = /home/lluis.fita/estudios/DiPolo/sims |

+ | runHOME = /home/lluis.fita/estudios/WRFsensSFC/sims |

</pre> |

</pre> |

||

| − | Folder with the compiled version of the model (located at <code>[codeHOST]</code>) |

+ | Folder with the compiled version of the WPS (located at <code>[codeHOST]</code>) |

<pre> |

<pre> |

||

| − | # Folder with the compiled source of ORCHIDEE |

+ | # Folder with the compiled source of WPS |

| − | orHOME = /share/modipsl/bin/ |

+ | wpsHOME = /share/WRF/WRFV3.9.1/ifort/dmpar/WPS |

| + | </pre> |

||

| + | |||

| + | Folder with the compiled version of the WRF (located at <code>[codeHOST]</code>) |

||

| + | <pre> |

||

| + | # Folder with the compiled source of WRF |

||

| + | wrfHOME = /share/WRF/WRFV3.9.1/ifort/dmpar/WRFV3 |

||

</pre> |

</pre> |

||

| Line 174: | Line 174: | ||

<pre> |

<pre> |

||

# Storage folder of the output |

# Storage folder of the output |

||

| − | storageHOME = /home/lluis.fita/salidas/estudios/DiPolo/sims/output |

+ | storageHOME = /home/lluis.fita/salidas/estudios/WRFsensSFC/sims/output |

</pre> |

</pre> |

||

| Line 181: | Line 181: | ||

# Modules to load ('None' for any) |

# Modules to load ('None' for any) |

||

modulesLOAD = None |

modulesLOAD = None |

||

| − | </pre> |

||

| − | |||

| − | Which kind of simulation will be run (at this time only prepared for 'offline') |

||

| − | <pre> |

||

| − | # Simulation kind |

||

| − | # 'offline': Realistic off-line run, with initial conditions at each change of year |

||

| − | # 'periodic': Realistic off-line run, with the same initial conditions for each year |

||

| − | kindSIM = offline |

||

</pre> |

</pre> |

||

| Line 194: | Line 186: | ||

<pre> |

<pre> |

||

# Model reference output names (to be used as checking file names) |

# Model reference output names (to be used as checking file names) |

||

| − | nameLISTfile = run.def # namelist |

+ | nameLISTfile = namelist.input # namelist |

| − | nameRSTfile = sechiba_rest_out.nc # restart file |

+ | nameRSTfile = wrfrst_d01_ # restart file |

| − | nameOUTfile = sechiba_history.nc # output file |

+ | nameOUTfile = wrfout_d01_ # output file |

</pre> |

</pre> |
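The `name = value` lines shown so far can be read with a few lines of Python. This is a sketch under assumptions, not the parser used by the WRF4L scripts: `parse_parameters` is a hypothetical name, and it assumes that an in-line comment always starts with whitespace followed by `#`, as in the block above.

```python
import re


def parse_parameters(text):
    """Parse 'name = value' lines into a dictionary of strings."""
    params = {}
    for raw in text.splitlines():
        line = raw.strip()
        # Skip empty lines and full-line comments.
        if not line or line.startswith("#"):
            continue
        # Drop a trailing comment only when '#' follows whitespace, because
        # some values (e.g. rstFILES) use '#' as an internal separator.
        line = re.split(r"\s+#", line, maxsplit=1)[0]
        if "=" not in line:
            continue
        name, value = line.split("=", 1)
        params[name.strip()] = value.strip()
    return params
```

Splitting on the first `=` only keeps any later `=` inside the value, and the whitespace rule for comments leaves values that use `#` as a separator untouched.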

||

| − | Extensions of the files which content the configuration of the model |

+ | Extensions of the files with the configuration of WRF (to be retrieved from <code>codeHOST</code> and <code>domainHOME</code>) |

<pre> |

<pre> |

||

# Extensions of the files with the configuration of the model |

# Extensions of the files with the configuration of the model |

||

| − | configEXTS = def:xml |

+ | configEXTS = wps:input |

</pre> |

</pre> |

||

| Line 227: | Line 219: | ||

# `%j%': julian day in 3 digits |

# `%j%': julian day in 3 digits |

||

# [tmpllinkname]: template name of the link of the restart file (if necessary with [NNNNN] variables to be substituted) |

# [tmpllinkname]: template name of the link of the restart file (if necessary with [NNNNN] variables to be substituted) |

||

| − | rstFILES=sechiba_rest_out.nc@sechiba_rst.nc:stomate_rest_out.nc@stomate_rst.nc |

+ | rstFILES=wrfrst_d01_[YYYY]-[MM]-[DD]_[HH]:[MI]:[SS]|YYYY?%Y#MM?%m#DD?%d#HH?%H#MI?%M#SS?%S@wrfrst_d01_[YYYY]-[MM]-[DD]_[HH]:[MI]:[SS]|YYYY?%Y#MM?%m#DD?%d#HH?%H#MI?%M#SS?%S |

</pre> |

</pre> |
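The `rstFILES` syntax can be read as "template, then a list of substitutions". The Python sketch below shows one way to expand a single `[file]@[link]` entry for a given date. It is an illustration with hypothetical function names, not the bash script used by WRF4L, and it handles one entry only: splitting a ':' separated list is left out, because the WRF restart name itself contains ':'.

```python
from datetime import datetime


def expand(template_spec, date):
    """Expand '[template]|NAME1?fmt1#NAME2?fmt2...' for the given date."""
    template, _, substitutions = template_spec.partition("|")
    for pair in filter(None, substitutions.split("#")):
        name, _, fmt = pair.partition("?")
        # Accept both the '%Y' and the '%Y%' spellings of a date code.
        value = date.strftime(fmt.rstrip("%"))
        template = template.replace(f"[{name}]", value)
    return template


def expand_entry(entry, date):
    """Split one '[file]@[link]' entry and expand both sides."""
    file_spec, _, link_spec = entry.partition("@")
    return expand(file_spec, date), expand(link_spec, date)
```

With the value above and the date 1979-02-01 00:00:00, both sides expand to `wrfrst_d01_1979-02-01_00:00:00`.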

||

| − | Folder with the forcing data (located at <code>[forcingHOST]</code>). |

+ | Folder with the input data (located at <code>[forcingHOST]</code>). |

<pre> |

<pre> |

||

# Folder with the input morphological forcing data |

# Folder with the input morphological forcing data |

||

| − | indataHOME = /share/ORCHIDEE/data/IGCM/SRF |

+ | indataHOME = /share/DATA/re-analysis/ERA-Interim |

</pre> |

</pre> |

||

| − | Files to be used as morphological forcings (It uses the same complex bash script as in the restarts) |

+ | Format of the input data and name of files |

<pre> |

<pre> |

||

| − | # ':' separated list of [morphfilen]|[NNNNN1]?[val1]#[...[NNNNNn]?[valn]]@[tpmllinkname]|[NNNNN1]?[val1]#[...[NNNNNn]?[valn]] |

+ | # Data format (grib, nc) |

| − | # [morphfilen]: morphological forcing file (relative to ${indataHOME}) (if necessary with [NNNNN] variables to be substituted) |

+ | indataFMT= grib |

| − | # [tmpllinkname]: template name of the link of the restart file (if necessary with [NNNNN] variables to be substituted) |

+ | # For `grib' format |

| − | indataFILES = albedo/alb_bg_modisopt_2D_ESA_v2.nc@alb_bg_modisopt_2D_ESA.nc:cartepente2d_15min.nc@cartepente2d_15min.nc:carteveg5km.nc@carteveg5km.nc:floodplains.nc@floodplains.nc:lai2D.nc@lai2D.nc:PFTMAPS/CMIP6/ESA-LUH2/historical/v1.2/withNoBio/13PFTmap_[YYYY]_ESA_LUH2v2h_withNoBio_v1.2.nc|YYYY?%Y%@PFTmap_025.nc:PFTmap_IPCC_1850.nc@PFTmap_IPCC.nc:reftemp.nc@reftemp.nc:soils_param.nc@soils_param.nc:soils_param_usda.nc@soils_param_usda.nc:soils_param_usdatop.nc@soils_param_usdatop.nc:routing.nc@routing.nc |

+ | # Head and tail of indata files names. |

| + | # Assuming ${indataFheader}*[YYYY][MM]*${indataFtail}.[grib/nc] |

||

| + | indataFheader=ERAI_ |

||

| + | indataFtail= |

||

</pre> |

</pre> |

||

| − | Folder with the atmospheric forcing data (located at <code>[forcingHOST]</code>). |

+ | In case of netCDF input data, there is a bash script which transforms the data to grib, to be used later by <code>ungrib</code> |

| − | <pre> |

||

| − | # Folder which contents the atmospheric data to force the model (here an example for CRU-NCEP v5.4 half degree at <code>hydra</code>) |

||

| − | iniatmosHOME = /share/ORCHIDEE/data/IGCM/SRF/METEO/CRU-NCEP/v5.4/halfdeg |

||

| − | </pre> |

||

| − | Files to be used as atmospheric forcings (It uses the same complex bash script as in the restarts). Files must be located at <code>[forcingHOST]</code>. In this example a CRU-NCEP file which is called <code>cruncep_halfdeg_[YYYY].nc</code> (where <code>[YYYY]</code> is for a year in four digits). It is said to change the <code>[YYYY]</code> by <code>%Y%</code> which will be the year of the '''chunk''' with four digits (C-like) |

+ | Variable table to use in <code>ungrib</code> |

<pre> |

<pre> |

||

| − | # ':' list of [atmosfilen]|[NNNNN1]?[val1]:[...[NNNNNn]?[valn]]@[tpmllinkname]|[NNNNN1]?[val1]#[...[NNNNNn]?[valn]] |

+ | # Type of Vtable for ungrib as Vtable.[VtableType] |

| − | # [filenTMPL]: template of the atmospheric data file name with [NNNN] variables to be substitued |

+ | VtableType=ERA-interim.pl |

| − | # [tmpllinkname]: template name of the link of the restart file (if necessary with [NNNNN] variables to be substituted) |

||

| − | filenameTMPL = cruncep_halfdeg_[YYYY].nc|YYYY?%Y%@atmos_forcing.nc |

||

</pre> |

</pre> |

||

| − | Name of the files with the set-up of the model |

+ | Folder with the atmospheric forcing data (located at <code>[forcingHOST]</code>). |

<pre> |

<pre> |

||

| − | ## configuration files (':' separated list) |

+ | # For `nc' format |

| − | ORdef = run.def |

+ | # Folder which contains the atmospheric data to generate the initial state |

| − | ORxml = context_orchidee.xml:field_def_orchidee.xml:file_def_orchidee.xml:iodef.xml |

+ | iniatmosHOME = ./ |

| + | # Type of atmospheric data to generate the initial state |

||

| + | # `ECMWFstd': ECMWF 'standard' way ERAI_[pl/sfc][YYYY][MM]_[var1]-[var2].grib |

||

| + | # `ERAI-IPSL': ECMWF ERA-INTERIM stored in the common IPSL way (.../4xdaily/[AN\_PL/AN\_SF]) |

||

| + | iniatmosTYPE = 'ECMWFstd' |

||

</pre> |

</pre> |

||

| − | Here on can change values on the template <code>run.def</code>. It will change the values of the provided parameters with a new value. If the given parameter is not in the template of the <code>run.def</code> it will be automatically added. |

+ | Here one can change values in the template <code>namelist.input</code>. The values of the provided parameters are replaced by the new ones. If a given parameter is not in the template <code>namelist.input</code>, it is added automatically. |

<pre> |

<pre> |

||

| − | ## def,xml changes ([fileA]@[parm1]:[val1];...;[parmN]:[valN]|...|[fileZ]@....) |

+ | ## Namelist changes |

| − | nlparametres = run.def@STOMATE_OK_STOMATE:y;STOMATE_OK_CO2:y |

+ | nlparameters = ra_sw_physics;4,ra_lw_physics;4,time_step;180 |

</pre> |

</pre> |
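As an illustration of the `nlparameters` syntax, the following Python sketch parses the `name;value` pairs and replaces the values of parameters already present in a namelist text. The function names are hypothetical and this is not the WRF4L implementation. It writes a single value per parameter, and it only reports parameters missing from the template instead of adding them, since the namelist section they would go to is not stated here.

```python
import re


def parse_nlparameters(spec):
    """Turn 'name1;value1,name2;value2' into a dictionary of strings."""
    changes = {}
    for item in spec.split(","):
        name, _, value = item.partition(";")
        changes[name.strip()] = value.strip()
    return changes


def apply_changes(namelist_text, changes):
    """Replace the values of parameters found in `namelist_text`.

    Returns the new text and the list of parameter names that were not
    found in the template.
    """
    missing = []
    for name, value in changes.items():
        pattern = re.compile(
            rf"^([ \t]*{re.escape(name)}[ \t]*=[ \t]*).*$", re.MULTILINE
        )
        # Keep the 'name = ' part and write the new value with a trailing comma.
        namelist_text, count = pattern.subn(
            lambda match: match.group(1) + value + ",", namelist_text
        )
        if count == 0:
            missing.append(name)
    return namelist_text, missing
```

With the example above, `time_step` and `ra_sw_physics` would be rewritten in place, while a parameter absent from the template (such as `ra_lw_physics` in a template that lacks it) would be returned in the `missing` list.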

||

| − | Name of ORCHIDEE's executable (to be localized at <code>[orHOME]</code> folder from <code>[codeHOST]</code>) |

+ | Name of WRF's executable (to be found in the <code>[wrfHOME]</code> folder on <code>[codeHOST]</code>) |

<pre> |

<pre> |

||

# Name of the executable |

# Name of the executable |

||

| − | nameEXEC=orchidee_ol |

+ | nameEXEC=wrf.exe |

</pre> |

</pre> |

||

| − | ':' separated list of netCDF file names from ORCHIDEE's output which do not need to be kept |

+ | ':' separated list of netCDF file names from WRF's output which do not need to be kept |

<pre> |

<pre> |

||

# netCDF Files which will not be kept anywhere |

# netCDF Files which will not be kept anywhere |

||

| Line 277: | Line 269: | ||

</pre> |

</pre> |

||

| − | ':' separated list of headers of netCDF file names from ORCHIDEE's output which need to be kept |

+ | ':' separated list of headers of netCDF file names from WRF's output which need to be kept |

<pre> |

<pre> |

||

# Headers of netCDF files need to be kept |

# Headers of netCDF files need to be kept |

||

| − | HkeptfileNAMES=sechiba_history:stomate_history:sechiba_history_4dim:sechiba_history_alma |

+ | HkeptfileNAMES=wrfout_d:wrfxtrm_d:wrfpress_d:wrfcdx_d |

</pre> |

</pre> |

||

| − | ':' separated list of headers of restarts netCDF file names from ORCHIDEE's output which need to be kept |

+ | ':' separated list of headers of restarts netCDF file names from WRF's output which need to be kept |

<pre> |

<pre> |

||

# Headers of netCDF restart files need to be kept |

# Headers of netCDF restart files need to be kept |

||

| − | HrstfileNAMES=sechiba_rest_out:stomate_rest_out |

+ | HrstfileNAMES=wrfrst_d |

| − | </pre> |

||

| − | |||

| − | ORCHIDEE off-line can not run with the parallel-netCDF. For that reason, output files are written for each computing node. At the end of the simulation they need to be concatenated with the tool <code>flio_rbld</code> (Already compiled in <code>hydra</code>). This is done automatically at the end of the simulation. (to be found at <code>[codeHOST]</code>) |

||

| − | <pre> |

||

| − | # Extras. rebuild program folder |

||

| − | binREBUILD = /share/modipsl_IOIPSLtools/bin |

||

</pre> |

</pre> |

||

| − | Parallel configuration of the run. '''NOTE:''' ORCHIDEE off-line can not be run using sharing memory |

+ | Parallel configuration of the run. |

<pre> |

<pre> |

||

| − | # ORCHIDEE parallel run configuration |

+ | # WRF parallel run configuration |

## Number of nodes |

## Number of nodes |

||

Nnodes = 1 |

Nnodes = 1 |

||

| Line 300: | Line 292: | ||

## Memory size of shared memory threads |

## Memory size of shared memory threads |

||

SIZEopenthreads = 200M |

SIZEopenthreads = 200M |

||

| + | ## Memory for PBS jobs |

||

| + | MEMjobs = 30gb |

||

</pre> |

</pre> |

||

Latest revision as of 12:53, 27 Sep 2023

There is a far more powerful tool to manage the WRF work-flow: WRF4G.

Lluís developed a less powerful one, which is described here.

WRF work-flow management is done via 5 scripts (these are the specifics for hydra [CIMA cluster]):

- EXPERIMENTparameters.txt: General ASCII file which configures the experiment and chain of simulations (chunks). This is the only file to modify
- wrf4l_experiment.pbs: PBS-queue job which prepares the environment of the experiment
- wrf4l_WPS.pbs: PBS-queue job which launches the WPS section of the model: ungrib.exe, metgrid.exe, real.exe
- wrf4l_WRF.pbs: PBS-queue job which launches wrf.exe
- There is a folder called components with shell and python scripts necessary for the work-flow management

An experiment, which covers a whole simulation period, is divided into chunks: small pieces of time which are manageable by the model. The work-flow follows these steps using wrf4l_experiment.pbs:

- Copy and link all the required files for a given chunk of the whole period of simulation following the content of EXPERIMENTparameters.txt
- Launch wrf4l_WPS.pbs, which will produce the necessary files for the period of the given chunk
- Launch wrf4l_WRF.pbs, which will simulate the period of the given chunk (it waits until the end of wrf4l_WPS.pbs)
- Launch the next wrf4l_experiment.pbs (it waits until the end of wrf4l_WRF.pbs)
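The "waits until the end of" relation between the three jobs can be expressed with PBS job dependencies. The Python sketch below only builds the `qsub` command lines and submits nothing. It is an assumption about how such a chain could be written, not a description of what the WRF4L scripts do internally: the function names are hypothetical, and so is the choice of the `afterany` dependency type.

```python
def qsub_command(script, after_job=None):
    """Return the argument list to submit `script` with qsub.

    If `after_job` is given, the new job is held until that job ends
    (PBS dependency type `afterany`, an assumption of this sketch).
    """
    command = ["qsub"]
    if after_job is not None:
        command += ["-W", f"depend=afterany:{after_job}"]
    command.append(script)
    return command


def chunk_chain(wps_id, wrf_id):
    """Commands for one chunk: WPS, then WRF, then the next experiment job."""
    return [
        qsub_command("wrf4l_WPS.pbs"),
        qsub_command("wrf4l_WRF.pbs", after_job=wps_id),
        qsub_command("wrf4l_experiment.pbs", after_job=wrf_id),
    ]


if __name__ == "__main__":
    # The job ids are placeholders; in practice they are what qsub prints.
    for command in chunk_chain("397.hydra", "398.hydra"):
        print(" ".join(command))
```

Jobs submitted with such a dependency appear in hold (`H`) in `qstat` until the job they depend on has finished.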

All the scripts are located in hydra at:

/share/tools/workflows/WRF4L/hydra


How to simulate

- Create a new folder from which to launch the experiment [ExperimentName] (e.g. somewhere in $HOME)

$ mkdir [ExperimentName]
$ cd [ExperimentName]

- copy the WRF4L files to this folder

cp /share/tools/workflows/WRF4L/hydra/EXPERIMENTparameters.txt ./ cp /share/tools/workflows/WRF4L/hydra/wrf4l_experiment.pbs ./ cp /share/tools/workflows/WRF4L/hydra/wrf4l_WPS.pbs ./ cp /share/tools/workflows/WRF4L/hydra/wrf4l_WRF.pbs ./

- Edit the configuration/set-up of the simulation of the experiment

$ vim EXPERIMENTparameters.txt

- Launch the simulation of the experiment

$ qsub wrf4l_experiment.pbs

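While it is running, the queue shows the active job in state `R' and the jobs waiting for it in hold `H' (in the example below wps_[SimName] is running, while wrf_[SimName] and exp_[SimName] are on hold):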

$ qstat -u $USER

hydra:
                                                                         Req'd  Req'd   Elap
Job ID               Username Queue    Jobname          SessID NDS   TSK Memory Time  S Time
-------------------- -------- -------- ---------------- ------ ----- --- ------ ----- - -----
397.hydra            lluis.fi larga    wps_              27567     1  16   20gb 168:0 R    --
398.hydra            lluis.fi larga    wrf_                 --     1   1   20gb 168:0 H    --
399.hydra            lluis.fi larga    exp_                 --     1   1    2gb 168:0 H    --

If the simulation crashes, fix the issue, go to [runHOME]/[ExpName]/[SimName] and re-launch the experiment (after the first run, scratch is automatically switched to `false')

$ qsub wrf4l_experiment.pbs

Checking the experiment

Once the experiment is running, one needs to look at the following locations (using the names of the variables from EXPERIMENTparameters.txt):

- [runHOME]/[ExpName]/[SimName]: contains the copies of the templates namelist.wps, namelist.input and a file chunk_attemps.inf which counts how many times a chunk has been attempted (if it reaches 4 attempts, WRF4L is stopped)
- [runHOME]/[ExpName]/[SimName]/run: actual folder where the computing nodes run the model. In a folder called outwrf there is a folder for each chunk with the standard output of the model
- [runHOME]/[ExpName]/[SimName]/run/outwrf/[YYYYi][MMi][DDi][HHi][MIi][SSi]-[YYYYf][MMf][DDf][HHf][MIf][SSf]: folder with the standard output and all the required files to run a given chunk. The content of this folder is compressed and kept in [storageHOME]/[ExpName]/[SimName]/config_[YYYYi][MMi][DDi][HHi][MIi][SSi]-[YYYYf][MMf][DDf][HHf][MIf][SSf].tar.gz
- [storageHOME]/[ExpName]/[SimName] (in [storageHOST]): output of the chunks already run, stored as [YYYYi][MMi][DDi][HHi][MIi][SSi]-[YYYYf][MMf][DDf][HHf][MIf][SSf] for a chunk from [YYYYi]/[MMi]/[DDi] [HHi]:[MIi]:[SSi] to [YYYYf]/[MMf]/[DDf] [HHf]:[MIf]:[SSf]

When something went wrong

If there has been any problem, check the last chunk (in outwrf/[PERIODchunk]) to try to understand what happened and where the problem comes from:

- rsl.[error/out].[nnnn]: These are the files which contain the standard output while running the model, one file for each process. If the problem is related to the model execution and the model is prepared for that error, a meaningful message should appear (look first at the largest files):

$ ls -lrS rsl.error.*

- run_wrf.log: This file contains the standard output of the model. Search for `segmentation faults', which appear in a form like (it might differ):

forrtl: error (63): output conversion error, unit -5, file Internal Formatted Write
Image              PC                Routine            Line        Source
wrf.exe            00000000032B736A  Unknown               Unknown  Unknown
wrf.exe            00000000032B5EE5  Unknown               Unknown  Unknown
wrf.exe            0000000003265966  Unknown               Unknown  Unknown
wrf.exe            0000000003226EB5  Unknown               Unknown  Unknown
wrf.exe            0000000003226671  Unknown               Unknown  Unknown
wrf.exe            000000000324BC3C  Unknown               Unknown  Unknown
wrf.exe            0000000003249C94  Unknown               Unknown  Unknown
wrf.exe            00000000004184DC  Unknown               Unknown  Unknown
libc.so.6          000000319021ECDD  Unknown               Unknown  Unknown
wrf.exe            00000000004183D9  Unknown               Unknown  Unknown

- In [runHOME]/[ExpName]/[SimName], check the output of the PBS jobs, which are called:
  - exp-[SimName].o[nnnn]: output of wrf4l_experiment.pbs
  - wps-[SimName].o[nnnn]: output of wrf4l_WPS.pbs
  - wrf-[SimName].o[nnnn]: output of wrf4l_WRF.pbs
- Check [runHOME]/[ExpName]/[SimName]/run/namelist.output, which holds all the parameters (even the default ones) used in the simulation
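The first two checks of the list above can be done in one pass over a chunk folder. This is a minimal sketch: the function scan_chunk and the search patterns are assumptions chosen for illustration, they are not part of WRF4L, and the demonstration folder is a throw-away one created only to make the example self-contained.

```shell
# Hypothetical helper: print the files of a chunk folder which mention
# one of the (assumed) error patterns.
scan_chunk() {
  # $1: chunk folder, e.g. [runHOME]/[ExpName]/[SimName]/run/outwrf/[PERIODchunk]
  # -l: only file names, -i: ignore case, -E: extended regular expression
  grep -liE 'forrtl|segmentation fault|fatal' \
    "$1"/rsl.error.* "$1"/run_wrf.log 2>/dev/null
}

# Self-contained demonstration on a temporary folder
demo=$(mktemp -d)
echo 'forrtl: error (63): output conversion error' > "$demo/run_wrf.log"
echo 'wrf: SUCCESS COMPLETE WRF' > "$demo/rsl.error.0000"
hits=$(scan_chunk "$demo")
rm -rf "$demo"
echo "$hits"
```

On a real chunk, the function would be called with the path of the last folder inside outwrf.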

EXPERIMENTparameters.txt

This ASCII file configures the whole simulation. It assumes:

- Required files, forcings, storage and the compiled version of the code may be on different machines.
- There is a folder with a given template version of the namelist.input which will be used and changed according to the requirements of the experiments

Location of the WRF4L main folder (example for hydra)

# Home of the WRF4L
wrf4lHOME=/share/workflows/WRF4L

Name of the machine where the experiment is running (NOTE: a folder with specific work-flow must exist as $wrf4lHOME/${HPC})

# Machine specific work-flow files for $HPC (a folder with specific work-flow must exist as $wrf4lHOME/${HPC})

HPC=hydra

Name of the compiler used to compile the model (NOTE: a file called $wrf4lHOME/arch/${HPC}_${compiler}.env must exist)

# Compilation (a file called $wrf4lHOME/arch/${HPC}_${compiler}.env must exist)

compiler=intel

Name of the experiment

# Experiment name
ExpName = WRFsensSFC

Name of the simulation. It is understood here that a given experiment can have the model configured with different set-ups (each one identified by a different simulation name)

# Simulation name
SimName = control

Python 2.x binary to be used

# python binary
pyBIN=/home/lluis.fita/bin/anaconda2/bin/python2.7

Whether this simulation should be run from the beginning or not. If it is set to `true', it will remove all the pre-existing content of the folder [ExpName]/[SimName] in the running and in the storage spaces. Be careful. If it is set to `false', the simulation will continue from the last successfully run chunk (checking the restart files).

# Start from the beginning (keeping folder structure)
scratch = false

Period of the simulation (in this example from 1 January 1979 to 1 January 2015)

# Experiment starting date
exp_start_date = 19790101000000
# Experiment ending date
exp_end_date = 20150101000000

Length of the chunks (do not make chunks longer than 1 month!)

# Chunk Length [N]@[unit]
# [unit]=[year, month, week, day, hour, minute, second]
chunk_length = 1@month
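To make the relation between the period and chunk_length concrete, the sketch below splits a short period into chunk labels of the form [start]-[end] used for the chunk folders. It is only an illustration and not the code of WRF4L: it assumes GNU date (option -d), handles only day-aligned units, and assumes that the end of one chunk is the start of the next one.

```shell
# Hypothetical sketch: split an experiment period into chunk labels.
# Assumes GNU date (-d) and chunk limits at 00:00:00 of a day.
exp_start_date=19790101000000
exp_end_date=19790401000000
chunk_length="1@month"

n=${chunk_length%%@*}      # number of units
unit=${chunk_length#*@}    # unit name

case $unit in
  year|month|week|day) ;;
  *) echo "unit '$unit' is not handled by this sketch" >&2; exit 1 ;;
esac

chunks=""
cur=$exp_start_date
while [ "$cur" -lt "$exp_end_date" ]; do
  # YYYYMMDDHHMISS -> YYYY-MM-DD, which GNU date can parse
  day=$(echo "$cur" | sed -E 's/^(.{4})(.{2})(.{2}).*/\1-\2-\3/')
  nxt=$(date -u -d "$day +$n $unit" +%Y%m%d)000000
  # Do not run past the end of the experiment
  if [ "$nxt" -gt "$exp_end_date" ]; then nxt=$exp_end_date; fi
  chunks="$chunks$cur-$nxt
"
  cur=$nxt
done
printf '%s' "$chunks"
```

With the values above, three monthly chunks are listed, one per line.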

Selection of the machines, and of the user on each machine, where the different required files are located and where the output should be placed.

- NOTE: this will only work if one has set up the .ssh public/private keys for each involved USER/HOST.
- NOTE 2: All the forcings, compiled code, ... are already on hydra in the common space called share
- NOTE 3: From the computing nodes, one cannot access the /share folder nor any of CIMA's storage machines: skogul, freyja, ... For that reason, one needs to use this system of [USER]@[HOST] accounts. The *.pbs scripts use a series of wrappers of the standard functions cp, ln, ls, mv, ... which manage them `from' and `to' different pairs of [USER]@[HOST]. NOTE: This will only work if the public/private ssh key pairs have been set up (see more details at llaves_ssh)

# Hosts
# list of different hosts and specific user
# [USER]@[HOST]
# NOTE: this will only work if public keys have been set-up
##
# Host with compiled code, namelist templates
codeHOST=lluis.fita@hydra
# forcing Host with forcings (atmospherics and morphologicals)
forcingHOST=lluis.fita@hydra
# output Host with storage of output (including restarts)
outHOST=lluis.fita@hydra

Templates of the configuration of WRF: the namelist.wps and namelist.input files (located at [codeHOST]). NOTE: they will be changed according to the content of EXPERIMENTparameters.txt, like the period of the chunk, the atmospheric forcing, differences of the set-up, ...

# Folder with the `namelist.wps', `namelist.input' and `geo_em.d[nn].nc' of the experiment
domainHOME = /home/lluis.fita/salidas/estudios/dominmios/SA50k

Folder where the WRF model will run on the computing nodes (two more folder levels, [ExpName]/[SimName], are created inside it). WRF will run in the folder [ExpName]/[SimName]/run

# Running folder
runHOME = /home/lluis.fita/estudios/WRFsensSFC/sims

Folder with the compiled version of the WPS (located at [codeHOST])

# Folder with the compiled source of WPS
wpsHOME = /share/WRF/WRFV3.9.1/ifort/dmpar/WPS

Folder with the compiled version of the WRF (located at [codeHOST])

# Folder with the compiled source of WRF
wrfHOME = /share/WRF/WRFV3.9.1/ifort/dmpar/WRFV3

Folder to store all the output of the model (history files, restarts and a compressed file with the content of the configuration and the standard output of the given run). The content of the folder will be organized by chunks (located at [storageHOST])

# Storage folder of the output
storageHOME = /home/lluis.fita/salidas/estudios/WRFsensSFC/sims/output

Whether modules should be loaded (not used for hydra)

# Modules to load ('None' for any)

modulesLOAD = None

Names of the files used to check that the chunk has run properly

# Model reference output names (to be used as checking file names)
nameLISTfile = namelist.input # namelist
nameRSTfile = wrfrst_d01_ # restart file
nameOUTfile = wrfout_d01_ # output file

Extensions of the files with the configuration of WRF (to be retrieved from codeHOST and domainHOME)

# Extensions of the files with the configuration of the model
configEXTS = wps:input

To continue from a previous chunk one needs to use the `restart' files. But they need to be renamed, because otherwise they will be overwritten. Here one specifies the original name of the file [origFile] and the name to be used to avoid the overwriting [destFile]. It uses a complex bash script which can even deal with the change of dates according to the period of the chunk (':' list of [origFile]@[destFile]). They will be located at the [storageHOST]

# restart file names
# ':' list of [tmplrstfilen|[NNNNN1]?[val1]#[...[NNNNNn]?[valn]]@[tmpllinkname]|[NNNNN1]?[val1]#[...[NNNNNn]?[valn]]
# [tmplrstfilen]: template name of the restart file (if necessary with [NNNNN] variables to be substituted)
# [NNNNN]: section of the file name to be automatically substituted
#   `[YYYY]': year in 4 digits
#   `[YY]': year in 2 digits
#   `[MM]': month in 2 digits
#   `[DD]': day in 2 digits
#   `[HH]': hour in 2 digits
#   `[SS]': second in 2 digits
#   `[JJJ]': julian day in 3 digits
# [val]: value to use (which is systematically defined in `run_OR.pbs')
#   `%Y%': year in 4 digits
#   `%y%': year in 2 digits
#   `%m%': month in 2 digits
#   `%d%': day in 2 digits
#   `%h%': hour in 2 digits
#   `%s%': second in 2 digits
#   `%j%': julian day in 3 digits
# [tmpllinkname]: template name of the link of the restart file (if necessary with [NNNNN] variables to be substituted)
rstFILES=wrfrst_d01_[YYYY]-[MM]-[DD]_[HH]:[MI]:[SS]|YYYY?%Y#MM?%m#DD?%d#HH?%H#MI?%M#SS?%S@wrfrst_d01_[YYYY]-[MM]-[DD]_[HH]:[MI]:[SS]|YYYY?%Y#MM?%m#DD?%d#HH?%H#MI?%M#SS?%S
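As an illustration of how one side of a rstFILES entry could be turned into an actual file name, the sketch below fills the [NNNNN] sections of the template with values of a given date. It is a simplified reading of the syntax and not the bash script of WRF4L: it assumes GNU date, treats each [val] as a date format, and the variables entry and when are hypothetical.

```shell
# Hypothetical sketch: expand one '[template]|KEY?format#...' entry for a date.
entry='wrfrst_d01_[YYYY]-[MM]-[DD]_[HH]:[MI]:[SS]|YYYY?%Y#MM?%m#DD?%d#HH?%H#MI?%M#SS?%S'
when='1979-02-01 00:00:00'   # illustrative date of the restart

name=${entry%%\|*}   # file-name template, before '|'
subs=${entry#*\|}    # 'KEY?format' pairs, after '|'

# Split the pairs on '#' (globbing disabled because the pairs contain '?')
set -f
old_ifs=$IFS; IFS='#'; set -- $subs; IFS=$old_ifs
set +f

for pair in "$@"; do
  key=${pair%%\?*}   # e.g. YYYY
  fmt=${pair#*\?}    # e.g. %Y
  val=$(date -u -d "$when" "+$fmt")
  # Replace the literal section [KEY] by its value
  name=$(printf '%s' "$name" | sed "s/\[$key\]/$val/g")
done
echo "$name"
```

The same expansion applied to the part after `@' would give the name of the link.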

Folder with the input data (located at [forcingHOST]).

# Folder with the input morphological forcing data
indataHOME = /share/DATA/re-analysis/ERA-Interim

Format of the input data and name of files

# Data format (grib, nc)

indataFMT= grib

# For `grib' format

# Head and tail of indata files names.

# Assuming ${indataFheader}*[YYYY][MM]*${indataFtail}.[grib/nc]

indataFheader=ERAI_

indataFtail=

In case of netCDF input data, there is a bash script which transforms the data to grib, to be used later by ungrib

Variable table to use in ungrib

# Type of Vtable for ungrib as Vtable.[VtableType]
VtableType=ERA-interim.pl

Folder with the atmospheric forcing data (located at [forcingHOST]).

# For `nc' format
# Folder which contains the atmospheric data to generate the initial state
iniatmosHOME = ./
# Type of atmospheric data to generate the initial state
#   `ECMWFstd': ECMWF 'standard' way ERAI_[pl/sfc][YYYY][MM]_[var1]-[var2].grib
#   `ERAI-IPSL': ECMWF ERA-INTERIM stored in the common IPSL way (.../4xdaily/[AN_PL/AN_SF])
iniatmosTYPE = 'ECMWFstd'

Here one can change values of the template namelist.input. Each listed parameter gets the new value provided. If a given parameter is not in the template of the namelist.input, it will be added automatically.

## Namelist changes
nlparameters = ra_sw_physics;4,ra_lw_physics;4,time_step;180
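The effect of nlparameters on a namelist can be sketched as follows. This is an illustration only, not the script of WRF4L: the small namelist is a stand-in created for the example, only parameters already present are handled (adding missing ones is left out), and a single value per parameter is assumed.

```shell
# Hypothetical sketch: apply 'name;value' pairs to a namelist file.
nlparameters='ra_sw_physics;4,ra_lw_physics;4,time_step;180'

# Throw-away namelist standing in for the real template
nml=$(mktemp)
cat > "$nml" <<'EOF'
&domains
 time_step = 300,
/
&physics
 ra_sw_physics = 1,
 ra_lw_physics = 1,
/
EOF

# Split the list on ','
old_ifs=$IFS; IFS=','; set -- $nlparameters; IFS=$old_ifs

for change in "$@"; do
  par=${change%%;*}   # parameter name
  val=${change#*;}    # new value
  # Rewrite the line 'par = ...' keeping its indentation
  sed -E "s/^([[:space:]]*$par[[:space:]]*=).*/\1 $val,/" "$nml" > "$nml.new"
  mv "$nml.new" "$nml"
done

result=$(cat "$nml")
rm -f "$nml"
echo "$result"
```

The printed namelist shows the three parameters with their new values and all other lines unchanged.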

Name of WRF's executable (to be located in the [orHOME] folder at [codeHOST])

# Name of the executable
nameEXEC=wrf.exe

':' separated list of netCDF file names from WRF's output which do not need to be kept

# netCDF Files which will not be kept anywhere
NokeptfileNAMES=''

':' separated list of headers of netCDF file names from WRF's output which need to be kept

# Headers of netCDF files need to be kept
HkeptfileNAMES=wrfout_d:wrfxtrm_d:wrfpress_d:wrfcdx_d

':' separated list of headers of restarts netCDF file names from WRF's output which need to be kept

# Headers of netCDF restart files need to be kept
HrstfileNAMES=wrfrst_d
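A ':' separated list of headers such as HkeptfileNAMES can be used to decide, file by file, what is kept. The sketch below is an assumption about how such a test might look: the function is_kept is hypothetical and the file names are illustrative.

```shell
# Hypothetical sketch: is a file name selected by a ':' list of headers?
HkeptfileNAMES='wrfout_d:wrfxtrm_d:wrfpress_d:wrfcdx_d'

# Succeeds when the file name starts with one of the headers.
# The function body is a subshell, so the change of IFS stays local.
is_kept() (
  IFS=':'
  for hdr in $HkeptfileNAMES; do
    case $1 in
      "$hdr"*) exit 0 ;;
    esac
  done
  exit 1
)

for f in wrfout_d01_1979-01-01_00:00:00 wrfrst_d01_1979-02-01_00:00:00; do
  if is_kept "$f"; then echo "keep $f"; else echo "skip $f"; fi
done
```

In this sketch the restart file is skipped only because it is tested against HkeptfileNAMES; restarts have their own list, HrstfileNAMES.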

Parallel configuration of the run.

# WRF parallel run configuration

## Number of nodes

Nnodes = 1

## Number of mpi procs

Nmpiprocs = 16

## Number of shared memory threads ('None' for no openMP threads)

Nopenthreads = None

## Memory size of shared memory threads

SIZEopenthreads = 200M

## Memory for PBS jobs

MEMjobs = 30gb

Generic definitions

## Generic
errormsg=ERROR -- error -- ERROR -- error
warnmsg=WARNING -- warning -- WARNING -- warning
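Since the file is a list of `name = value' lines with `#' comments, a single value can be read back with standard tools. The function get_param below is a hypothetical helper, not part of WRF4L, and the small file is a stand-in for EXPERIMENTparameters.txt. As written it cuts the value at the first `#', so it is not suitable for values which contain that character, such as rstFILES.

```shell
# Hypothetical helper: read one value from a 'name = value' parameters file.
get_param() {
  # $1: parameters file, $2: parameter name
  # Keep what follows '=' up to a '#' comment, then trim trailing blanks
  sed -n -E "s/^[[:space:]]*$2[[:space:]]*=[[:space:]]*([^#]*).*/\1/p" "$1" |
    sed -E 's/[[:space:]]+$//' | head -n 1
}

# Throw-away file standing in for EXPERIMENTparameters.txt
demo=$(mktemp)
cat > "$demo" <<'EOF'
# Experiment name
ExpName = WRFsensSFC
HPC=hydra
nameRSTfile = wrfrst_d01_ # restart file
## Number of mpi procs
Nmpiprocs = 16
EOF

exp=$(get_param "$demo" ExpName)
hpc=$(get_param "$demo" HPC)
rst=$(get_param "$demo" nameRSTfile)
np=$(get_param "$demo" Nmpiprocs)
rm -f "$demo"
echo "$exp $hpc $rst $np"
```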