CDXWRFopt


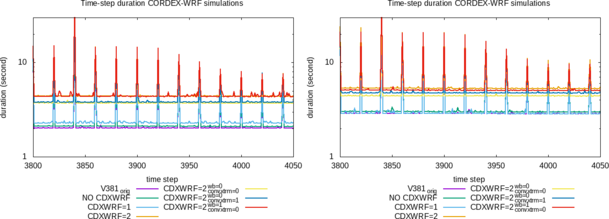

Figure 2: Elapsed times for each individual time-step integration on nested domain d02 (time steps from number 3800 [simulated date 2014-01-01 15:35:00 UTC] to 4050 [2014-01-01 16:37:30 UTC]). The model was run with different module configurations; see text for more details. Longer time steps are related to the activation of the short/long-wave radiation scheme (every 5 minutes). Left: WRF compiled with ‘ifort’; right: WRF compiled with ‘gcc’.


Latest revision as of 18:41, 27 Feb 2019

Optimization

Regional climate dynamical downscaling experiments, like the ones under the scope of CORDEX, require long continuous simulations which consume large amounts of HPC resources over a long period of time. Therefore, a series of tests was carried out to investigate the impact on integration time when the module is activated. The first version of the module (v1.0) was known to slow down time-step integration by about 40% (highly dependent on the HPC, model configuration and domain specifications). To improve model performance when the module is activated, the module was upgraded to version v1.1. Starting with this version, a series of code optimizations were introduced, together with a pre-compilation flag (CDXWRF). By controlling the module through this flag (instead of via regular WRF namelist options), two main goals were achieved: (1) the number of variables kept in memory during model execution was reduced, and (2) the number of conditions to be checked (mainly by avoiding IF statements) and of calculations performed at each integration step was reduced as well.

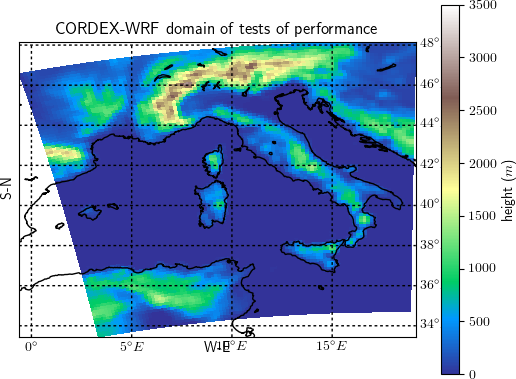

A domain, shown in figure 1, was set up to perform short runs (5 days) to check how the performance of the WRF model changes when the module is activated in its different possible configurations. To avoid non-homogeneous communication among the cluster nodes (which would affect the analysis), all the simulations were executed on a single node, with the WRF model compiled with the Intel and GNU Fortran compilers. Tests were performed on the HPC Fram, part of the Norwegian academic HPC infrastructure. Fram is based on Lenovo NeXtScale nx360 nodes with two CPU types: Intel E5-2683v4 2.1 GHz and Intel E7-4850v4 2.1 GHz.

The execution time is calculated as the mean elapsed time per time step over the 5-day model integration. The elapsed time of each simulation step is available from the standard output of the model run (the rsl.error.0000 WRF ASCII file). In this file, WRF users can find the elapsed time for every time step of the model and for every simulation domain. The peaks of slower time steps (see figure 2) coincide with input/output file operations, with differences between day and night regimes, and with physical schemes (mainly the radiative scheme) that are activated at a given frequency (e.g. radt). A 5-day simulation with a time step of 15 seconds yields 28800 time steps. This sample of 28800 time steps is considered large enough to be representative of the mean time step of the whole simulation.
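As an illustration of how such a mean could be extracted, here is a minimal Python sketch. It assumes timing lines of the form `Timing for main: time <date> on domain <n>: <seconds> elapsed seconds`; that pattern, the function names and the sample lines are assumptions made for this example and should be checked against an actual rsl.error.0000 file.

```python
import re
from statistics import mean

# Assumed layout of a WRF timing line (verify against your own log), e.g.:
# "Timing for main: time 2014-01-01_00:00:15 on domain   1:    2.00000 elapsed seconds"
TIMING_RE = re.compile(
    r"Timing for main: time (\S+) on domain\s+(\d+):\s+([0-9.]+) elapsed seconds"
)


def mean_step_times(lines):
    """Return {domain number: mean elapsed seconds per time step}."""
    per_domain = {}
    for line in lines:
        match = TIMING_RE.search(line)
        if match:  # lines that are not time-step timings are skipped
            domain = int(match.group(2))
            per_domain.setdefault(domain, []).append(float(match.group(3)))
    return {domain: mean(values) for domain, values in per_domain.items()}


def expected_steps(days, dt_seconds):
    """Number of time steps in a run of `days` days with a step of `dt_seconds`."""
    return days * 86400 // dt_seconds


# Hypothetical sample lines, not taken from a real run
sample = [
    "Timing for main: time 2014-01-01_00:00:15 on domain   1:    2.00000 elapsed seconds",
    "Timing for Writing wrfout_d01_2014-01-01_00:00:00 for domain        1:    1.50000 elapsed seconds",
    "Timing for main: time 2014-01-01_00:00:30 on domain   1:    4.00000 elapsed seconds",
]

print(mean_step_times(sample))
print(expected_steps(5, 15))
```

With a real log, the lines would come from something like `open("rsl.error.0000")`. Keeping the means per domain keeps parent and nested domains apart, in the same way figure 2 shows only d02.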

Table 1 describes the different configurations and namelist options used in this performance test. The first simulation (labeled v381orig), which is used as the reference, is the simulation with the original version of the model (here version 3.8.1) without the module. The other simulations are: activation of the module (setting CORDEXDIAG) without setting the pre-compilation parameter CDXWRF (labeled NOCDXWRF); with pre-compilation parameter CDXWRF=1 (CDXWRF1); and with pre-compilation parameter CDXWRF=2 and computing all extra calculations (CDXWRF2). Finally, three more simulations (with CDXWRF=2) were made: without any extra calculation (CDXWRF2_00), without calculation of the extra water-budget terms (CDXWRF2_01), and without extremes from convection indices (CDXWRF2_10). Results might present some inconsistencies, because the computation of certain diagnostics depends on the stability at each grid point, which might vary from run to run, and because of the workload of the HPC.

Results show that all the simulations where the module has been activated (except CDXWRF1 with gcc) are slower than the simulation with the original version of the code (v381orig, <t> = 2.4248 [ifort], 3.5174 [gcc] s). The simulation with version 1.3 of the module without the pre-compilation flag CDXWRF (NOCDXWRF, <t> = 2.5058, 3.6486 s) is the second fastest. The simulation becomes slower when all the extra calculations are performed (CDXWRF2, <t> = 4.8296, 5.9958 s). The heaviest part of the module is related to the water-budget computation (wb_diag=1): compared to the simulation without extra calculations (CDXWRF2_00, <t> = 4.2038, 5.0736 s), the mean time step increases by only about 1, 9% (<tstep>CDXWRF2_01 / <tstep>CDXWRF2_00 for ifort, gcc) when only the statistics of extreme convective indices are activated (CDXWRF2_01, <t> = 4.2388, 5.4120 s), and by 27, 19% (<tstep>CDXWRF2_10 / <tstep>CDXWRF2_00 for ifort, gcc) when only the water-budget terms are included (CDXWRF2_10, <t> = 4.8510, 5.7534 s). The reduction in mean time step for CDXWRF1 with gcc is presumably related to a period of very low workload on the HPC Fram.
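The relative increases can be recomputed from the mean time steps in the results table. The sketch below is only a cross-check, and its function names are chosen for this example. It computes two measures: the ratio to CDXWRF2_00 given in the text, and the difference expressed in percent of the v381orig mean time step. The figures quoted in the text (1, 9% and 27, 19%) appear to sit closer to the second measure.

```python
# Mean time steps (s) copied from the results table, as (ifort, gcc)
TSTEP = {
    "CDXWRF2_00": (4.2038, 5.0736),
    "CDXWRF2_01": (4.2388, 5.4120),
    "CDXWRF2_10": (4.8510, 5.7534),
}
REFERENCE = (2.4248, 3.5174)  # v381orig


def ratio_increase(run, base="CDXWRF2_00"):
    """Percent increase of <tstep> of `run` relative to `base`, per compiler."""
    return tuple(
        round(100.0 * (r / b - 1.0), 2) for r, b in zip(TSTEP[run], TSTEP[base])
    )


def point_increase(run, base="CDXWRF2_00"):
    """Increase of `run` over `base` in percent of the v381orig mean time step."""
    return tuple(
        round(100.0 * (r - b) / ref, 2)
        for r, b, ref in zip(TSTEP[run], TSTEP[base], REFERENCE)
    )


for run in ("CDXWRF2_01", "CDXWRF2_10"):
    print(run, ratio_increase(run), point_increase(run))
```

For CDXWRF2_01 the ratio measure gives roughly 0.8% (ifort) and 6.7% (gcc), while the second measure gives roughly 1.4 and 9.6. For CDXWRF2_10 the values are about 15.4% and 13.4% against 26.7 and 19.3. Under either measure, the water-budget terms cost more than the extreme convective indices.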

These results are not conclusive (the tests should be repeated on other HPC resources, domains and compilers), but they provide a first insight into how the number of variables included during the integration (in the derived type grid) has an important effect on model performance, by reducing or increasing the required amount of memory. CDXWRF1 and CDXWRF2_00 perform the same number of diagnostic computations, but the mean time step in CDXWRF2_00 is roughly 1.5 times longer (about 56% with ifort and 45% with gcc, from the table values), because in the CDXWRF2_00 case all the additional variables are defined but not diagnosed.

| Domain characteristic | Value |
|---|---|
| projection | rotated lat-lon |
| central point | N 38.05220°, 1.07623° E |
| refx,y | 118, 99 |
| polelat,lon | N 39.25000°, 18.00000° E |
| standard longitude | 18.00000° W |
| grid-points (d01, d02) | 360 × 351, 491 × 441 |
| resolution (d01, d02) | 0.13750° × 0.13750°, 0.0275° × 0.0275° |
| vertical levels | 50 |
| time-step | 15 s |
| d01 grid point ref. for d02 | 118, 99 |

| label | description | ifort <tstep> (s) | ifort diff. (%) | gcc <tstep> (s) | gcc diff. (%) |
|---|---|---|---|---|---|
| v381orig | original WRF 3.8.1 version of the code | 2.4248 | - | 3.5174 | - |
| NOCDXWRF | without CDXWRF | 2.5058 | 3.34 | 3.6486 | 3.73 |
| CDXWRF1 | CDXWRF=1 | 2.6938 | 11.09 | 3.5070 | -0.27 |
| CDXWRF2 | CDXWRF=2 | 4.8296 | 99.17 | 5.9958 | 70.46 |
| CDXWRF2_00 | CDXWRF=2 wb_diag=0 & convxtrm_diag=0 | 4.2038 | 73.37 | 5.0736 | 44.24 |
| CDXWRF2_01 | CDXWRF=2 wb_diag=0 & convxtrm_diag=1 | 4.2388 | 74.81 | 5.4120 | 53.86 |
| CDXWRF2_10 | CDXWRF=2 wb_diag=1 & convxtrm_diag=0 | 4.8510 | 100.06 | 5.7534 | 63.57 |
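The diff. (%) columns appear to be the relative increase of the mean time step with respect to v381orig. The short sketch below recomputes them from the tabulated means under that assumption; the function and dictionary names are illustrative only.

```python
# Mean time steps (s) from the table above
V381ORIG = {"ifort": 2.4248, "gcc": 3.5174}
RUNS = {
    "NOCDXWRF": {"ifort": 2.5058, "gcc": 3.6486},
    "CDXWRF1": {"ifort": 2.6938, "gcc": 3.5070},
    "CDXWRF2": {"ifort": 4.8296, "gcc": 5.9958},
    "CDXWRF2_00": {"ifort": 4.2038, "gcc": 5.0736},
    "CDXWRF2_01": {"ifort": 4.2388, "gcc": 5.4120},
    "CDXWRF2_10": {"ifort": 4.8510, "gcc": 5.7534},
}


def slowdown_percent(tstep, reference):
    """Relative change (%) of a mean time step with respect to a reference."""
    return 100.0 * (tstep / reference - 1.0)


for label, means in RUNS.items():
    diffs = {
        compiler: round(slowdown_percent(value, V381ORIG[compiler]), 2)
        for compiler, value in means.items()
    }
    print(label, diffs)
```

The recomputed values agree with the table to within rounding, except for the gcc CDXWRF1 entry, which comes out near -0.30 rather than -0.27. This may reflect rounding of the underlying means.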

Back to the main page CDXWRF