WRF

(→WPS) |

(→WPS) |

||

| Línea 169: | Línea 169: | ||

== WPS == |

== WPS == |

||

| − | Let's assume that we work in a folder called <code>WORKDIR</code> (at users' <code>${HOME}</code> at <code>hydra</code>) and we will work with a given WRF version located at <code>WRFversion</code>. As example, let's create a two nested domain at ''30 km'' for the entire South America and a second one at ''4.285 km'' for Córdoba mountain ranges (see the [[WRF/namelist.wps]]) |

+ | Let's assume that we work in a folder called <code>$WORKDIR</code> (at users' <code>${HOME}</code> at <code>hydra</code>) and we will work with a given WRF version located at <code>$WRFversion</code>. As example, let's create a two nested domain at ''30 km'' for the entire South America and a second one at ''4.285 km'' for Córdoba mountain ranges (see the [[WRF/namelist.wps]]) |

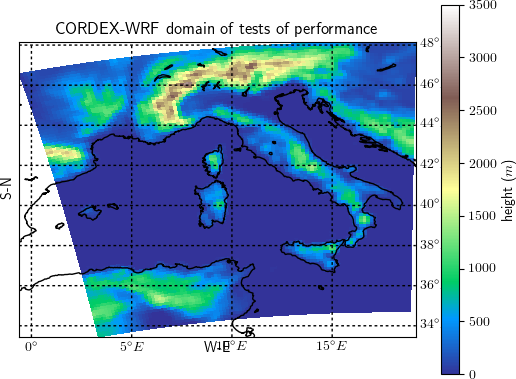

[[File:CDXWRF_domain_test.png|frame|50px|WRF two domain configuration 30 and 4.285 km]] |

[[File:CDXWRF_domain_test.png|frame|50px|WRF two domain configuration 30 and 4.285 km]] |

||

| Línea 178: | Línea 178: | ||

* Creation of a folder for the geogrid section |

* Creation of a folder for the geogrid section |

||

| − | <pre style="shell">$ cd WORKDIR |

+ | <pre style="shell">$ cd $WORKDIR |

$ mkdir geogrid |

$ mkdir geogrid |

||

$ cd geogrid |

$ cd geogrid |

||

| Línea 185: | Línea 185: | ||

* Take the necessary files (do not forget <code>opt_geogrid_tbl_path = './'</code>, into <code>&geogrid</code> section) in namelist !!): |

* Take the necessary files (do not forget <code>opt_geogrid_tbl_path = './'</code>, into <code>&geogrid</code> section) in namelist !!): |

||

<pre style="shell"> |

<pre style="shell"> |

||

| − | $ ln -s WRFversion/WPS/geogrid/geogrid.exe ./ |

+ | $ ln -s $WRFversion/WPS/geogrid/geogrid.exe ./ |

| − | $ ln -s WRFversion/WPS/geogrid/GEOGRID.TBL.ARW ./GEOGRID.TBL |

+ | $ ln -s $WRFversion/WPS/geogrid/GEOGRID.TBL.ARW ./GEOGRID.TBL |

| − | $ cp WRFversion/WPS/geogrid/namelist.wps ./ |

+ | $ cp $WRFversion/WPS/geogrid/namelist.wps ./ |

</pre> |

</pre> |

||

| Línea 211: | Línea 211: | ||

** <code>HGT_M</code>: orographical height |

** <code>HGT_M</code>: orographical height |

||

| − | * '''ungrib''': unpack grib files |

+ | === ungrib === |

| − | ** Creation of the folder (from <code>$WORKDIR</code>) |

+ | Prepare and unpack grib files from the GCM forcing |

| − | <pre>$ mkdir ungrib |

+ | |

| − | $ cd ungrib</pre> |

+ | * Creation of the folder (from <code>$WORKDIR</code>) |

| − | ** Linking necessary files from compiled source |

+ | |

| − | <pre>$ ln -s /share/WRF/WRFV3.9.1/ifort/dmpar/WPS/ungrib/ungrib.exe ./ |

+ | <pre style="shell"> |

| − | $ ln -s /share/WRF/WRFV3.9.1/ifort/dmpar/WPS/link_grib.csh ./</pre> |

+ | $ mkdir ungrib |

| − | ** Creation of a folder for the necessary GRIB files and linking the necessary files (4 files per month) |

+ | $ cd ungrib |

| − | <pre>$ mkdir GribDir |

+ | </pre> |

| + | |||

| + | * Linking necessary files from compiled source |

||

| + | <pre stye="shell"> |

||

| + | $ ln -s $WRFversion/WPS/ungrib/ungrib.exe ./ |

||

| + | $ ln -s $WRFversion/WPS/link_grib.csh ./ |

||

| + | $ cp ../geogrid/namelist.wps ./ |

||

| + | </pre> |

||

| + | |||

| + | * Edit the namelist <code>namelist.wps</code> to set up the right period of simulation and frequency of forcing (you can also use nano, emacs, ...) |

||

| + | <pre style="shell"> |

||

| + | $ vim namelist.wps |

||

| + | </pre> |

||

| + | |||

| + | * Creation of a folder for the necessary GRIB files and linking the necessary files (eg. 4 files per month) from a folder with all the necesary data called $inDATA |

||

| + | <pre style="shell"> |

||

| + | $ mkdir GribDir |

||

$ cd GribDir |

$ cd GribDir |

||

| − | $ ln -s /share/DATA/re-analysis/ERA-Interim/*201212*.grib ./ |

+ | $ ln -s $inDATA/*201212*.grib ./ |

| − | $ cd ..</pre> |

+ | $ cd .. |

| − | ** Re-link files for WRF with its own script |

+ | </pre> |

| − | <pre>./link_grib.csh GribDir/*</pre> |

+ | |

| − | ** Should appear: |

+ | * Re-link files for WRF with its own script |

| − | <pre>$ ls GRIBFILE.AA* |

+ | <pre style="shell"> |

| − | GRIBFILE.AAA\ GRIBFILE.AAB GRIBFILE.AAC GRIBFILE.AAD</pre> |

+ | ./link_grib.csh GribDir/* |

| − | ** We need to provide equivalences of the GRIB codes to the real variables. WRF comes with already defined GRIB equivalencies from different sources in folder <code>Variable_Tables</code>. In our case we use ECMWF ERA-Interim at pressure levels, thus we link |

+ | </pre> |

| − | <pre>ln -s /share/WRF/WRFV3.9.1/ifort/dmpar/WPS/ungrib/Variable_Tables/Vtable.ERA-interim.pl ./Vtable</pre> |

+ | |

| − | ** We need to take the domain file and get the right dates |

+ | * Should appear: |

| − | <pre>$ cp ../geogrid/nameslist.wps ./</pre> |

+ | <pre style="shell"> |

| − | ** Files can be unpacked |

+ | $ ls GRIBFILE.AA* |

| − | <pre>./ungrib.exe >& run_ungrib.log</pre> |

+ | GRIBFILE.AAA\ GRIBFILE.AAB GRIBFILE.AAC GRIBFILE.AAD |

| − | ** If everything went fine, should appear: |

+ | </pre> |

| + | |||

| + | * We need to provide equivalences of the GRIB codes to the real variables. WRF comes with already defined GRIB equivalencies from different sources in folder <code>Variable_Tables</code>. In this example we use ECMWF ERA-Interim at pressure levels, thus we link |

||

| + | <pre style="shell"> |

||

| + | $ ln -s $WRFversion/WPS/ungrib/Variable_Tables/Vtable.ERA-interim.pl ./Vtable |

||

| + | </pre> |

||

| + | |||

| + | * We need to take the domain file and get the right dates |

||

| + | <pre style="shell"> |

||

| + | $ cp ../geogrid/nameslist.wps ./ |

||

| + | </pre> |

||

| + | |||

| + | * Files can be unpacked using the PBS job file (user's email has to be changed) |

||

| + | <pre style="shell"> |

||

| + | $ cp /share/WRF/launch_ungrib_intel.pbs ./ |

||

| + | $ qsub launch_ungrib_intel.pbs |

||

| + | </pre> |

||

| + | |||

| + | * If everything went fine, should appear: |

||

<pre>FILE:[YYYY]-[MM]-[DD]_[HH]</pre> |

<pre>FILE:[YYYY]-[MM]-[DD]_[HH]</pre> |

||

* And... |

* And... |

||

| Línea 245: | Línea 245: | ||

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!! |

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!! |

||

! Successful completion of ungrib. ! |

! Successful completion of ungrib. ! |

||

| − | !!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!</pre> |

+ | !!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!! |

| + | </pre> |

||

| + | |||

| + | === metgrid horizontal === |

||

| + | Horizontal interpolation of atmospheric forcing data at the domain of simulation |

||

| + | |||

| + | * Creation of the folder (from <code>$WORKDIR</code>) |

||

| + | |||

| + | <pre style="shell"> |

||

| + | $ mkdir metgrid |

||

| + | $ cd metgrid |

||

| + | </pre> |

||

| + | |||

| + | * Getting necessary files (do not forget <code> opt_metgrid_tbl_path = './'</code>, into <code>&metgrid</code> section in namelist !! to avoid: <code>ERROR: Could not open file METGRID.TBL</code>) |

||

| + | |||

| + | <pre style="shell"> |

||

| + | $ ln -s $WRFversion/WPS/metgrid/metgrid.exe ./ |

||

| + | $ ln -s $WRFversion/WPS/metgrid/METGRID.TBL.ARW ./METGRID.TBL |

||

| + | </pre> |

||

| + | |||

| + | * Getting the <code>ungrib</code> output |

||

| + | <pre style="shell"> |

||

| + | $ ln -s ../ungrib/FILE* ./ |

||

| + | </pre> |

||

| − | * '''metgrid''' horizontal interpolation of atmospheric forcing data at the domain of simulation |

||

| − | ** Creation of the folder (from <code>$WORKDIR</code>) |

||

| − | <pre>$ mkdir metgrid |

||

| − | $ cd metgrid</pre> |

||

| − | ** Getting necessary files (do not forget <code> opt_metgrid_tbl_path = './'</code>, into <code>&metgrid</code> section in namelist !! to avoid: <code>ERROR: Could not open file METGRID.TBL</code>) |

||

| − | <pre>$ ln -s /share/WRF/WRFV3.9.1/ifort/dmpar/WPS/metgrid/metgrid.exe ./ |

||

| − | $ ln -s /share/WRF/WRFV3.9.1/ifort/dmpar/WPS/metgrid/METGRID.TBL.ARW ./METGRID.TBL</pre> |

||

| − | ** Getting the <code>ungrib</code> output |

||

| − | <pre>ln -s ../ungrib/FILE* ./</pre> |

||

** Link the domains of simulation |

** Link the domains of simulation |

||

| − | <pre>$ ln -s ../geogrid/geo_em.d* ./</pre> |

+ | <pre style="shell"> |

| + | $ ln -s ../geogrid/geo_em.d* ./ |

||

| + | </pre> |

||

| + | |||

** Link the namelist from <code>ungrib</code> (to make sure we are using the same!) |

** Link the namelist from <code>ungrib</code> (to make sure we are using the same!) |

||

| − | <pre>$ ln -s ../ungrib/namelist.wps ./</pre> |

+ | <pre style="shell"> |

| − | ** Get the PBS (job queue script) to run the <code>metrid.exe</code> |

+ | $ ln -s ../ungrib/namelist.wps ./ |

| − | <pre>$ cp /share/WRF/run_metgrid.pbs ./</pre> |

+ | </pre> |

| − | ** And run it |

+ | |

| − | <pre>qsub run_metgrid.pbs</pre> |

+ | * Get the PBS (job queue script) to run the <code>metrid.exe</code> (remember to edit the user's email in the pbs job) |

| − | ** If everything went fine one should have |

+ | <pre style="shell"> |

| − | <pre>met_em.d[nn].[YYYY]-[MM]-[DD]_[HH]:[MI]:[SS].nc</pre> |

+ | $ cp /share/WRF/launch_metgrid_intel.pbs ./ |

| − | ** And... |

+ | $ qsub launch_metgrid_intel.pbs |

| − | <pre>$ tail run_metgrid.log |

+ | </pre> |

| + | |||

| + | * If everything went fine one should have |

||

| + | <pre> |

||

| + | met_em.d[nn].[YYYY]-[MM]-[DD]_[HH]:[MI]:[SS].nc |

||

| + | </pre> |

||

| + | |||

| + | * And... |

||

| + | <pre style="shell"> |

||

| + | $ tail run_metgrid.log |

||

(...) |

(...) |

||

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!! |

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!! |

||

! Successful completion of metgrid. ! |

! Successful completion of metgrid. ! |

||

| − | !!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!</pre> |

+ | !!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!! |

| + | </pre> |

||

| + | |||

| + | === real === |

||

| + | Vertical interpolation of atmospheric forcing at the domain of simulation |

||

| + | |||

| + | * Creation of the folder (from <code>$WORKDIR</code>) |

||

| + | <pre style="shell"> |

||

| + | $ mkdir run |

||

| + | $ cd run |

||

| + | </pre> |

||

| + | |||

| + | * Link all the necessary files from WRF (it already links all the necessary to run the model) |

||

| + | <pre style="shell"> |

||

| + | $ ln -s $WRFversion/WRF/run/* ./ |

||

| + | </pre> |

||

| + | |||

| + | * Remove and copy the configuration file (<code>namelist.input</code>) |

||

| + | <pre style="shell"> |

||

| + | $ rm namelist.input |

||

| + | $ cp $WRFversion/WRF/run/namelist.input ./ |

||

| + | </pre> |

||

| + | |||

| + | * Edit the file and prepare the configuration for the run (re-adapt domain, physics, dates, output....). See an example for the two nested domain here [[WRF/namelist.input]] |

||

| + | |||

| + | <pre style="shell"> |

||

| + | $ vim namelist.input |

||

| + | </pre> |

||

| + | |||

| + | * Linking the <code>metgrid</code> generated files |

||

| + | <pre style="shell"> |

||

| + | $ ln -s ../metgrid/met_em.d*.nc ./ |

||

| + | </pre> |

||

| + | |||

| + | * Getting the PBS job script for <code>real.exe</code> |

||

| − | * '''real''' vertical interpolation of atmospheric forcing at the domain of simulation |

||

| − | ** Creation of the folder (from <code>$WORKDIR</code>) |

||

| − | <pre>$ mkdir run |

||

| − | $ cd run</pre> |

||

| − | ** Link all the necessary files from WRF (it already links all the necessary to run the model) |

||

| − | <pre>$ ln -s /share/WRF/WRFV3.9.1/ifort/dmpar/WRFV3/run/* ./</pre> |

||

| − | ** Remove and copy the configuration file (<code>namelist.input</code>) |

||

| − | <pre>$ rm namelist.input |

||

| − | $ cp /share/WRF/WRFV3.9.1/ifort/dmpar/WRFV3/run/namelist.input ./</pre> |

||

| − | ** Edit the file and prepare the configuration for the run (re-adapt domain, physics, dates, output....). See an example for the two nested domain here [[WRF/namelist.input]] |

||

| − | <pre>$ vim namelist.input</pre> |

||

| − | ** Linking the <code>metgrid</code> generated files |

||

| − | <pre>ln -s ../metgrid/met_em.d*.nc ./</pre> |

||

| − | ** Getting the PBS job script for <code>real.exe</code> |

||

<pre>$ cp /share/WRF/run_real.pbs ./</pre> |

<pre>$ cp /share/WRF/run_real.pbs ./</pre> |

||

** Getting a bash script to run executables in hydra (there is an issue with <code>ulimit</code> which does not allow to run with more than one node) |

** Getting a bash script to run executables in hydra (there is an issue with <code>ulimit</code> which does not allow to run with more than one node) |

||

Revision as of 11:02, 22 May 2023


Model description

The Weather Research and Forecasting model (WRF; Skamarock, 2008) is a limited-area atmospheric model developed by a consortium of American institutions with contributions from the whole community. It is a non-hydrostatic primitive-equations model used in a large variety of research areas.

Technical aspects of the model (v 4.0) are provided in this pdf

WRF and CIMA

CIMA has an HPC cluster called hydra for computationally intensive modelling with WRF.

hydra

The HPC is part of the Servicio Nacional de Cómputo de Alta Capacidad (SNCAD).

It was reinstalled and reorganized in January 2022 and adapted to the configurations commonly used at other international research centers.

Selection of compilers & libraries

It does not have the module software to manage compilers and libraries, but it does have a script which allows users to select the compiler. This is done at the terminal by sourcing the script: source /opt/load-libs.sh

Example selecting Intel 2021.4.0, MPICH and NetCDF 4:

$ source /opt/load-libs.sh
Available libraries:
1) INTEL 2021.4.0: MPICH 3.4.2, NetCDF 4, HDF5 1.10.5, JASPER 2.0.33
2) INTEL 2021.4.0: OpenMPI 4.1.2, NetCDF 4, HDF5 1.10.5, JASPER 2.0.33
3) GNU 10.2.1: MPICH 3.4.2, NetCDF 4, HDF5 1.10.5, JASPER 2.0.33
4) GNU 10.2.1: OpenMPI 4.1.2, NetCDF 4, HDF5 1.10.5, JASPER 2.0.33
0) Exit
Choose an option: 1
The following libraries, compiled with Intel 2021.4.0 compilers, were loaded:
* MPICH 3.4.2
* NetCDF 4
* HDF5 1.10.5
* JASPER 2.0.33
To change it please logout and login again.
To load this libraries from within a script add this line to script:
source /opt/load-libs.sh 1
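For job scripts, the message above suggests passing the menu number as an argument so that no interactive prompt appears. A minimal sketch of a guarded call follows; the helper name `load_libs` and its optional second argument are illustrative assumptions, not something provided on hydra.

```shell
# Load a compiler/library set without the interactive menu.
# `load_libs` is a hypothetical helper; only the script path and the
# "pass the option number as argument" usage come from the text above.
load_libs() {
    option="$1"                        # menu number, e.g. 1 = Intel + MPICH
    script="${2:-/opt/load-libs.sh}"   # default path quoted in the text
    if [ ! -r "$script" ]; then
        echo "cannot read $script" >&2
        return 1
    fi
    # Source the script in the current shell so the environment persists.
    . "$script" "$option"
}

# Typical use at the top of a job script:
# load_libs 1 || exit 1
```

Failing early when the script is not readable avoids running an executable with the wrong libraries loaded.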

Compilation

Multiple versions are available, pre-compiled, in hydra, with the following structure:

/opt/wrf/WRF-{version}/{compiler}/{compiler-version}/{architecture}/{WRF/WPS}
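As an illustration of how this layout can be used in scripts, the sketch below assembles the path from its components. The helper name `wrf_install_path` is hypothetical; the component values in the example are the ones used elsewhere on this page.

```shell
# Build the path of a pre-compiled WRF or WPS tree from its components,
# following the directory layout quoted above.
# `wrf_install_path` is a hypothetical helper, not something shipped on hydra.
wrf_install_path() {
    version="$1"; compiler="$2"; compiler_version="$3"; arch="$4"; part="$5"
    case "$part" in
        WRF|WPS) ;;
        *) echo "part must be WRF or WPS" >&2; return 1 ;;
    esac
    printf '/opt/wrf/WRF-%s/%s/%s/%s/%s\n' \
        "$version" "$compiler" "$compiler_version" "$arch" "$part"
}

wrf_install_path 4.3.3 intel 2021.4.0 dm+sm WRF
# prints /opt/wrf/WRF-4.3.3/intel/2021.4.0/dm+sm/WRF
```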

Forcings

Atmospheric forcings

This will change once papa-deimos is fully operational

Provide the atmospheric conditions to the model at a given date.

- There is a shared space called /share
- At hydra all forcings are at:

/share/DATA/

- ERA-Interim

- Part of the ERA-Interim forcings are available at:

/share/DATA/re-analysis/ERA-Interim/

- Global monthly files at 0.75° horizontal resolution and all time steps (00, 06, 12, 18) are labelled as:
  - ERAI_pl[YYYY][MM]_[var1]-[var2].grib: pressure-level variables (all levels). GRIB codes:
    - 129: geopotential
    - 157: relative humidity
    - 130: temperature
    - 131: u-wind
    - 132: v-wind
  - ERAI_sfc[YYYY][MM].grib: all surface-level variables (step 0)

- To download data:
  - Generate the files from the ECMWF ERA-Interim web page
  - Go to the folder in hydra

$ cd /share/DATA/re-analysis/ERA-Interim/

  - Get the file (using the link from the ECMWF web page, bottom right on `Download grib'), e.g.:

$ wget https://stream.ecmwf.int/data/atls05/data/data02/scratch/_mars-atls05-a82bacafb5c306db76464bc7e824bb75-zn7P44.grib

  - Rename the file according to its content

$ mv _mars-atls05-a82bacafb5c306db76464bc7e824bb75-zn7P44.grib ERAI_sfc201302.grib
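The labelling convention for the ERA-Interim files can be scripted so that downloaded files are renamed consistently. The following is only a sketch: the helper names `erai_sfc_file` and `erai_pl_file` are hypothetical, and the two variable labels of the pressure-level files are passed through as given, since their exact form is not specified here.

```shell
# Compose ERA-Interim file names following the labelling described above.
# Both helper names are hypothetical.
erai_sfc_file() {
    # $1 = year (YYYY), $2 = month (1-12, with or without a leading zero)
    printf 'ERAI_sfc%04d%02d.grib\n' "$1" "${2#0}"
}

erai_pl_file() {
    # $3 and $4 = the [var1] and [var2] labels, used as given
    printf 'ERAI_pl%04d%02d_%s-%s.grib\n' "$1" "${2#0}" "$3" "$4"
}

erai_sfc_file 2013 2    # prints ERAI_sfc201302.grib
```

Stripping a leading zero from the month before formatting avoids values such as 08 being read as an invalid octal number by printf.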

- ERA5

- Part of the ERA5 forcings are available at:

/share/DATA/re-analysis/ERA5/

- NCEP-NNRP1

- The full NCEP-NNRP1 forcings are available at (NOTE: no land data!):

/share/DATA/re-analysis/NCEP_NNRP/

Morphological forcings

Provide the geomorphological information for the domain of simulation: topography, land-use, vegetation-types, etc...

- WRF comes with a huge amount of data and sources at different resolutions. At hydra everything is already available at:

/share/GEOG/

- They are ready to be used

GENERIC Model use

WRF has two main parts:

- WPS: generation of the domain and of the initial and boundary conditions. It runs 4 programs:
  - geogrid: domain generation
  - ungrib: unpack atmospheric forcing grib files
  - metgrid: horizontal interpolation of the unpacked atmospheric forcing files at the domain of simulation
  - real: generation of the initial and boundary conditions using metgrid output (real.exe is built with WRF itself and is run from the WRF run folder)
- WRF: the model itself: wrf.exe

At hydra all the code is already compiled at /opt/wrf (with folders WPS and WRFV3)

As an example, WRF v4.3.3 compiled with Intel compilers with distributed and shared memory:

/opt/wrf/WRF-4.3.3/intel/2021.4.0/dm+sm/WRF

WPS

Let's assume that we work in a folder called $WORKDIR (in the user's ${HOME} at hydra) and that we will work with a given WRF version located at $WRFversion. As an example, let's create two nested domains: one at 30 km covering all of South America and a second one at 4.285 km for the Córdoba mountain ranges (see WRF/namelist.wps).

Figure: WRF two-domain configuration at 30 and 4.285 km (CDXWRF_domain_test.png)

geogrid

This is used to generate the domain

- Creation of a folder for the geogrid section

$ cd $WORKDIR
$ mkdir geogrid
$ cd geogrid

- Take the necessary files (do not forget to set opt_geogrid_tbl_path = './' in the &geogrid section of the namelist!):

$ ln -s $WRFversion/WPS/geogrid/geogrid.exe ./
$ ln -s $WRFversion/WPS/geogrid/GEOGRID.TBL.ARW ./GEOGRID.TBL
$ cp $WRFversion/WPS/geogrid/namelist.wps ./
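Since a missing opt_geogrid_tbl_path is easy to overlook, a quick check before submitting the job can help. The sketch below is an assumption-laden illustration: `namelist_has_option` is a hypothetical helper, and the demo namelist fragment is a throw-away file written only for the example.

```shell
# Check that a namelist sets a given option before submitting the job.
# `namelist_has_option` is a hypothetical helper, not part of WPS.
namelist_has_option() {
    file="$1"; option="$2"
    # Match "option =" at the start of a line, ignoring leading blanks,
    # so that commented lines (starting with !) are not counted.
    grep -q "^[[:space:]]*${option}[[:space:]]*=" "$file"
}

# Self-contained demo on a throw-away namelist fragment.
demo_dir=$(mktemp -d)
cat > "$demo_dir/namelist.wps" <<'EOF'
&geogrid
 geog_data_path = '/share/GEOG/'
 opt_geogrid_tbl_path = './'
/
EOF

if namelist_has_option "$demo_dir/namelist.wps" opt_geogrid_tbl_path; then
    echo "opt_geogrid_tbl_path is set"
else
    echo "opt_geogrid_tbl_path is missing from namelist.wps" >&2
fi
```

The same helper can be pointed at opt_metgrid_tbl_path when preparing the metgrid step.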

- Domain configuration is done via namelist.wps (more information at: WPS user guide)

$ vim namelist.wps

Once it is defined, run it (all the necessary PBS script files are available in /share/WRF; you only need to change the number of processes and the user's email):

$ cp /share/WRF/launch_geogrid_intel.pbs ./
$ qsub launch_geogrid_intel.pbs

- It will create the domain files, one for each domain

geo_em.d[nn].nc

- Some variables from the geogrid files:
  - LANDMASK: sea-land mask
  - XLAT_M: latitude on mass point
  - XLONG_M: longitude on mass point
  - HGT_M: orographical height
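Because geogrid writes one geo_em.d[nn].nc file per domain, a simple sanity check is to compare the number of files with max_dom in namelist.wps. This is only a sketch: both helper names are hypothetical, and it assumes max_dom is written on a single line as an integer.

```shell
# Compare the number of geo_em.d[nn].nc files with max_dom in namelist.wps.
# Both function names are hypothetical.
namelist_max_dom() {
    # Print the integer assigned to max_dom (first match only).
    sed -n 's/^[[:space:]]*max_dom[[:space:]]*=[[:space:]]*\([0-9][0-9]*\).*/\1/p' "$1" | head -n 1
}

count_geo_em() {
    # Count geo_em.d[nn].nc files in directory $1.
    n=0
    for f in "$1"/geo_em.d[0-9][0-9].nc; do
        if [ -e "$f" ]; then n=$((n + 1)); fi
    done
    echo "$n"
}

# Demo with empty stand-in files in a scratch directory.
demo_dir=$(mktemp -d)
printf '&share\n max_dom = 2,\n/\n' > "$demo_dir/namelist.wps"
: > "$demo_dir/geo_em.d01.nc"
: > "$demo_dir/geo_em.d02.nc"

expected=$(namelist_max_dom "$demo_dir/namelist.wps")
found=$(count_geo_em "$demo_dir")
if [ "$found" -eq "$expected" ]; then
    echo "all $expected domain files present"
else
    echo "expected $expected geo_em files, found $found" >&2
fi
```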

ungrib

Prepare and unpack grib files from the GCM forcing

- Creation of the folder (from $WORKDIR)

$ mkdir ungrib
$ cd ungrib

- Linking necessary files from compiled source

$ ln -s $WRFversion/WPS/ungrib/ungrib.exe ./
$ ln -s $WRFversion/WPS/link_grib.csh ./
$ cp ../geogrid/namelist.wps ./

- Edit the namelist namelist.wps to set up the right period of simulation and frequency of forcing (you can also use nano, emacs, ...)

$ vim namelist.wps
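The period and forcing frequency live in the &share section of namelist.wps (start_date, end_date and interval_seconds). As an alternative to editing by hand, the sketch below rewrites those three entries with sed. The helper name `set_wps_period` is hypothetical; it assumes two domains and one entry per line, and the dates in the demo fragment are placeholders. ERA-Interim at 00, 06, 12, 18 corresponds to a 6-hour interval, i.e. 21600 seconds.

```shell
# Set the simulation period and forcing frequency in namelist.wps.
# `set_wps_period` is a hypothetical helper; it assumes two domains and
# that start_date, end_date and interval_seconds each sit on one line.
set_wps_period() {
    file="$1"; start="$2"; end="$3"; hours="$4"
    seconds=$((hours * 3600))      # forcing interval in seconds
    sed -e "s/^\([[:space:]]*start_date[[:space:]]*=\).*/\1 '$start', '$start',/" \
        -e "s/^\([[:space:]]*end_date[[:space:]]*=\).*/\1 '$end', '$end',/" \
        -e "s/^\([[:space:]]*interval_seconds[[:space:]]*=\).*/\1 $seconds,/" \
        "$file" > "$file.tmp" && mv "$file.tmp" "$file"
}

# Demo on a throw-away &share fragment with placeholder dates.
demo_dir=$(mktemp -d)
cat > "$demo_dir/namelist.wps" <<'EOF'
&share
 wrf_core = 'ARW',
 max_dom = 2,
 start_date = '2006-08-16_12:00:00', '2006-08-16_12:00:00',
 end_date = '2006-08-16_18:00:00', '2006-08-16_12:00:00',
 interval_seconds = 10800,
/
EOF

set_wps_period "$demo_dir/namelist.wps" 2012-12-01_00:00:00 2012-12-10_00:00:00 6
grep -E 'start_date|end_date|interval_seconds' "$demo_dir/namelist.wps"
```

Writing to a temporary file and moving it into place keeps the edit portable, since in-place sed options differ between systems.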

- Creation of a folder for the GRIB files and linking of the necessary files (e.g. 4 files per month) from a folder holding all the necessary data, called $inDATA

$ mkdir GribDir
$ cd GribDir
$ ln -s $inDATA/*201212*.grib ./
$ cd ..

- Re-link files for WRF with its own script

./link_grib.csh GribDir/*

- The following links should appear:

$ ls GRIBFILE.AA*
GRIBFILE.AAA GRIBFILE.AAB GRIBFILE.AAC GRIBFILE.AAD
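A quick way to confirm that every input file was re-linked is to compare the number of files in GribDir with the number of GRIBFILE.* links. The sketch below mimics the layout in a scratch directory; `count_matches` is a hypothetical helper, and the loop creating the links merely stands in for link_grib.csh.

```shell
# Verify that there is one GRIBFILE.* link per input GRIB file.
# `count_matches` is a hypothetical helper.
count_matches() {
    # Count existing paths among the arguments; an unmatched glob stays
    # literal and is skipped by the existence test.
    n=0
    for f in "$@"; do
        if [ -e "$f" ] || [ -L "$f" ]; then n=$((n + 1)); fi
    done
    echo "$n"
}

# Demo: four stand-in GRIB files and the links that would point to them.
demo_dir=$(mktemp -d)
mkdir "$demo_dir/GribDir"
i=1
for suffix in AAA AAB AAC AAD; do
    : > "$demo_dir/GribDir/file$i.grib"
    ln -s "$demo_dir/GribDir/file$i.grib" "$demo_dir/GRIBFILE.$suffix"
    i=$((i + 1))
done

n_in=$(count_matches "$demo_dir"/GribDir/*)
n_links=$(count_matches "$demo_dir"/GRIBFILE.*)
if [ "$n_in" -eq "$n_links" ]; then
    echo "all $n_in GRIB files linked"
else
    echo "$n_in input files but $n_links links" >&2
fi
```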

- We need to map the GRIB codes to the actual variables. WPS comes with predefined GRIB equivalences for different sources in the folder Variable_Tables. In this example we use ECMWF ERA-Interim at pressure levels, thus we link

$ ln -s $WRFversion/WPS/ungrib/Variable_Tables/Vtable.ERA-interim.pl ./Vtable

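- We need the domain file with the right dates (skip this copy if namelist.wps was already copied and edited above, since copying again would overwrite those edits)

$ cp ../geogrid/namelist.wps ./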

- Files can be unpacked using the PBS job file (the user's email has to be changed)

$ cp /share/WRF/launch_ungrib_intel.pbs ./
$ qsub launch_ungrib_intel.pbs

- If everything went fine, files like these should appear:

FILE:[YYYY]-[MM]-[DD]_[HH]

- And...

$ tail run_ungrib.log
(...)
**********
Done deleting temporary files.
**********
!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!
!  Successful completion of ungrib.   !
!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!
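The success banner can also be tested from a script, which is handy when chaining the WPS steps. A minimal sketch, assuming the log file name used above; `wps_succeeded` is a hypothetical helper and the demo log is written only for the example.

```shell
# Report whether a WPS log ends with the success banner shown above.
# `wps_succeeded` is a hypothetical helper; $2 is the program name.
wps_succeeded() {
    log="$1"; program="$2"
    tail -n 5 "$log" | grep -q "Successful completion of ${program}"
}

# Demo on a stand-in log file.
demo_log=$(mktemp)
cat > "$demo_log" <<'EOF'
**********
Done deleting temporary files.
**********
!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!
!  Successful completion of ungrib.   !
!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!
EOF

if wps_succeeded "$demo_log" ungrib; then
    echo "ungrib finished correctly"
else
    echo "ungrib did not finish, check the log" >&2
fi
```

The same function works for the metgrid log by passing metgrid as the program name.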

metgrid

Horizontal interpolation of atmospheric forcing data at the domain of simulation

- Creation of the folder (from $WORKDIR)

$ mkdir metgrid
$ cd metgrid

- Getting necessary files (do not forget to set opt_metgrid_tbl_path = './' in the &metgrid section of the namelist, to avoid ERROR: Could not open file METGRID.TBL)

$ ln -s $WRFversion/WPS/metgrid/metgrid.exe ./
$ ln -s $WRFversion/WPS/metgrid/METGRID.TBL.ARW ./METGRID.TBL

- Getting the ungrib output

$ ln -s ../ungrib/FILE* ./

- Link the domains of simulation

$ ln -s ../geogrid/geo_em.d* ./

- Link the namelist from ungrib (to make sure we are using the same one!)

$ ln -s ../ungrib/namelist.wps ./

- Get the PBS job script to run metgrid.exe (remember to edit the user's email in the PBS job)

$ cp /share/WRF/launch_metgrid_intel.pbs ./
$ qsub launch_metgrid_intel.pbs

- If everything went fine one should have

met_em.d[nn].[YYYY]-[MM]-[DD]_[HH]:[MI]:[SS].nc

- And...

$ tail run_metgrid.log
(...)
!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!
!  Successful completion of metgrid.  !
!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!
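When scripting checks on the metgrid output, it can help to recover the domain and the date from each met_em file name. The sketch below uses only shell parameter expansion; the helper names `met_em_domain` and `met_em_date` are hypothetical.

```shell
# Split a met_em file name into its domain and date parts.
# `met_em_domain` and `met_em_date` are hypothetical helpers.
met_em_domain() {
    name=${1##*/}              # drop any leading directory
    name=${name#met_em.d}      # drop the fixed prefix
    echo "${name%%.*}"         # keep the two-digit domain number
}

met_em_date() {
    name=${1##*/}
    name=${name#met_em.d??.}   # drop prefix and domain
    echo "${name%.nc}"         # drop the extension
}

met_em_domain met_em.d02.2012-12-01_06:00:00.nc   # prints 02
met_em_date   met_em.d02.2012-12-01_06:00:00.nc   # prints 2012-12-01_06:00:00
```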

real

Vertical interpolation of atmospheric forcing at the domain of simulation

- Creation of the folder (from $WORKDIR)

$ mkdir run
$ cd run

- Link all the necessary files from WRF (this already links everything needed to run the model)

$ ln -s $WRFversion/WRF/run/* ./

- Remove the linked configuration file (namelist.input) and copy it instead

$ rm namelist.input
$ cp $WRFversion/WRF/run/namelist.input ./

- Edit the file and prepare the configuration for the run (re-adapt domain, physics, dates, output, ...). See an example for the two nested domains at WRF/namelist.input

$ vim namelist.input

- Linking the metgrid generated files

$ ln -s ../metgrid/met_em.d*.nc ./

- Getting the PBS job script for real.exe

$ cp /share/WRF/run_real.pbs ./

- Getting a bash script to run executables in hydra (there is an issue with ulimit which does not allow running on more than one node)

$ cp /share/WRF/launch_pbs.bash ./

- And run it

qsub run_real.pbs

- If everything went fine one should have (the basic ones):

wrfbdy_d[nn]
wrfinput_d[nn]
...

  - wrfbdy_d01: Boundary conditions file (only for the first domain)
  - wrfinput_d[nn]: Initial conditions file for each domain
  - wrffdda_d[nn]: Nudging file [optional]
  - wrflowinp_d[nn]: File with surface characteristics updated at every time step of the atmospheric forcing [optional]

- And...

$ tail realout/[InitialDATE]-[EndDATE]/rsl.error.0000
(...)
real_em: SUCCESS COMPLETE REAL_EM INIT
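Before submitting wrf.exe, the presence of the basic real.exe output can be checked from a script. This is a sketch under the assumptions stated in the comments; `check_real_output` is a hypothetical helper and the demo uses empty stand-in files.

```shell
# Check that real.exe left the basic files for a run with N domains.
# `check_real_output` is a hypothetical helper.
check_real_output() {
    dir="$1"; ndom="$2"; missing=0
    # Boundary conditions exist only for the first domain.
    [ -e "$dir/wrfbdy_d01" ] || { echo "missing wrfbdy_d01" >&2; missing=1; }
    d=1
    while [ "$d" -le "$ndom" ]; do
        f=$(printf 'wrfinput_d%02d' "$d")
        [ -e "$dir/$f" ] || { echo "missing $f" >&2; missing=1; }
        d=$((d + 1))
    done
    return "$missing"
}

# Demo with empty stand-in files for a two-domain run.
demo_dir=$(mktemp -d)
: > "$demo_dir/wrfbdy_d01"
: > "$demo_dir/wrfinput_d01"
: > "$demo_dir/wrfinput_d02"

if check_real_output "$demo_dir" 2; then
    echo "real output complete"
fi
```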

WRF

- WRF simulation (see the description of the namelist for configuration details).

- Getting the necessary PBS job (same folder as for real)

$ cp /share/WRF/run_wrf.pbs ./

- And run it

qsub run_wrf.pbs

- If everything went fine one should have (the basic ones):

wrfout_d[nn]_[YYYY]-[MM]-[DD]_[HH]:[MI]:[SS]
wrfrst_d[nn]_[YYYY]-[MM]-[DD]_[HH]:[MI]:[SS]
...

  - wrfout/wrfout_d[nn]_[YYYY]-[MM]-[DD]_[HH]:[MI]:[SS]: simulation output (at η=(p-ptop)/(psfc-ptop) levels)
  - wrfout/wrfrst_d[nn]_[YYYY]-[MM]-[DD]_[HH]:[MI]:[SS]: restart file (to continue simulation)
  - wrfout/wrfxtrm_d[nn]_[YYYY]-[MM]-[DD]_[HH]:[MI]:[SS]: file with extremes from internal integration [optional]
  - wrfout/wrfpress_d[nn]_[YYYY]-[MM]-[DD]_[HH]:[MI]:[SS]: file at vertical pressure levels [optional]
  - namelist.output_[YYYY]-[MM]-[DD]_[HH]:[MI]:[SS]: All the parameters used for the simulation
  - stations: folder with the time-series files (tslist)

- While the model is running, one can check its progress by looking at the rsl.error.0000 file, e.g.:

$ tail rsl.error.0000
(...)
Timing for main: time 2012-12-01_00:13:30 on domain 1: 3.86105 elapsed seconds
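To see at a glance how far the simulation has advanced, the last time stamp reported for a domain can be extracted from the timing lines. A minimal sketch: `last_model_time` is a hypothetical helper, and the first two lines of the demo log are made-up samples in the same format as the line shown above.

```shell
# Print the last model time reported in an rsl file for a given domain.
# `last_model_time` is a hypothetical helper; $2 defaults to domain 1.
last_model_time() {
    file="$1"; domain="${2:-1}"
    awk -v dom="$domain" '
        /Timing for main/ {
            ts = ""; d = -1
            for (i = 1; i < NF; i++) {
                if ($i == "time")   ts = $(i + 1)      # time stamp field
                if ($i == "domain") d  = $(i + 1) + 0  # "1:" becomes 1
            }
            if (d == dom && ts != "") last = ts
        }
        END { if (last != "") print last }
    ' "$file"
}

# Demo on a stand-in log (first two lines are made-up samples).
demo_log=$(mktemp)
cat > "$demo_log" <<'EOF'
Timing for main: time 2012-12-01_00:12:00 on domain   1:    3.90210 elapsed seconds
Timing for main: time 2012-12-01_00:12:20 on domain   2:    0.81240 elapsed seconds
Timing for main: time 2012-12-01_00:13:30 on domain   1:    3.86105 elapsed seconds
EOF

last_model_time "$demo_log" 1    # prints 2012-12-01_00:13:30
```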

WRF: known errors

CFL

- But if something went wrong:
  - CFL: in wrfout/[InitialDATE]-[EndDATE]/ there are the files rsl.[error/out].[nnnn] (two per CPU). A SIGSEGV segmentation fault usually appears there and it can have different sources, most often cfl. It usually helps to look at the largest rsl file (after the 0000 one), found with [$ ls -rS rsl.error.*], e.g.:

$ wrfout/20121201000000-20121210000000/rsl.error.0009
(...)
d01 2012-12-01_01:30:00 33 points exceeded cfl=2 in domain d01 at time 2012-12-01_01:30:00 hours
d01 2012-12-01_01:30:00 MAX AT i,j,k: 100 94 21 vert_cfl,w,d(eta)= 5.897325 17.00738 3.2057911E-02
(...)
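Instead of inspecting the rsl files one by one, the ones reporting CFL violations can be listed together with the number of matching lines. This is a sketch only: `cfl_report` is a hypothetical helper, and the demo files are stand-ins that reuse the message format shown above.

```shell
# List rsl.error files that report CFL violations, with the number of
# matching lines per file. `cfl_report` is a hypothetical helper.
cfl_report() {
    dir="$1"
    for f in "$dir"/rsl.error.*; do
        [ -e "$f" ] || continue
        # grep -c exits non-zero when the count is 0, hence "|| true".
        n=$(grep -c 'points exceeded cfl' "$f" || true)
        if [ "$n" -gt 0 ]; then echo "${f##*/} $n"; fi
    done
}

# Demo with two stand-in rsl files.
demo_dir=$(mktemp -d)
echo "Timing for main: time 2012-12-01_00:13:30 on domain 1: 3.86105 elapsed seconds" \
    > "$demo_dir/rsl.error.0000"
cat > "$demo_dir/rsl.error.0009" <<'EOF'
d01 2012-12-01_01:30:00 33 points exceeded cfl=2 in domain d01 at time 2012-12-01_01:30:00 hours
d01 2012-12-01_01:30:00 MAX AT i,j,k: 100 94 21 vert_cfl,w,d(eta)= 5.897325 17.00738 3.2057911E-02
d01 2012-12-01_01:31:30 12 points exceeded cfl=2 in domain d01 at time 2012-12-01_01:31:30 hours
EOF

cfl_report "$demo_dir"    # prints rsl.error.0009 2
```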

Restart not working

Since a certain WRF version, a new namelist parameter (in the &time_control section) must be set in order to be able to continue a simulation from a given restart file [WRF restart]:

override_restart_timers = .true.

If we also want values written to the output files at the time of the restart, we need to add the following parameter to the &time_control section:

write_hist_at_0h_rst = .true.
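A small check before submitting a restart run can catch a missing parameter. The sketch below assumes the run is flagged as a restart with restart = .true. in &time_control; `check_restart_options` is a hypothetical helper and the namelist fragments are stand-ins written for the demo.

```shell
# Warn when a restart run lacks override_restart_timers in namelist.input.
# `check_restart_options` is a hypothetical helper.
check_restart_options() {
    file="$1"
    # Nothing to check unless restart = .true. is set.
    grep -qi '^[[:space:]]*restart[[:space:]]*=[[:space:]]*\.true\.' "$file" || return 0
    if grep -qi '^[[:space:]]*override_restart_timers[[:space:]]*=[[:space:]]*\.true\.' "$file"; then
        return 0
    fi
    echo "restart run without override_restart_timers = .true." >&2
    return 1
}

# Demo on a throw-away &time_control fragment.
demo_dir=$(mktemp -d)
cat > "$demo_dir/namelist.input" <<'EOF'
&time_control
 restart                 = .true.,
 restart_interval        = 1440,
 override_restart_timers = .true.,
 write_hist_at_0h_rst    = .true.,
/
EOF

if check_restart_options "$demo_dir/namelist.input"; then
    echo "restart options look consistent"
fi
```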

Additional information

For additional information and further details, visit WRFextras

WRF4L: Lluís' WRF work-flow management

For information about Lluís' WRF work-flow management, visit WRF4L

CDXWRF: WRF for CORDEX

A new module developed at CIMA to meet the CORDEX variable requirements; see CDXWRF

WRFles

For LES simulations with the WRF model, see WRFles