WRF


Model description

The Weather Research and Forecasting model (WRF; Skamarock, 2008) is a limited-area atmospheric model developed by a consortium of American institutions with contributions from the whole community. It is a non-hydrostatic, primitive-equations model used in a large variety of research areas.

Compilation

The model (version 3.9.1) has already been compiled on hydra.

For compilation using the gcc compilers see WRFgcc.

- From an installation folder [INSTALLDIR] (/share/WRF, in hydra), create a folder structure

[INSTALLDIR]/[WRFversion]/[compiler]/[compilation_kind]/

- As an example, WRFv3.9.1 compiled with the Intel compilers with distributed memory only

$ mkdir -p /share/WRF/WRFV3.9.1/ifort/dmpar/
$ cd /share/WRF/WRFV3.9.1/ifort/dmpar/

- Decompressing code source files

$ tar xvfz ../../../WRFV3.9.1.1.TAR.gz
$ mv WRF WRFV3
$ tar xvfz ../../../WPS.9.1.TAR.gz

- Compilation of the model (wrf.exe, real.exe)

- Going to the model source folder

$ cd WRFV3

- Declaration of location of netcdf libraries and large file support (> 2GB)

$ export NETCDF='/usr/local'
$ export WRFIO_NCD_LARGE_FILE_SUPPORT=1

- Running the configuration of the compilation and picking INTEL (ifort/icc) with distributed memory (dmpar): option 15, and 1 for `basic' nesting

$ ./configure

- Making a copy of the configuration file to make two modifications

$ cp configure.wrf configure.ifort.dmpar.wrf

- Modifying the file configure.ifort.dmpar.wrf (the original lines are kept commented out)

#DM_FC = mpif90 -f90=$(SFC)
#DM_CC = mpicc -cc=$(SCC) -DMPI2_SUPPORT
DM_FC = mpif90
DM_CC = mpicc -DMPI2_SUPPORT

- Using the modified file

$ cp configure.ifort.dmpar.wrf configure.wrf
$ ./compile em_real >& compile.log

- If everything went fine one should have

$ ls main/*.exe
main/ndown.exe main/real.exe main/tc.exe main/wrf.exe

- And the following searches should return nothing:

$ cat -n compile.log | grep Error
$ cat -n compile.log | grep undefined

- Compilation of the WRF preprocessing system (WPS: geogrid.exe, ungrib.exe, metgrid.exe)

- Going there

$ cd ../WPS

- Running configure and picking option 20 (Linux x86_64, Intel compiler (dmpar_NO_GRIB2))

$ ./configure

- Compiling

./compile >& compile.log

- If everything went right, one should have

$ ls */*.exe
geogrid/geogrid.exe  util/avg_tsfc.exe      util/int2nc.exe
metgrid/metgrid.exe  util/calc_ecmwf_p.exe  util/mod_levs.exe
ungrib/g1print.exe   util/g1print.exe       util/rd_intermediate.exe
ungrib/g2print.exe   util/g2print.exe
ungrib/ungrib.exe    util/height_ukmo.exe
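The check of compile.log described above (no lines with Error or undefined) applies to both the model and the WPS builds, and it can be wrapped in a small function. This is only a sketch: the name `check_compile_log` is hypothetical and not part of WRF, and the two patterns are simply the ones searched for above.

```shell
# Hypothetical helper (not part of WRF): scan a compile log for the two
# patterns searched for above and report through the exit status.
check_compile_log() {
    # $1: path to a compile.log file
    if [ ! -r "$1" ]; then
        echo "cannot read $1" >&2
        return 2
    fi
    # grep -n prefixes each match with its line number,
    # similar to 'cat -n compile.log | grep ...'
    if grep -n -e 'Error' -e 'undefined' "$1"; then
        return 1    # at least one suspicious line was printed
    fi
    return 0        # nothing found: the log looks clean
}

# Possible use, from the WRFV3 or WPS folder:
#   check_compile_log compile.log && echo "compile.log looks clean"
```

A zero exit status only means that neither pattern was found; the presence of the expected executables still has to be checked as shown above.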

Forcings

Atmospheric forcings

Provide the atmospheric conditions to the model at a given date.

- There is a shared space called /share

- At hydra all forcings are at:

/share/DATA/

- ERA-Interim

- Part of the ERA-Interim forcings are at:

/share/DATA/re-analysis/ERA-Interim/

- Global monthly files at 0.75° horizontal resolution and all time-steps (00, 06, 12, 18) are labelled as:

- ERAI_pl[YYYY][MM]_[var1]-[var2].grib: pressure-level variables (all levels). GRIB codes as:
  - 129: geopotential
  - 157: relative humidity
  - 130: temperature
  - 131: u-wind
  - 132: v-wind

- ERAI_sfc[YYYY][MM].grib: all surface-level variables (step 0)

- To download data:

- Generate files from ECMWF web-page ERA-Interim

- Go to the folder in hydra

$ cd /share/DATA/re-analysis/ERA-Interim/

- Get the file (as a link from the ECMWF web-page, at the bottom right on `Download grib'), e.g.:

$ wget https://stream.ecmwf.int/data/atls05/data/data02/scratch/_mars-atls05-a82bacafb5c306db76464bc7e824bb75-zn7P44.grib

- Rename file according to its content

$ mv _mars-atls05-a82bacafb5c306db76464bc7e824bb75-zn7P44.grib ERAI_sfc201302.grib
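The labelling convention above can be generated rather than typed, which helps to avoid mistakes when renaming many downloaded files. The sketch below assumes nothing beyond the two patterns listed above; the function names `erai_sfc_name` and `erai_pl_name` are hypothetical, and the [var1] and [var2] labels are passed through exactly as given.

```shell
# Hypothetical helpers (names are illustrative): build ERA-Interim file
# names following the labelling used in /share/DATA/re-analysis/ERA-Interim/
erai_sfc_name() {
    # $1: year (YYYY), $2: month (1-12, with or without a leading zero)
    # ${2#0} drops a leading zero so that 08 and 09 are not read as octal
    printf 'ERAI_sfc%04d%02d.grib\n' "$1" "${2#0}"
}

erai_pl_name() {
    # $1: year, $2: month, $3: [var1] label, $4: [var2] label
    printf 'ERAI_pl%04d%02d_%s-%s.grib\n' "$1" "${2#0}" "$3" "$4"
}

# The rename of the example above would then read
#   mv [downloaded file] "$(erai_sfc_name 2013 2)"
erai_sfc_name 2013 2
```

The last line prints ERAI_sfc201302.grib, the name used in the example above.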

- ERA5

- Part of the ERA5 forcings are at:

/share/DATA/re-analysis/ERA5/

- NCEP-NNRP1

- The full NCEP-NNRP1 forcings are at (NOTE: no land data!):

/share/DATA/re-analysis/NCEP_NNRP/

Morphological forcings

Provide the geomorphological information for the domain of simulation: topography, land-use, vegetation-types, etc...

- WRF provides a huge amount of data and sources at different resolutions. At hydra everything is already there at:

/share/GEOG/

- They are ready to be used

Model use

WRF has two main parts:

- WPS: generation of the domain and of the initial and boundary conditions. It runs 4 programs:
  - geogrid: domain generation
  - ungrib: unpacks the atmospheric forcing grib files
  - metgrid: horizontal interpolation of the unpacked atmospheric forcing files onto the domain of simulation
  - real: generation of the initial and boundary conditions using the metgrid output

- WRF: the model itself: wrf.exe

At hydra all the code is already compiled (with folders WPS and WRFV3) at:

/share/WRF/[WRFversion]/[compiler]/[compilation_kind]/

As an example, WRFv3.9.1 compiled with the Intel compilers with distributed memory only:

/share/WRF/WRFV3.9.1/ifort/dmpar/
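Since this path is repeated in most of the commands that follow, it may be convenient to build it once and keep it in a variable. The following is only a sketch of the convention above: the function name `wrf_install_dir` and the variable `WRFDIR` are illustrative and not part of WRF or of the hydra setup.

```shell
# Hypothetical helper (not part of WRF): build the installation path
# [INSTALLDIR]/[WRFversion]/[compiler]/[compilation_kind]/
wrf_install_dir() {
    # $1: INSTALLDIR, $2: WRF version, $3: compiler, $4: compilation kind
    printf '%s/%s/%s/%s/\n' "$1" "$2" "$3" "$4"
}

# Example with the values used in this page
WRFDIR=$(wrf_install_dir /share/WRF WRFV3.9.1 ifort dmpar)
echo "$WRFDIR"
```

With these values the script prints /share/WRF/WRFV3.9.1/ifort/dmpar/, so a link such as the one to geogrid.exe could be written against "${WRFDIR}WPS/geogrid/geogrid.exe".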

WPS

Let's assume that we work in a folder called WORKDIR (in the user's ${HOME} at hydra). As an example, let's create two nested domains: a first one at 30 km covering the whole of South America and a second one at 4.285 km over the Córdoba mountain ranges (see WRF/namelist.wps).

- geogrid: generation of the domain

- Creation of a folder for the geogrid section

$ mkdir geogrid
$ cd geogrid

- Take the necessary files (do not forget opt_geogrid_tbl_path = './', in the &geogrid section of the namelist!):

$ ln -s /share/WRF/WRFV3.9.1/ifort/dmpar/WPS/geogrid/geogrid.exe ./
$ ln -s /share/WRF/WRFV3.9.1/ifort/dmpar/WPS/geogrid/GEOGRID.TBL.ARW ./GEOGRID.TBL
$ cp /share/WRF/WRFV3.9.1/ifort/dmpar/WPS/geogrid/namelist.wps ./

- Domain configuration is done via namelist.wps (more information at: WPS user guide)

$ vim namelist.wps

- Once it is defined, run it:

./geogrid.exe >& run_geogrid.log

- It will create the domain files, one for each domain

geo_em.d[nn].nc

- Some variables:
  - LANDMASK: sea-land mask
  - XLAT_M: latitude on mass point
  - XLONG_M: longitude on mass point
  - HGT_M: orographical height

- At hydra the domain for SESA at 20 km for WRFsensSFC is located at

home/lluis.fita/estudios/dominios/CDXWRF
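The geogrid step above depends on opt_geogrid_tbl_path being set in namelist.wps, and it is easy to forget after copying a fresh namelist. A quick check before running the executable could look like the sketch below; the function name `namelist_has_option` is hypothetical, and the test only verifies that the option is assigned somewhere in the file, not that its value is correct.

```shell
# Hypothetical helper: succeed if a namelist file assigns the given option
namelist_has_option() {
    # $1: namelist file, $2: option name (e.g. opt_geogrid_tbl_path)
    # Match the option at the start of a line (leading blanks allowed)
    # followed by '=', so lines commented out with '!' do not match
    grep -q -E "^[[:space:]]*$2[[:space:]]*=" "$1"
}

# Possible use, from the geogrid folder:
#   namelist_has_option namelist.wps opt_geogrid_tbl_path \
#       || echo "opt_geogrid_tbl_path is missing in namelist.wps"
```

The same function can be called with opt_metgrid_tbl_path before running metgrid.exe.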

- ungrib: unpack grib files

- Creation of the folder (from $WORKDIR)

$ mkdir ungrib
$ cd ungrib

- Linking necessary files from compiled source

$ ln -s /share/WRF/WRFV3.9.1/ifort/dmpar/WPS/ungrib/ungrib.exe ./
$ ln -s /share/WRF/WRFV3.9.1/ifort/dmpar/WPS/link_grib.csh ./

- Creation of a folder for the necessary GRIB files and linking the necessary files (4 files per month)

$ mkdir GribDir
$ cd GribDir
$ ln -s /share/DATA/re-analysis/ERA-Interim/*201212*.grib ./
$ cd ..

- Re-link files for WRF with its own script

./link_grib.csh GribDir/*

- The following links should appear:

$ ls GRIBFILE.AA*
GRIBFILE.AAA GRIBFILE.AAB GRIBFILE.AAC GRIBFILE.AAD

- We need to provide the equivalences between the GRIB codes and the actual variables. WRF comes with already defined GRIB equivalences for different sources in the folder Variable_Tables. In our case we use ECMWF ERA-Interim at pressure levels, thus we link

$ ln -s /share/WRF/WRFV3.9.1/ifort/dmpar/WPS/ungrib/Variable_Tables/Vtable.ERA-interim.pl ./Vtable

- We need to take the namelist with the domain definition and set the right dates

$ cp ../geogrid/namelist.wps ./

- Files can be unpacked

./ungrib.exe >& run_ungrib.log

- If everything went fine, the following files should appear:

FILE:[YYYY]-[MM]-[DD]_[HH]

- And...

$ tail run_ungrib.log
(...)
**********
Done deleting temporary files.
**********
!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!
! Successful completion of ungrib. !
!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

- metgrid: horizontal interpolation of the atmospheric forcing data onto the domain of simulation

- Creation of the folder (from $WORKDIR)

$ mkdir metgrid
$ cd metgrid

- Getting the necessary files (do not forget opt_metgrid_tbl_path = './', in the &metgrid section of the namelist, to avoid: ERROR: Could not open file METGRID.TBL)

$ ln -s /share/WRF/WRFV3.9.1/ifort/dmpar/WPS/metgrid/metgrid.exe ./
$ ln -s /share/WRF/WRFV3.9.1/ifort/dmpar/WPS/metgrid/METGRID.TBL.ARW ./METGRID.TBL

- Getting the ungrib output

$ ln -s ../ungrib/FILE* ./

- Link the domains of simulation

$ ln -s ../geogrid/geo_em.d* ./

- Link the namelist from ungrib (to make sure we are using the same one!)

$ ln -s ../ungrib/namelist.wps ./

- Get the PBS (job queue) script to run metgrid.exe

$ cp /share/WRF/run_metgrid.pbs ./

- And run it

qsub run_metgrid.pbs

- If everything went fine one should have

met_em.d[nn].[YYYY]-[MM]-[DD]_[HH]:[MI]:[SS].nc

- And...

$ tail run_metgrid.log
(...)
!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!
! Successful completion of metgrid. !
!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!
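Both ungrib and metgrid end their logs with a "Successful completion of ..." banner, so the visual check with tail can also be done by a script, for instance before submitting the next step. A minimal sketch follows, assuming only the banner text shown above; the function name `wps_step_ok` is hypothetical.

```shell
# Hypothetical helper: succeed if the log of a WPS program contains the
# "Successful completion of <program>" banner shown above
wps_step_ok() {
    # $1: program name (ungrib or metgrid), $2: log file
    grep -q "Successful completion of $1" "$2"
}

# Possible use, from the metgrid folder:
#   wps_step_ok metgrid run_metgrid.log || echo "metgrid did not finish"
```

The function says nothing about why a step failed; in that case the log itself still has to be read.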

- real: vertical interpolation of the atmospheric forcing onto the domain of simulation

- Creation of the folder (from $WORKDIR)

$ mkdir run
$ cd run

- Link all the necessary files from WRF (this already links everything needed to run the model)

$ ln -s /share/WRF/WRFV3.9.1/ifort/dmpar/WRFV3/run/* ./

- Remove the linked configuration file (namelist.input) and copy it instead

$ rm namelist.input
$ cp /share/WRF/WRFV3.9.1/ifort/dmpar/WRFV3/run/namelist.input ./

- Edit the file and prepare the configuration for the run (re-adapt domain, physics, dates, output, ...). See an example for the two nested domains at WRF/namelist.input

$ vim namelist.input

- Linking the metgrid generated files

$ ln -s ../metgrid/met_em.d*.nc ./

- Getting the PBS job script for real.exe

$ cp /share/WRF/run_real.pbs ./

- Getting a bash script to run executables in hydra (there is an issue with ulimit which does not allow running on more than one node)

$ cp /share/WRF/launch_pbs.bash ./

- And run it

qsub run_real.pbs

- If everything went fine one should have (the basic ones):

wrfbdy_d[nn] wrfinput_d[nn] ...

- wrfbdy_d01: boundary conditions file (only for the first domain)
- wrfinput_d[nn]: initial conditions file for each domain
- wrffdda_d[nn]: nudging file [optional]
- wrflowinp_d[nn]: file with the surface characteristics updated at every time-step of the atmospheric forcing [optional]

- And...

$ tail realout/[InitialDATE]-[EndDATE]/rsl.error.0000
(...)
real_em: SUCCESS COMPLETE REAL_EM INIT

WRF

- wrf: simulation (see the description of the namelist for its configuration/specifications).

- Getting the necessary PBS job (in the same folder as for real)

$ cp /share/WRF/run_wrf.pbs ./

- And run it

qsub run_wrf.pbs

- If everything went fine one should have (the basic ones):

wrfout_d[nn]_[YYYY]-[MM]-[DD]_[HH]:[MI]:[SS] wrfrst_d[nn]_[YYYY]-[MM]-[DD]_[HH]:[MI]:[SS] ...

- wrfout/wrfout_d[nn]_[YYYY]-[MM]-[DD]_[HH]:[MI]:[SS]: simulation output (at η=(p-ptop)/(psfc-ptop) levels)
- wrfout/wrfrst_d[nn]_[YYYY]-[MM]-[DD]_[HH]:[MI]:[SS]: restart file (to continue the simulation)
- wrfout/wrfxtrm_d[nn]_[YYYY]-[MM]-[DD]_[HH]:[MI]:[SS]: file with extremes from the internal integration [optional]
- wrfout/wrfpress_d[nn]_[YYYY]-[MM]-[DD]_[HH]:[MI]:[SS]: file at vertical pressure levels [optional]
- namelist.output_[YYYY]-[MM]-[DD]_[HH]:[MI]:[SS]: all the parameters used for the simulation
- stations: folder with the time-series files (tslist)
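As a worked example of the vertical coordinate of the wrfout files, η=(p-ptop)/(psfc-ptop) can be evaluated for a single point. The sketch below uses awk for the floating-point division; the function name `eta_level` is hypothetical and the pressure values (in Pa) are purely illustrative, not taken from any simulation.

```shell
# Hypothetical helper: evaluate eta = (p - ptop) / (psfc - ptop)
eta_level() {
    # $1: pressure p, $2: model-top pressure ptop, $3: surface pressure psfc
    # (all three in the same units)
    awk -v p="$1" -v ptop="$2" -v psfc="$3" \
        'BEGIN { printf "%.4f\n", (p - ptop) / (psfc - ptop) }'
}

# Illustrative values only: p = 500 hPa, ptop = 50 hPa, psfc = 1000 hPa
eta_level 50000 5000 100000
```

With these values the call prints 0.4737. By construction η is 1 at the surface (p = psfc) and 0 at the model top (p = ptop).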

- While running, one can check the status by looking at the rsl.error.0000 file, e.g.:

$ tail rsl.error.0000
(...)
Timing for main: time 2012-12-01_00:13:30 on domain 1: 3.86105 elapsed seconds
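The "Timing for main" lines can also be summarised instead of read one by one, e.g. to know the last simulated time and the mean wall-clock cost per time-step. The following is a sketch that assumes the line layout shown above (simulated time in the 5th field, elapsed seconds in the third field from the end); the function names are hypothetical.

```shell
# Hypothetical helpers to summarise the 'Timing for main' lines of an rsl file
wrf_last_time() {
    # $1: rsl file; prints the last simulated time that was reported
    grep 'Timing for main' "$1" | tail -n 1 | awk '{ print $5 }'
}

wrf_mean_step_seconds() {
    # $1: rsl file; prints the mean elapsed seconds per reported time-step
    awk '/Timing for main/ { n++; s += $(NF-2) }
         END { if (n > 0) printf "%.3f\n", s / n }' "$1"
}

# Possible use, from the run folder:
#   wrf_last_time rsl.error.0000
#   wrf_mean_step_seconds rsl.error.0000
```

Note that all domains are mixed in the mean; filtering on the "on domain" field first would give a per-domain figure.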

WRF: known errors

- But if something went wrong:

- CFL: At wrfout/[InitialDATE]-[EndDATE]/ there are the files rsl.[error/out].[nnnn] (two per CPU). A SIGSEGV segmentation fault usually appears in them, and one has to look for its source, which is usually cfl. It helps to look at the largest rsl file after the 0000 one ($ ls -rS rsl.error.* lists the largest file last), e.g.:

$ cat wrfout/20121201000000-20121210000000/rsl.error.0009
(...)
d01 2012-12-01_01:30:00 33 points exceeded cfl=2 in domain d01 at time 2012-12-01_01:30:00 hours
d01 2012-12-01_01:30:00 MAX AT i,j,k: 100 94 21 vert_cfl,w,d(eta)= 5.897325 17.00738 3.2057911E-02
(...)
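Instead of opening the rsl files one by one, the CFL messages can be counted per file. This is a sketch that only relies on the "points exceeded cfl" text shown in the example above; the function name `cfl_report` is hypothetical.

```shell
# Hypothetical helper: list the rsl.error.* files of a folder that contain
# CFL warnings, together with the number of such lines in each file
cfl_report() {
    # $1: folder with the rsl.error.[nnnn] files
    for f in "$1"/rsl.error.*; do
        [ -f "$f" ] || continue
        # grep -c prints 0 and returns non-zero when nothing matches;
        # '|| true' keeps the count without aborting under 'set -e'
        n=$(grep -c 'points exceeded cfl' "$f" || true)
        if [ "$n" -gt 0 ]; then
            echo "$f $n"
        fi
    done
}

# Possible use:
#   cfl_report wrfout/20121201000000-20121210000000
```

Files without any CFL message are not listed, so an empty output suggests that the crash has a different origin.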

Additional information

For additional information and further details visit WRFextras

WRF4L: Lluís' WRF work-flow management

For information about Lluís' WRF work-flow management visit WRF4L

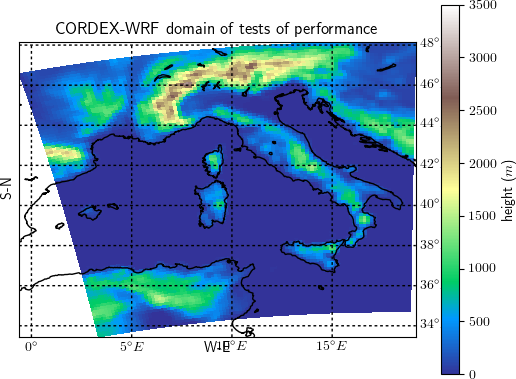

CDXWRF: WRF for CORDEX

A new module developed at CIMA to meet the CORDEX variable demands; for details visit CDXWRF