WRFextras


Aerosol climatologies for Thompson aerosol aware

Since WRF v3.6 there is an option to use a microphysics scheme that is aware of aerosol densities. See more details in Thompson Aerosol-aware.

One needs to add, at the WPS metgrid step, the files with the aerosol climatologies. These climatologies come from GCM runs in which aerosols were active. There are 3 different climatologies (QNWFA_QNIFA_Monthly_AWIP/GFS/ERA), coming from the use of 3 different reanalyses.

When using the ERA-Interim reanalysis, one should include in namelist.wps

&metgrid
 fg_name = 'FILE',
 io_form_metgrid = 2,
 opt_metgrid_tbl_path = './',
 constants_name = 'QNWFA_QNIFA_Monthly_ERA'
/

And then link the climatological file (in this case the ERA-Interim one) into the folder where metgrid.exe will be run

$ ln -s /share/GEOG/QNWFA_QNIFA_Monthly_ERA ./
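Before running metgrid.exe it may help to confirm that the link resolves and that namelist.wps names the same file. The following is only a minimal sketch for a POSIX shell; the function name check_aerosol_clim is hypothetical and not part of WPS.

```shell
# Hypothetical helper (not part of WPS): checks that the climatology file
# is reachable from the run folder and referenced by namelist.wps.
check_aerosol_clim() {
    clim="$1"                    # e.g. QNWFA_QNIFA_Monthly_ERA
    nml="${2:-namelist.wps}"     # namelist to inspect
    # -e follows symbolic links, so a dangling link fails here
    if [ ! -e "$clim" ]; then
        echo "missing or broken link: $clim" >&2
        return 1
    fi
    # constants_name must mention the same file name
    if ! grep -q "constants_name.*$clim" "$nml"; then
        echo "$nml does not reference $clim" >&2
        return 1
    fi
    echo "ok: $clim"
}
```

It would be called from the folder where metgrid.exe runs, for example `check_aerosol_clim QNWFA_QNIFA_Monthly_ERA`.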

In namelist.input one should have

mp_physics = 28
use_aero_icbc = .T.
aer_opt = 3

- use_aero_icbc is used to specify that we want to use the QNWFA_QNIFA_Monthly_* aerosol climatologies
- aer_opt is used to specify that we want the same aerosol climatologies for the microphysics and radiation schemes (only for RRTMG)
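A quick way to verify that the three settings are present in a given namelist.input could look like the sketch below. The function name check_aerosol_namelist is hypothetical, and the check only looks for the keys; it does not validate their values.

```shell
# Hypothetical helper: reports whether the three aerosol-aware settings
# named above appear in a namelist.input file.
check_aerosol_namelist() {
    nml="${1:-namelist.input}"
    status=0
    for key in mp_physics use_aero_icbc aer_opt; do
        # match "key =" at the start of a line, ignoring leading blanks
        line=$(grep -E "^[[:space:]]*${key}[[:space:]]*=" "$nml" || true)
        if [ -z "$line" ]; then
            echo "not set: $key"
            status=1
        else
            # print the matching line without its leading blanks
            echo "found: $(echo "$line" | sed 's/^[[:space:]]*//')"
        fi
    done
    return $status
}
```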

NOTE: Since WRF v3.9 there is a single new file for the aerosol climatologies: QNWFA_QNIFA_SIGMA_MONTHLY

namelists

Here are some namelists ready to be used

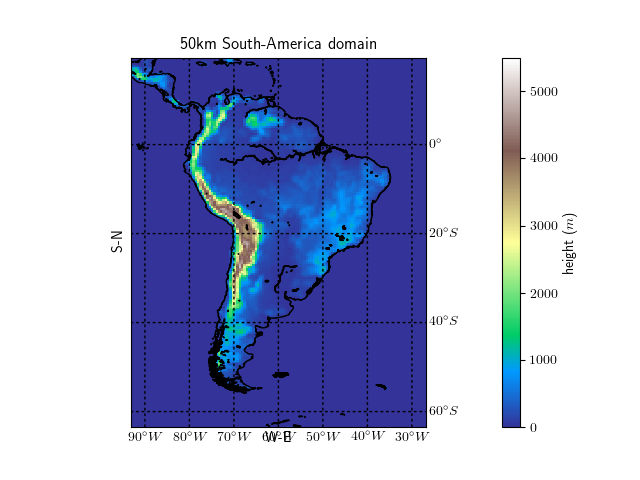

SA50k

Full South American domain at 50 km: Archivo:namelist.wps.SA50k.odt, Archivo:namelist.input.SA50k.odt (NOTE: convert to text files!)

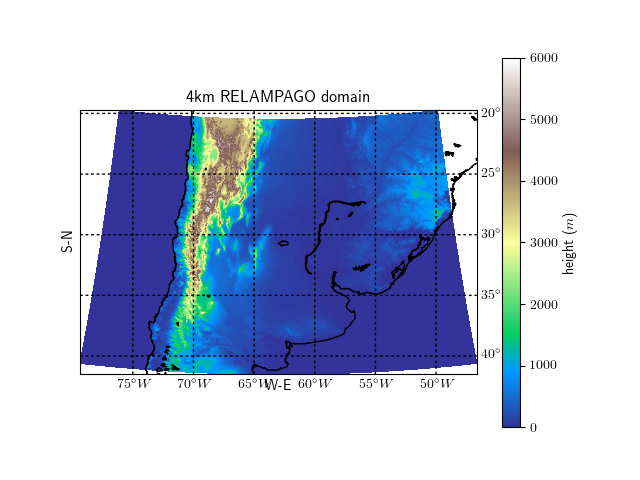

RELAMPAGO 1dom 4km

RELAMPAGO case studies, single domain at 4 km: Archivo:namelist.wps.RELAMPAGO1dom.odt, Archivo:namelist.input.RELAMPAGO1dom.odt (NOTE: convert to text files!)

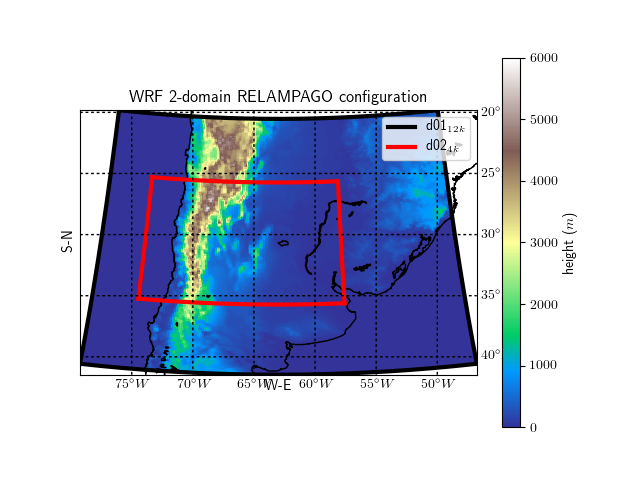

RELAMPAGO 2dom 12, 4km

RELAMPAGO case studies, nested domains at 12 and 4 km: Archivo:namelist.wps.RELAMPAGO2dom.odt, Archivo:namelist.input.RELAMPAGO2dom.odt (NOTE: convert to text files!)
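The namelists above are stored as .odt documents, so they have to be turned into plain text before WPS or WRF can read them. One possible way is LibreOffice in headless mode. The sketch below assumes that the soffice binary is installed and on the PATH; the function name odt_to_namelist and the DRY_RUN switch are illustrative only.

```shell
# Hypothetical helper: converts a downloaded .odt namelist to plain text
# with LibreOffice and stores it under the name WPS/WRF expects.
# Set DRY_RUN=1 to print the commands instead of running them.
odt_to_namelist() {
    odt="$1"                            # e.g. namelist.wps.SA50k.odt
    target="$2"                         # e.g. namelist.wps
    # output name LibreOffice is expected to use (.odt replaced by .txt)
    txt="$(basename "$odt" .odt).txt"
    run=""
    if [ "${DRY_RUN:-0}" = "1" ]; then
        run="echo"
    fi
    $run soffice --headless --convert-to txt:Text --outdir . "$odt" || return 1
    $run mv "$txt" "$target"
}
```

For example, `odt_to_namelist namelist.wps.SA50k.odt namelist.wps`. It is worth opening the result afterwards, since a word-processor export may carry stray characters.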

gcc compilation

By default, <hydra> uses the Intel C/Fortran compilers. However, the free gcc compilers are also available on <hydra>.

Here are the instructions followed to compile WRF using the gcc and gfortran compilers. In order to do that, one needs to compile all the required libraries (openmpi, zlib, curl, hdf5 and netcdf) using these compilers. udunits was not installed because of issues with cmake.

The steps below start from the folder where all the sources will be downloaded:

/share/tools/Downloads/

These compilation steps are kept inside the file /share/tools/Downloads/compilation.inf

1.- Compiling openmpi v3.0.0.

- Getting the source

$ wget https://www.open-mpi.org/software/ompi/v3.0/downloads/openmpi-3.0.0.tar.gz

- Decompressing

$ tar xvfz openmpi-3.0.0.tar.gz

- Entering the source folder

$ cd openmpi-3.0.0

- Creation of the folder where the libraries will be installed

$ mkdir -p /share/tools/bin/openmpi/3.0.0/gcc

- Configuring the compilation/installation

$ ./configure --prefix=/share/tools/bin/openmpi/3.0.0/gcc >& run_configure.log

- Looking for errors in run_configure.log. If there are none, run the make chain (ensuring each step goes fine)

$ make all >& run_make.log
$ make test >& run_make_test.log
$ make install >& run_make_install.log
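The log check mentioned above can be partly automated. The sketch below is an assumption about what "looking for errors" might mean in practice: it searches a log for the whole words "error" or "fatal". The function name scan_log is hypothetical, and a clean result does not replace reading the log.

```shell
# Hypothetical helper: prints lines that look like errors in a build log
# and returns non-zero if any are found.
scan_log() {
    log="$1"
    # -i ignores case, -n adds line numbers, -w matches whole words only,
    # so configure checks such as "strerror" are not reported
    if grep -n -i -w -E 'error|fatal' "$log"; then
        echo "possible problems in $log" >&2
        return 1
    fi
    echo "no error lines in $log"
}
```

A typical use would be `scan_log run_configure.log && make all >& run_make.log`.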

The procedure for all the other libraries is similar, but pay attention to provide the right installation folder and the right location of the newly compiled libraries
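Since the same configure/make chain is repeated for every library, it could be wrapped in a small helper. The following is a sketch, not the procedure actually used on the machine: run_step and build_lib are hypothetical names, and the log file names simply follow the ones used in this page.

```shell
# Hypothetical helper: runs one build step, keeps its output in a log,
# and reports failure so that the chain can stop.
run_step() {
    log="$1"; shift
    echo "running: $*"
    # "> log 2>&1" is the portable spelling of the ">&" used in this page
    if ! "$@" > "$log" 2>&1; then
        echo "step failed, see $log" >&2
        return 1
    fi
}

# Example chain for a library installed under the prefix given as $1
# (assumed layout: /share/tools/bin/<name>/<version>/gcc).
# Extra configure options would have to be added per library.
build_lib() {
    prefix="$1"
    mkdir -p "$prefix" &&
    run_step run_configure.log ./configure --prefix="$prefix" &&
    run_step make.log make &&
    run_step make_check.log make check &&
    run_step make_install.log make install
}
```

Run from inside an unpacked source folder, for example `build_lib /share/tools/bin/curl/7.57.0/gcc`. The `&&` chain stops at the first failing step, which matches the advice to ensure each step goes fine.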

2.- zlib-1.2.11

src: https://zlib.net/zlib-1.2.11.tar.gz
$ ./configure --prefix=/share/tools/bin/zlib/1.2.8/gcc >& run_configure.log
$ make >& make.log
$ make check >& make_check.log
$ make install >& make_install.log

3.- hdf5-1.10.1

src: hdf5-1.10.1.tar.bz2
$ export CPP=/usr/bin/cpp
$ apt-get install g++
$ ./configure --enable-fortran --with-zlib=/share/tools/bin/zlib/1.2.8/gcc/lib --prefix=/share/tools/bin/hdf5/1.10.1/gcc >& run_configure.log
$ make >& make.log
$ make check >& make_check.log
$ make install >& make_install.log

4.- libcurl-7.57.0

src: https://curl.haxx.se/download/curl-7.57.0.tar.gz
$ ./configure --prefix=/share/tools/bin/curl/7.57.0/gcc >& run_configure.log
$ make >& make.log
$ make check >& make_check.log
$ make install >& make_install.log

5.- netcdf-4.5.0

src: ftp://ftp.unidata.ucar.edu/pub/netcdf/netcdf-4.5.0.tar.gz
$ apt-get install curl
$ export LDFLAGS='-L/share/tools/bin/zlib/1.2.8/gcc/lib -L/share/tools/bin/hdf5/1.10.1/gcc/lib -L/share/tools/bin/curl/7.57.0/gcc/lib'
$ export CPPFLAGS='-I/share/tools/bin/zlib/1.2.8/gcc/include -I/share/tools/bin/hdf5/1.10.1/gcc/include -I/share/tools/bin/curl/7.57.0/gcc/include'
$ ./configure --enable-netcdf-4 --prefix=/share/tools/bin/netcdf/4.5.0/gcc >& run_configure.log
$ make >& make.log
$ make check >& make_check.log
$ make install >& make_install.log

Fortran interface

src: ftp://ftp.unidata.ucar.edu/pub/netcdf/netcdf-fortran-4.4.4.tar.gz
$ export LD_LIBRARY_PATH=/share/tools/bin/netcdf/4.5.0/gcc/lib:${LD_LIBRARY_PATH}
$ export LDFLAGS='-L/share/tools/bin/zlib/1.2.8/gcc/lib -L/share/tools/bin/hdf5/1.10.1/gcc/lib -L/share/tools/bin/curl/7.57.0/gcc/lib -L/share/tools/bin/netcdf/4.5.0/gcc/lib'
$ export CPPFLAGS='-I/share/tools/bin/zlib/1.2.8/gcc/include -I/share/tools/bin/hdf5/1.10.1/gcc/include -I/share/tools/bin/curl/7.57.0/gcc/include -I/share/tools/bin/netcdf/4.5.0/gcc/include'
$ ./configure --prefix=/share/tools/bin/netcdf/4.5.0/gcc >& run_configure.log
$ make >& make.log
$ make check >& make_check.log
$ make install >& make_install.log
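Once the libraries are installed, the WRF build has to find them. The lines below are a sketch of an environment that could be set before running WRF's configure script, assuming the installation prefixes used in the steps above. NETCDF and HDF5 are the variable names WRF's configure looks for; the rest (the base variable and the loop) is only one way of writing it and should be adapted to the actual layout.

```shell
# Assumed install prefixes, taken from the steps above
base=/share/tools/bin
export NETCDF=$base/netcdf/4.5.0/gcc      # C and Fortran netcdf share this prefix
export HDF5=$base/hdf5/1.10.1/gcc         # optional, for netCDF-4 features
# put the gcc-built MPI wrappers and the netcdf tools first in the PATH
export PATH=$base/openmpi/3.0.0/gcc/bin:$NETCDF/bin:$PATH
# run-time search path for the shared libraries built above
for lib in openmpi/3.0.0 zlib/1.2.8 hdf5/1.10.1 curl/7.57.0 netcdf/4.5.0; do
    LD_LIBRARY_PATH=$base/$lib/gcc/lib${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}
done
export LD_LIBRARY_PATH
```

Keeping these lines in a small file that is sourced before compiling and before running helps to avoid mixing the Intel-built and gcc-built libraries.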